Open table formats (Delta Lake, Apache Iceberg, and Apache Hudi) are the plumbing of modern data architectures. They turn chaotic object storage into queryable, transactional tables that teams can actually trust. In this article you’ll get a practical, no-nonsense comparison of the three, learn when to pick one over the others, and see how operational strategies like compaction, metadata design, and concurrency control change real-world performance.

Why open table formats matter (and why your CFO should care)

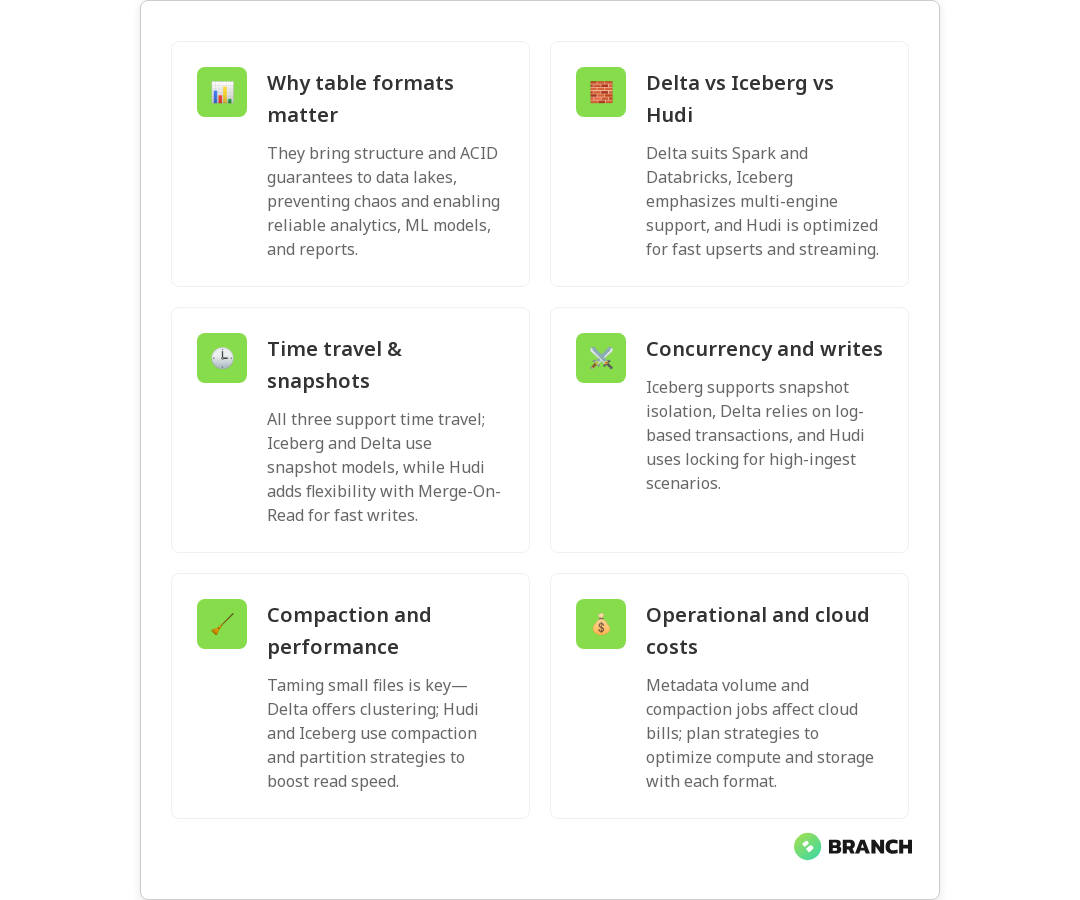

Data lakes without structure can become data swamps: lots of bytes, little reliability. Open table formats add a metadata layer and transactional guarantees (think ACID) on top of object storage, so downstream analytics, ML models, and business reports don’t break when someone backfills a partition or updates a record. The benefit is both technical (faster, more efficient queries; time travel for debugging) and financial (less wasted compute, fewer emergency engineering dives at 2 a.m.).

Different formats adopt different philosophies: Delta Lake started within the Databricks ecosystem, Iceberg emphasizes a snapshot-based, engine-agnostic approach, and Hudi focuses on ingestion latency and update patterns. For a clear architectural overview, Dremio’s breakdown is a helpful primer on how each format organizes metadata and snapshots: Dremio architecture.

Core concepts: table format vs file format

First, a short clarification often missed at meetings: a file format (Parquet, ORC, Avro) defines how rows and columns are encoded on disk. A table format (Delta, Iceberg, Hudi) defines how files are tracked, how transactions are coordinated, and how schema evolution and time travel are handled. Put simply: file formats store bytes; table formats manage the bytes and the story of those bytes over time.
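To make the split concrete, here is a minimal toy sketch in Python. It is not how any of the three formats is implemented: the `DataFile` and `Table` classes and the file names are invented for illustration. The "files" just hold rows, while the "table" tracks which files exist and what the current schema is, so adding a column becomes a metadata-only change.

```python
from dataclasses import dataclass, field


@dataclass
class DataFile:
    """Stand-in for one Parquet/ORC file: it only knows its own rows."""
    path: str
    rows: list


@dataclass
class Table:
    """Toy table format: tracks the current schema and the member files."""
    schema: list
    files: list = field(default_factory=list)

    def add_column(self, name):
        # Metadata-only change: no existing data file is rewritten.
        self.schema.append(name)

    def append(self, path, rows):
        self.files.append(DataFile(path, rows))

    def scan(self):
        # Project every row onto the current schema; columns that are
        # missing from older files are read as None.
        for data_file in self.files:
            for row in data_file.rows:
                yield {col: row.get(col) for col in self.schema}


table = Table(schema=["id", "amount"])
table.append("part-0.parquet", [{"id": 1, "amount": 10.0}])
table.add_column("currency")
table.append("part-1.parquet", [{"id": 2, "amount": 5.0, "currency": "EUR"}])

rows = list(table.scan())
print(rows)
```

The older file never learns about the new column; the table layer reconciles the two at read time, which is the "story of those bytes over time" part.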

Delta Lake’s blog gives a helpful explanation of how open table formats provide ACID transactions, time travel, and metadata handling that elevate raw files into trustworthy tables: Delta open table formats.

Feature-by-feature comparison

ACID transactions and metadata

All three support ACID semantics, but the implementations differ. Delta Lake uses a transaction log (an ordered sequence of JSON commit files, periodically summarized in Parquet checkpoint files); Iceberg uses a snapshot and manifest model in which table metadata points to manifests that list the data files; Hudi maintains its own timeline and metadata and offers two table types, Copy On Write (COW) and Merge On Read (MOR), which change how updates and reads interact.
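The log-based idea can be sketched in a few lines of Python. This is a simplified illustration, not the Delta Lake reader: the `commits` list and the `replay` function are hypothetical, and real commit files carry many more fields than the `path` shown here. The point is only that the current table state is whatever you get by replaying add and remove actions in order.

```python
import json

# Hypothetical commit files, ordered by version. Each line is one action.
commits = [
    '{"add": {"path": "part-0.parquet"}}\n{"add": {"path": "part-1.parquet"}}',
    '{"add": {"path": "part-2.parquet"}}',
    # A rewrite: two old files are logically removed, one new file is added.
    '{"remove": {"path": "part-0.parquet"}}\n'
    '{"remove": {"path": "part-1.parquet"}}\n'
    '{"add": {"path": "part-3.parquet"}}',
]


def replay(commits, up_to_version=None):
    """Return the set of live data files after replaying the log."""
    last = len(commits) - 1 if up_to_version is None else up_to_version
    live = set()
    for version, body in enumerate(commits):
        if version > last:
            break
        for line in body.splitlines():
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


print(sorted(replay(commits)))
print(sorted(replay(commits, up_to_version=0)))
```

Stopping the replay at an earlier version gives the table as it looked at that commit, which is why a log of this shape also supports time travel.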

Snapshotting, time travel, and reads

Iceberg’s snapshot-based design makes time travel and consistent reads across engines pretty straightforward. Delta also offers time travel and a robust log-based approach. Hudi’s MOR gives a hybrid option: fast writes with later compaction to optimize reads, which is great when ingestion latency and update frequency are high.
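As a rough illustration of snapshot-based time travel, the toy model below keeps an immutable file list per snapshot and resolves an "as of" timestamp to the latest snapshot at or before it. The `Snapshot` and `SnapshotTable` names are invented for this sketch, commits are assumed to arrive in increasing timestamp order, and real formats store manifests rather than bare file sets.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    timestamp_ms: int
    files: frozenset


class SnapshotTable:
    """Toy snapshot model: every commit produces a new immutable snapshot."""

    def __init__(self):
        self.snapshots = []  # assumed ordered by timestamp_ms

    def commit(self, timestamp_ms, added=(), removed=()):
        current = self.snapshots[-1].files if self.snapshots else frozenset()
        files = (current - frozenset(removed)) | frozenset(added)
        snapshot = Snapshot(len(self.snapshots) + 1, timestamp_ms, files)
        self.snapshots.append(snapshot)
        return snapshot

    def as_of(self, timestamp_ms):
        """Return the latest snapshot committed at or before timestamp_ms."""
        times = [s.timestamp_ms for s in self.snapshots]
        idx = bisect_right(times, timestamp_ms)
        if idx == 0:
            raise ValueError("no snapshot at or before that time")
        return self.snapshots[idx - 1]


t = SnapshotTable()
t.commit(1000, added=["a.parquet", "b.parquet"])
t.commit(2000, added=["c.parquet"], removed=["a.parquet"])

print(sorted(t.as_of(1500).files))
print(sorted(t.as_of(2000).files))
```

Because a reader pins one snapshot for the whole query, later commits cannot change what it sees, which is what makes reads consistent across engines.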

Concurrency and transactional models

Concurrency control matters when many jobs write to the same table. Iceberg emphasizes optimistic concurrency and snapshot isolation; Delta Lake’s log-based approach, which also relies on optimistic concurrency control, offers a strong transactional model across many engines (especially Spark); Hudi uses locking and timeline services suitable for high-ingest patterns.
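Optimistic concurrency can be sketched as "read the current version, do the work, commit only if nobody else committed in the meantime, otherwise retry". The code below is a single-threaded toy with invented names (`TableState`, `try_commit`, `commit_with_retry`); in a real system the version check and the write would have to be one atomic operation, and real implementations typically also check whether the intervening commits actually conflict before failing.

```python
class CommitConflict(Exception):
    """Raised when the table moved on since the writer read it."""


class TableState:
    def __init__(self):
        self.version = 0
        self.files = set()

    def try_commit(self, base_version, added):
        # Assumption: in a real system this check-and-write is atomic.
        if base_version != self.version:
            raise CommitConflict(
                f"expected version {base_version}, found {self.version}"
            )
        self.files |= set(added)
        self.version += 1
        return self.version


def commit_with_retry(table, added, max_attempts=3):
    for _ in range(max_attempts):
        base = table.version  # re-read the latest version on each attempt
        try:
            return table.try_commit(base, added)
        except CommitConflict:
            continue
    raise CommitConflict("gave up after repeated conflicts")


table = TableState()
base_a = table.version  # writer A reads version 0
base_b = table.version  # writer B also reads version 0

table.try_commit(base_a, ["a.parquet"])  # A wins, table is now at version 1

try:
    table.try_commit(base_b, ["b.parquet"])  # B's base version is stale
    conflicted = False
except CommitConflict:
    conflicted = True

final_version = commit_with_retry(table, ["b.parquet"])  # B retries on fresh state
print(conflicted, final_version)
```

No writer holds a lock while it works; the cost of a conflict is paid only by the writer that loses the race, which is why this model suits workloads where conflicts are rare.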

For a direct comparison of transactional handling and ingestion strategies, the LakeFS comparison is practical: Hudi vs Iceberg vs Delta comparison.

Updates, deletes, and CDC

If your use case requires frequent updates, deletes, or change-data-capture (CDC) downstream, Hudi and Delta historically have been strong because they emphasize record-level mutations and ingestion semantics. Iceberg has been catching up fast with features that make update/delete and partition evolution smoother while maintaining an engine-agnostic posture.
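The difference between applying updates at write time and at read time can be shown with a small toy. This is a sketch under simplifying assumptions, not Hudi's implementation: records are plain dicts keyed by a hypothetical `id` field, deletes are left out, and `copy_on_write` and `MergeOnReadTable` are names made up for the example.

```python
def copy_on_write(base, updates, key="id"):
    """Apply updates by producing a fully rewritten copy of the base records."""
    merged = {record[key]: record for record in base}
    for update in updates:
        merged[update[key]] = update
    return list(merged.values())


class MergeOnReadTable:
    """Toy merge-on-read table: cheap appends to a log, merge deferred to reads."""

    def __init__(self, base, key="id"):
        self.base = list(base)
        self.log = []
        self.key = key

    def upsert(self, record):
        self.log.append(record)  # write path: no base data is rewritten

    def read(self):
        # Read path pays the merge cost until the log is compacted.
        return copy_on_write(self.base, self.log, self.key)

    def compact(self):
        # Fold the log into the base so later reads are plain scans again.
        self.base = self.read()
        self.log = []


mor = MergeOnReadTable([{"id": 1, "v": "a"}, {"id": 2, "v": "b"}])
mor.upsert({"id": 2, "v": "B"})
mor.upsert({"id": 3, "v": "c"})

before = mor.read()
mor.compact()
after = mor.read()
print(before == after, len(mor.log))
```

The results are identical before and after compaction; what changes is where the merge work happens, which is the latency trade-off between fast ingestion and fast reads.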

Compaction, small files, and performance

Small files kill read performance and increase metadata churn. Each format has strategies: Delta provides the OPTIMIZE command for compaction, with optional Z-Order clustering; Hudi supports compaction for MOR tables and other tuning knobs; Iceberg relies on effective partition specs, file sizing practices, and maintenance actions that rewrite data files. AWS provides a practical guide to compaction and optimization techniques across formats when running on cloud object stores: AWS guide to choosing a table format.
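At its core, compaction planning is a bin-packing problem: group small files so that each rewritten file lands near a target size. The sketch below is a generic greedy planner, not the algorithm any of the three formats uses; `plan_compaction`, the `small_fraction` cutoff, and the file names and sizes are all assumptions made for illustration.

```python
def plan_compaction(files, target_size, small_fraction=0.75):
    """Group small files into rewrite batches of at most target_size.

    files: mapping of path -> size (any consistent unit).
    Files at or above target_size * small_fraction are left alone.
    """
    small = sorted(
        ((size, path) for path, size in files.items()
         if size < target_size * small_fraction),
        reverse=True,  # place the largest candidates first
    )
    groups = []  # each entry is [total_size, [paths]]
    for size, path in small:
        for group in groups:
            if group[0] + size <= target_size:
                group[0] += size
                group[1].append(path)
                break
        else:
            groups.append([size, [path]])
    # A group with a single file would be rewritten for no benefit.
    return [paths for _, paths in groups if len(paths) > 1]


# Hypothetical file sizes in MB, with a 128 MB target.
sizes = {"a": 10, "b": 20, "c": 200, "d": 60, "e": 50, "f": 30}
plan = plan_compaction(sizes, target_size=128)
print(plan)
```

In this example five small files become two rewrite groups and the already large file `c` is skipped, so the compaction job only touches data that benefits from being rewritten.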

Operational considerations and trade-offs

Picking a format is as much about operations as it is about features. Consider the following operational trade-offs:

- Engine compatibility: Iceberg was designed to be engine-agnostic and works well across engines (Spark, Flink, Trino). Delta is tightly integrated into Databricks and Spark but has gained wider engine support through the open-source Delta Lake project. Hudi focuses on ingestion patterns and integrates well with streaming ecosystems.

- Operational maturity: Are your engineers already familiar with Spark-based optimizations? Delta and Hudi may be a smoother fit. If you expect to query from many engines, Iceberg’s snapshot model is compelling.

- Ingestion patterns: If you need low-latency upserts from streaming sources, Hudi’s MOR and write-optimized patterns are beneficial. If you mostly append data and prefer a clear snapshot lifecycle, Iceberg might be simpler to operate.

Costs and cloud considerations

File metadata operations and compaction jobs cost compute and sometimes drive storage metadata growth. Your cloud bill will reflect the choices you make: frequent small writes and inefficient file layouts increase both compute and storage request costs. Plan compaction strategies, lifecycle policies, and monitoring to avoid surprises. For cost-related strategy tied to cloud operations, our cloud cost optimization guidance can be a big help.
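A quick back-of-the-envelope calculation shows why file layout matters. The sketch below only counts files and assumes, as a simplification, that a full scan needs at least one object-store request per file; the 1 TiB table and the two average file sizes are hypothetical, and no prices are implied.

```python
def files_to_scan(table_bytes, avg_file_bytes):
    """Number of files in a table of the given size (ceiling division)."""
    return -(-table_bytes // avg_file_bytes)


MIB = 1024 ** 2
TIB = 1024 ** 4

small_layout = files_to_scan(TIB, 1 * MIB)    # many tiny files
large_layout = files_to_scan(TIB, 128 * MIB)  # compacted files

print(small_layout, large_layout, small_layout // large_layout)
```

The same terabyte is spread over 128 times as many objects in the small-file layout, and every one of them is something the engine must list, open, and track in metadata.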

Trends and where each project is heading

Open table formats are converging on similar feature sets: Iceberg, Hudi, and Delta are borrowing good ideas from each other, including better handling for updates, richer metadata services, and improved cross-engine compatibility. Expect faster innovation around metadata scaling, snapshot compaction, and cloud-native integrations. For a snapshot of architectural differences and evolving capabilities, Dremio’s analysis remains useful: Dremio architecture.

Choosing the right format for real-world use cases

High-level guidance to match format to need:

- Engine diversity and multi-tool querying: Choose Iceberg for broad engine compatibility and snapshot semantics.

- Frequent updates, upserts, and streaming ingestion: Choose Hudi for ingestion patterns that require low-latency record-level updates and flexible compaction strategies.

- Spark-native analytics and integrated tooling (and a tight Databricks fit): Choose Delta Lake for mature Spark integration, strong transaction logging, and features like Z-Order clustering.

In many enterprises, the right answer might be “it depends,” and sometimes teams run more than one format in the same ecosystem depending on workload types. The AWS comparison article gives actionable tips when selecting formats on cloud storage: AWS blog.

FAQ

What is meant by data governance?

Data governance is the set of processes, policies, roles, standards, and metrics that ensure effective and efficient use of data. In a data mesh, these responsibilities are federated across domains rather than centralized in one team.

What is the difference between data governance and data management?

Data management is the day-to-day operation of moving, storing, and processing data. Data governance defines the rules, roles, and policies that guide how data is managed and ensures it meets organizational requirements.

What are good data governance practices?

Best practices include clear ownership, standardized metadata, automated enforcement of policies, monitoring governance KPIs, and starting with a minimal viable governance approach that grows with domain maturity.

What are the three components of data governance?

Data governance typically consists of people (roles and responsibilities), processes (policies and workflows), and technology (tools and automation). In a mesh, these components are distributed and coordinated via a federated council.

What is a data governance framework?

A data governance framework defines the policies, standards, roles, and tools for managing and protecting data. In a data mesh, the framework emphasizes federation, metadata standards, and automation for scalable governance.

Final thoughts (the short version for the meeting with the execs)

If you need broad query engine compatibility and clear snapshot semantics, look at Iceberg. If you need record-level upserts, fast streaming ingestion, and flexible compaction, Hudi is compelling. If your stack is Spark-first and you value strong transaction logging and Databricks-integrated features, Delta Lake is an excellent choice. Whatever you pick, add operational guardrails: compaction strategies, monitoring for small files, and clear schema evolution policies.

And remember: the format is a tool, not a destination. Align the choice with team skills, expected workloads, and long-term interoperability goals. If you want help mapping your use case to an implementation plan, our data engineering and AI teams can help build a practical roadmap and implementation strategy that keeps both engineers and finance people happy.