Stream processing is the engine behind real-time features: fraud detection, live analytics, telemetry from IoT devices, and any system that needs to act on events as they happen. Choosing between Amazon Kinesis, Azure Event Hubs, and Google Pub/Sub matters because each platform offers different guarantees, scaling models, and ecosystem integration — and those differences directly affect reliability, cost, and developer experience. In this article you’ll get a practical comparison of the three, guidance for picking the right one for your use case, and common pitfalls to avoid.

Why stream processing matters (and why it’s more than “messaging”)

Traditional batch processing is like checking a mailbox once a day: you get everything in one go and react afterward. Stream processing is more like watching an inbox that’s constantly refreshing — you can detect patterns, alert, and adjust in near real time. For businesses, that means faster customer experiences, reduced risk, and new product capabilities that simply weren’t possible with periodic processing.

Core concepts to keep in mind

- Throughput and partitions: How many events per second the system must handle, and how it shards (partitions) data to spread that load.

- Retention: How long messages are kept for reprocessing or late consumers.

- Ordering guarantees: Whether events are ordered per key/partition and whether exactly-once processing is available.

- Integration: How well the service plays with your compute, analytics, monitoring, and cross-cloud needs.

- Operational model: Fully managed convenience vs. control for custom tuning.

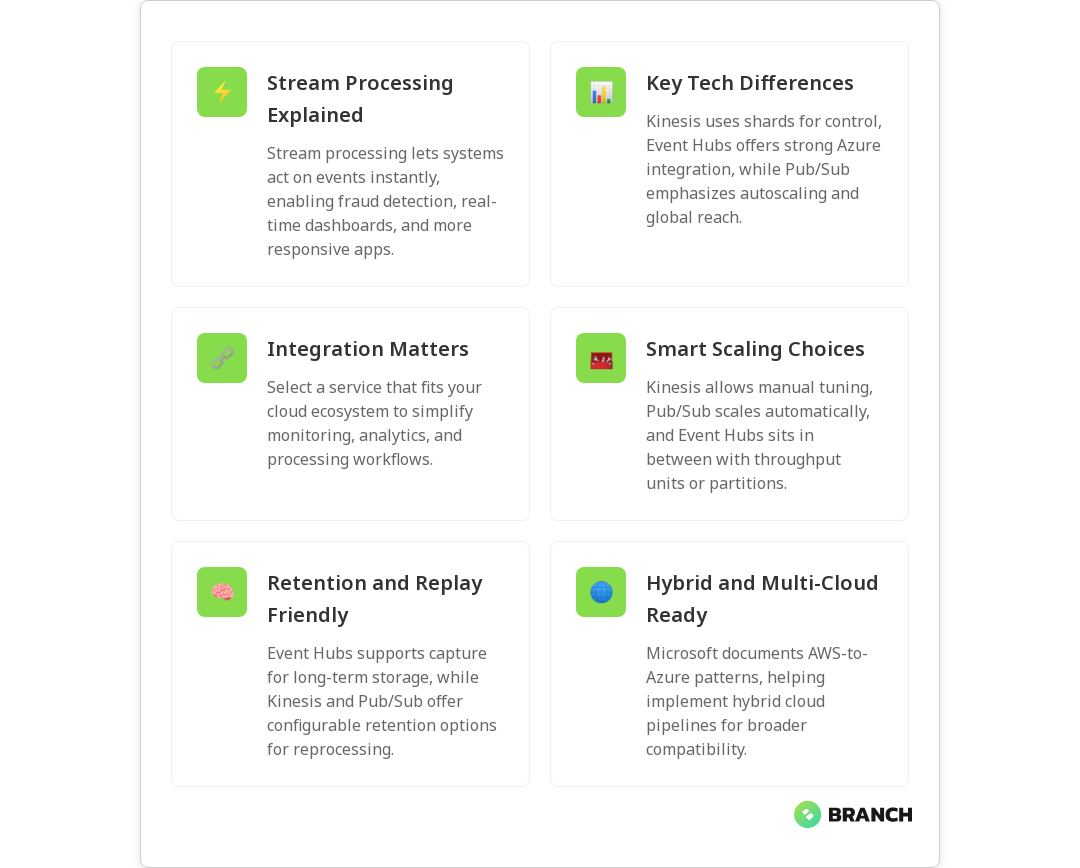

Quick overview: What each service is best at

Amazon Kinesis

Kinesis Data Streams is designed for very high write throughput and integrates tightly with AWS compute (AWS Lambda, Amazon EMR, and Amazon Managed Service for Apache Flink, formerly Kinesis Data Analytics). In provisioned mode it scales by shards, each with fixed capacity: 1 MB/s or 1,000 records/s for writes and 2 MB/s for reads. An on-demand mode manages shard capacity for you. Kinesis also supports multiple consumers via enhanced fan-out and retains events for replay. If your stack is AWS-centric and you need fine-grained throughput control, Kinesis is a natural fit.
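To make the shard model concrete, here is a minimal sizing sketch in Python. It uses the published per-shard limits for provisioned mode and assumes partition keys spread load evenly. The `shards_needed` helper is hypothetical, not part of any AWS SDK, and a real plan should add headroom for hot keys and growth.

```python
import math

# Published per-shard limits for Kinesis Data Streams in provisioned mode.
WRITE_MB_PER_SHARD = 1.0         # 1 MB/s of writes per shard
WRITE_RECORDS_PER_SHARD = 1_000  # or 1,000 records/s, whichever is hit first
READ_MB_PER_SHARD = 2.0          # 2 MB/s of reads, shared by all standard consumers


def shards_needed(write_mb_s: float, records_s: float, standard_consumers: int = 1) -> int:
    """Hypothetical helper: minimum shard count that keeps every limit satisfied.

    Assumes partition keys spread load evenly across shards.
    """
    by_write_bytes = math.ceil(write_mb_s / WRITE_MB_PER_SHARD)
    by_write_records = math.ceil(records_s / WRITE_RECORDS_PER_SHARD)
    # Each standard consumer reads the whole stream, so read volume grows with the consumer count.
    by_reads = math.ceil(write_mb_s * standard_consumers / READ_MB_PER_SHARD)
    return max(1, by_write_bytes, by_write_records, by_reads)


print(shards_needed(5, 3_000))                        # 5: write bandwidth dominates
print(shards_needed(5, 3_000, standard_consumers=3))  # 8: shared reads dominate
```

The second call shows why enhanced fan-out matters: without it, three standard consumers push the shard count from 5 to 8 purely to satisfy reads.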

Azure Event Hubs

Event Hubs is a scalable, low-latency event ingestion service built for Azure-first architectures. It offers partitioning, Capture to Azure Blob Storage or Azure Data Lake Storage, and strong integrations with Azure analytics (Azure Data Explorer, Microsoft Fabric Real-Time Intelligence). If you’re leveraging Azure analytics or want tight integration with Azure’s real-time tooling, Event Hubs is very compelling. Microsoft even documents patterns that connect Amazon Kinesis as a source into Microsoft Fabric eventstreams, which helps hybrid or multi-cloud scenarios.

The Microsoft Learn article “Add Amazon Kinesis” shows practical integration steps if you’re mixing AWS and Azure services.

Google Pub/Sub

Pub/Sub is a globally distributed, fully managed messaging service that scales horizontally with no capacity to provision. It’s a solid match when publishers and subscribers span regions, when you want a single global topic instead of per-region routing, or when you’re building serverless pipelines that must absorb traffic spikes. Pub/Sub exposes no shards or partitions to manage, which suits teams that prefer to avoid capacity planning.

Low-level comparison: operational and technical differences

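- Scaling model: Kinesis in provisioned mode requires shard planning (resharding can be automated with tooling), while its on-demand mode adjusts capacity automatically. Event Hubs pairs a partition count with purchased capacity (throughput units on Standard, processing units on Premium, capacity units on Dedicated), and auto-inflate can raise Standard-tier throughput units under load. Pub/Sub scales horizontally with nothing to provision.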

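- Retention: Kinesis retains records for 24 hours by default, extendable to 7 days (extended retention) or up to 365 days (long-term retention). Event Hubs allows up to 7 days on Standard and up to 90 days on Premium and Dedicated, with Capture to storage for anything longer. Pub/Sub subscriptions keep unacknowledged messages for 7 days by default, topics can retain messages for up to 31 days, and seeking to a timestamp or snapshot enables replay.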

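- Ordering and delivery: Kinesis orders records within a shard and Event Hubs within a partition; in both, records that share a partition key land in the same shard or partition. Pub/Sub guarantees ordering only for messages published with the same ordering key to a subscription that has message ordering enabled. Pub/Sub also offers exactly-once delivery on pull subscriptions, but end-to-end exactly-once processing typically still depends on the processing framework or on idempotent writes to the sink.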

- Integrations & ecosystem: Kinesis is native to AWS services; Event Hubs plugs into Azure analytics and real-time intelligence features (see Microsoft’s comparison of Azure Real-Time Intelligence and comparable solutions); Pub/Sub is tightly integrated with Google Cloud services like Dataflow.

- Latency: All three are low-latency, but perceived latency depends more on consumer architecture (serverless vs long-running processes), network hops, and regional configuration.

Microsoft Learn’s comparison of Real-Time Intelligence with comparable solutions explains how Event Hubs and Azure analytics work together, which helps when you plan an Azure-centric streaming pipeline.
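Per-key ordering follows from how keys are routed. Kinesis, for example, hashes each partition key with MD5 into a 128-bit integer and writes the record to the shard whose hash key range contains that value. The Python sketch below assumes the hash key space is split evenly across shards (real ranges come from the stream’s shard metadata), and `shard_for_key` is a hypothetical helper:

```python
import hashlib
from collections import defaultdict

HASH_SPACE = 2 ** 128  # partition keys map onto a 128-bit hash key space


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Hypothetical helper: pick a shard, assuming evenly split hash key ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    range_size = HASH_SPACE // shard_count
    # min() folds any remainder at the top of the space into the last shard.
    return min(hash_value // range_size, shard_count - 1)


# Events in publish order: (partition key, per-device sequence number).
events = [("device-a", 1), ("device-b", 1), ("device-a", 2), ("device-c", 1), ("device-a", 3)]

shards = defaultdict(list)
for key, seq in events:
    shards[shard_for_key(key, 4)].append((key, seq))

# Same key -> same shard, so device-a's events keep their relative order.
# Different keys may land on different shards, with no ordering across shards.
for shard_id, records in sorted(shards.items()):
    print(shard_id, records)
```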

Real-world selection criteria: pick based on business needs, not buzz

- Ecosystem alignment: If your systems live mostly in one cloud, default to that vendor’s streaming service — integration saves time and risk.

- Operational expertise: Do you have SREs who want to tune shards/throughput, or a small team that prefers hands-off scaling? Kinesis offers fine-grained control; Pub/Sub has the least operational overhead.

- Throughput predictability: For predictable high throughput, Kinesis’s shard model can be cost-effective. For spiky global workloads, Pub/Sub’s autoscaling avoids paying for idle capacity provisioned for peaks.

- Retention and replay needs: If you anticipate frequent reprocessing, choose a service with easy capture to durable storage (Event Hubs capture to storage is useful here).

- Multi-cloud and hybrid: If you need to stitch streams across clouds, plan integration layers early; Microsoft documentation includes patterns for bringing AWS Kinesis into Azure real-time pipelines, which is handy for hybrid scenarios.
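Replay amounts to re-reading a retained log from an earlier position, much like reading a Kinesis shard with an AT_TIMESTAMP iterator or seeking a Pub/Sub subscription to a timestamp. This in-memory Python sketch stands in for a single partition and is not any provider’s API. `RetainedLog` and its methods are hypothetical, and the sketch assumes events arrive in timestamp order:

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds, assigned on arrival
    payload: str


class RetainedLog:
    """Hypothetical in-memory stand-in for a partition with time-based retention."""

    def __init__(self, retention_seconds: float):
        self.retention_seconds = retention_seconds
        self.events: list[Event] = []

    def append(self, event: Event) -> None:
        self.events.append(event)  # assumes arrival order matches timestamp order

    def expire(self, now: float) -> None:
        """Drop events older than the retention window."""
        cutoff = now - self.retention_seconds
        self.events = [e for e in self.events if e.timestamp >= cutoff]

    def replay_from(self, start: float) -> list[Event]:
        """Return every retained event at or after `start` (a seek to a timestamp)."""
        idx = bisect_left([e.timestamp for e in self.events], start)
        return self.events[idx:]


log = RetainedLog(retention_seconds=24 * 3600)
for t, p in [(0, "a"), (3_600, "b"), (90_000, "c")]:
    log.append(Event(t, p))
log.expire(now=90_000)  # "a" is 90,000 s old, past the 86,400 s window
print([e.payload for e in log.replay_from(3_000)])  # ['b', 'c']
```

Anything older than the retention window is gone, which is why long-term reprocessing usually relies on capture to durable storage rather than on broker retention alone.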

Architecture patterns and processing frameworks

The choice of processing framework often matters as much as the messaging layer. Popular frameworks include Apache Flink for stateful stream processing, Kafka Streams where Kafka is used, and managed options like Amazon Managed Service for Apache Flink or Google Cloud Dataflow. You’ll commonly see these patterns:

- Ingest → Process → Store: Events land in the broker, a stream processor enriches/aggregates, results go to a database or analytics store.

- Capture for long-term analysis: Event Hubs’ capture feature or consumer-side writes to object storage are common to enable historical reprocessing.

- Serverless/event-driven: Functions (for example, AWS Lambda or Azure Functions) consume events for lightweight transforms, alerts, or fan-out tasks.
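The Ingest → Process → Store pattern can be sketched as three chained Python generators. This is a toy, single-process illustration: the byte strings stand in for broker messages, a `Counter` stands in for the database, JSON payloads are an assumption, and the stage functions are hypothetical names rather than any framework’s API:

```python
import json
from collections import Counter
from typing import Iterable, Iterator


def ingest(raw_messages: Iterable[bytes]) -> Iterator[dict]:
    """Ingest: decode raw broker payloads (assumed to be JSON)."""
    for raw in raw_messages:
        yield json.loads(raw)


def process(events: Iterable[dict]) -> Iterator[dict]:
    """Process: drop malformed events and normalize user IDs."""
    for event in events:
        if "user" not in event or "amount" not in event:
            continue  # a real pipeline might route these to a dead-letter queue
        yield {"user": event["user"].lower(), "amount": event["amount"]}


def store(events: Iterable[dict], sink: Counter) -> None:
    """Store: aggregate amounts per user into the sink."""
    for event in events:
        sink[event["user"]] += event["amount"]


messages = [
    b'{"user": "U1", "amount": 250}',
    b'{"user": "u2", "amount": 1200}',
    b'{"bad": true}',
    b'{"user": "u1", "amount": 50}',
]
totals = Counter()
store(process(ingest(messages)), totals)
print(dict(totals))  # {'u1': 300, 'u2': 1200}
```

Because generators are lazy, each event flows through all three stages before the next one is read, which mirrors per-event streaming rather than a batch job.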

A practical note: many teams use a broker purely for ingestion and buffering, then run stateful processing in a framework that provides stronger semantics (checkpointing, windowing, state backends) to achieve exactly-once or low-latency aggregations.
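One simple way to get exactly-once effects on top of at-least-once delivery is to combine checkpoints with idempotent writes. The Python sketch below is a simplified stand-in for what frameworks do with distributed snapshots and transactional sinks: a consumer crashes after writing but before checkpointing, re-reads the same records on restart, and the sink ignores the duplicates. `IdempotentSink` and `consume` are hypothetical names:

```python
from dataclasses import dataclass, field


@dataclass
class IdempotentSink:
    """Applies each (partition, sequence) pair at most once."""
    applied: set = field(default_factory=set)
    total: int = 0

    def write(self, partition: str, sequence: int, value: int) -> None:
        if (partition, sequence) in self.applied:
            return  # duplicate caused by a replay after a crash
        self.applied.add((partition, sequence))
        self.total += value


def consume(records, sink, checkpoint, partition, crash_after=None):
    """Write records newer than `checkpoint` and return the new checkpoint.

    With `crash_after` set, stop after that many writes and return the old
    checkpoint, simulating a crash between the write and the checkpoint commit.
    """
    last = checkpoint
    writes = 0
    for sequence, value in records:
        if sequence <= checkpoint:
            continue  # already covered by the committed checkpoint
        sink.write(partition, sequence, value)
        writes += 1
        last = sequence
        if crash_after is not None and writes == crash_after:
            return checkpoint  # crash: the checkpoint never advanced
    return last


records = [(1, 10), (2, 20), (3, 30)]  # (sequence number, value)
sink = IdempotentSink()
cp = consume(records, sink, checkpoint=0, partition="shard-0", crash_after=2)
cp = consume(records, sink, checkpoint=cp, partition="shard-0")  # restart re-reads 1 and 2
print(sink.total, cp)  # 60 3
```

Without the duplicate check, the replay would double-count records 1 and 2 and the total would be 90.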

Scott Logic’s “Comparing Apache Kafka, Amazon Kinesis, Microsoft Event Hubs” is a helpful technical read on how retention and messaging behavior differ across these systems.

Costs and performance tuning tips

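- Shard/partition planning: Underprovisioning shards in Kinesis leads to throttling (ProvisionedThroughputExceededException); overprovisioning wastes money. Watch the WriteProvisionedThroughputExceeded and ReadProvisionedThroughputExceeded metrics, along with consumer lag (GetRecords.IteratorAgeMilliseconds).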

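- Consumer scaling: For high fan-out in Kinesis, use enhanced fan-out, which gives each registered consumer a dedicated 2 MB/s per shard instead of sharing the default read throughput. In Event Hubs, give each application its own consumer group; in Pub/Sub, its own subscription.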

- Batching and serialization: Batch small events where possible and choose compact serialization (Avro/Protobuf) to reduce bandwidth and cost.

- Monitoring: Instrument lag, throughput, and error metrics; set alerts on consumer lag and throttles.
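For the batching tip, a producer typically accumulates small events until it reaches the service’s per-request limits. This Python sketch uses the Kinesis PutRecords limits (500 records and 5 MiB per call) as defaults. `batch_records` is a hypothetical helper; it ignores partition-key bytes and does not send anything:

```python
from typing import Iterable, Iterator

MAX_RECORDS_PER_BATCH = 500        # PutRecords accepts up to 500 records per call
MAX_BATCH_BYTES = 5 * 1024 * 1024  # ...and up to 5 MiB per call


def batch_records(payloads: Iterable[bytes],
                  max_records: int = MAX_RECORDS_PER_BATCH,
                  max_bytes: int = MAX_BATCH_BYTES) -> Iterator[list[bytes]]:
    """Greedily pack payloads into batches that respect count and size limits."""
    batch: list[bytes] = []
    batch_bytes = 0
    for payload in payloads:
        if len(payload) > max_bytes:
            raise ValueError("single payload exceeds the batch size limit")
        # Flush the current batch if adding this payload would break a limit.
        if batch and (len(batch) == max_records or batch_bytes + len(payload) > max_bytes):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(payload)
        batch_bytes += len(payload)
    if batch:
        yield batch


print([len(b) for b in batch_records([b"x" * 100] * 1_200)])  # [500, 500, 200]
```

Each batch would then go out as one request. PutRecords can still partially fail, so check FailedRecordCount in the response and retry the failed entries.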

Common challenges and how to avoid them

- Hidden ordering assumptions: Developers often assume global ordering. Design for per-partition ordering instead, and choose partition keys so that events that must stay in order share a key.

- Late-arriving data: Use event-time windows with an explicit allowed-lateness bound (watermarks in frameworks like Flink or Dataflow), and keep enough retention to reprocess data that arrives later still.

- Cross-cloud complexity: Integrating streams across providers adds latency and new failure modes. Use documented connectors and test thoroughly; Microsoft’s docs, for example, show how to bring Amazon Kinesis into Microsoft Fabric eventstreams.
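Handling late data usually means windowing by event time and tracking a watermark. The Python sketch below is deliberately simplified: the watermark is just the largest event time seen so far (real frameworks usually subtract an out-of-orderness bound), the window and lateness values are arbitrary assumptions, and `window_counts` is a hypothetical helper:

```python
from collections import defaultdict

WINDOW = 60            # tumbling window length, seconds of event time (assumed)
ALLOWED_LATENESS = 30  # how far past a window's end late events are still accepted (assumed)


def window_counts(events):
    """Count events per (window start, key) for tumbling event-time windows.

    `events` arrive in processing order as (event_time, key). An event is
    dropped if the watermark is more than ALLOWED_LATENESS past its window's end.
    """
    counts = defaultdict(int)
    dropped = []
    watermark = float("-inf")
    for event_time, key in events:
        watermark = max(watermark, event_time)
        window_start = event_time - event_time % WINDOW
        window_end = window_start + WINDOW
        if watermark - window_end > ALLOWED_LATENESS:
            dropped.append((event_time, key))  # side output: reprocess from retention later
            continue
        counts[(window_start, key)] += 1
    return dict(counts), dropped


events = [(5, "a"), (62, "a"), (58, "a"), (130, "a"), (10, "a")]
counts, dropped = window_counts(events)
print(counts)   # {(0, 'a'): 2, (60, 'a'): 1, (120, 'a'): 1}
print(dropped)  # [(10, 'a')]
```

The event at t=58 arrives after t=62 but is still counted because it falls within the allowed lateness; the event at t=10 arrives after the watermark has moved 70 seconds past its window’s end, so it is dropped.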

When to choose each service — quick decision guide

- Pick Kinesis if: you’re heavily invested in AWS, need precise throughput control, and want tight integration with AWS analytics and Lambda.

- Pick Event Hubs if: you’re Azure-first, plan to use Azure analytics and capture features, or want a first-class integration with Azure real-time tools.

- Pick Pub/Sub if: you need global distribution, automatic scaling, and a simple model for serverless pipelines across regions.

Whichever service you choose, expect to combine the broker with a processing framework that provides the semantics you need (windowing, state, exactly-once) and plan for observability and reprocessing from the start.

Trends and what to watch

- Convergence with analytics: Cloud providers are blurring lines between ingestion and analytics (capture pipelines, integrated real-time analytics). Check provider docs to see best practices for integration.

- Serverless stream processing: More serverless processors and connectors are emerging to reduce operational overhead.

- Multi-cloud streaming fabrics: Cross-cloud event meshes and connectors are maturing to support hybrid architectures, but complexity remains.

FAQ

What do you mean by stream processing?

Stream processing is continuous computation over events as they arrive. Instead of waiting for batches, systems ingest events in real time, apply transformations or aggregations, and produce outputs or actions immediately. It’s the backbone of live dashboards, alerting systems, and many IoT and financial systems.

How is stream processing different from traditional data processing?

Traditional (batch) processing groups data and processes it at intervals. Stream processing handles each event — or small windows of events — continuously, which reduces latency and enables near-instant reactions. Architecturally, stream processing often requires different considerations for state management, windowing, and fault tolerance.
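The difference in state management shows up even in a simple average. In this Python sketch (illustrative names, not a framework API), the batch version needs the whole dataset, while the streaming version keeps only a count and a mean and updates its answer on every event:

```python
def batch_average(values: list[float]) -> float:
    """Batch: wait for the full dataset, then compute once."""
    return sum(values) / len(values)


class RunningAverage:
    """Streaming: keep constant-size state and update the answer per event."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        self.count += 1
        self.mean += (value - self.mean) / self.count  # incremental mean update
        return self.mean


readings = [10.0, 20.0, 30.0, 40.0]
stream = RunningAverage()
results = [stream.update(r) for r in readings]
print(results)                   # [10.0, 15.0, 20.0, 25.0]
print(batch_average(readings))   # 25.0
```

Both reach the same final answer, but the streaming version has a usable result after every event.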

Why use stream processing?

Use stream processing when you need low-latency responses, continuous analytics, or the ability to react to events as they happen (fraud alerts, personalization, telemetry monitoring). It helps businesses reduce time-to-action and create features that rely on immediate context.

What is stream processing in IoT?

In IoT, stream processing handles high-volume telemetry from sensors and devices. It aggregates, filters, and analyzes the data in real time to detect anomalies, trigger actuations, or update dashboards. Given IoT’s scale and often spiky traffic, choosing a platform that can autoscale and handle throughput is crucial.
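As a concrete example of per-device anomaly detection, this Python sketch keeps a short rolling window for each device and flags readings that sit far outside it. The window size and the 3-standard-deviation threshold are assumptions to tune for your sensors, and `detect_anomalies` is a hypothetical helper:

```python
from collections import defaultdict, deque
from statistics import mean, pstdev

WINDOW_SIZE = 5  # recent readings kept per device (assumed)
THRESHOLD = 3.0  # flag readings more than 3 standard deviations from the window mean (assumed)


def detect_anomalies(readings):
    """Yield (device_id, value) for readings far outside that device's recent history."""
    history = defaultdict(lambda: deque(maxlen=WINDOW_SIZE))
    for device_id, value in readings:
        window = history[device_id]
        if len(window) == WINDOW_SIZE:
            mu, sigma = mean(window), pstdev(window)
            if sigma > 0 and abs(value - mu) > THRESHOLD * sigma:
                yield device_id, value
        window.append(value)  # the oldest reading falls off automatically


readings = [("t1", v) for v in [20.0, 21.0, 20.5, 20.8, 21.2, 35.0, 21.0]]
print(list(detect_anomalies(readings)))  # [('t1', 35.0)]
```

Flagged readings are still appended to the window here, which makes the detector less sensitive right after a spike; excluding them is an equally valid design choice.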

What is a stream processing framework?

A stream processing framework is the software layer that consumes events from a broker and performs stateful or stateless computations (windowing, joins, aggregations). Examples include Apache Flink and managed services like Amazon Managed Service for Apache Flink or Google Cloud Dataflow. Frameworks handle checkpointing, state management, and recovery semantics needed for reliable processing.