Choosing storage for a data lake feels a bit like picking a city to move to: you want scalability, good neighborhood integrations, reasonable taxes (a.k.a. cost), and a sense that your stuff won’t vanish overnight. In cloud terms, that decision usually comes down to Amazon S3, Azure Blob Storage, or Google Cloud Storage. Each has strengths for different data lake patterns, analytics workflows, and team skills. In this article you’ll get a practical comparison across architecture, performance, pricing patterns, security, and real-world trade-offs so you can pick the best fit for your next data lake.

Why object storage is the default for data lakes

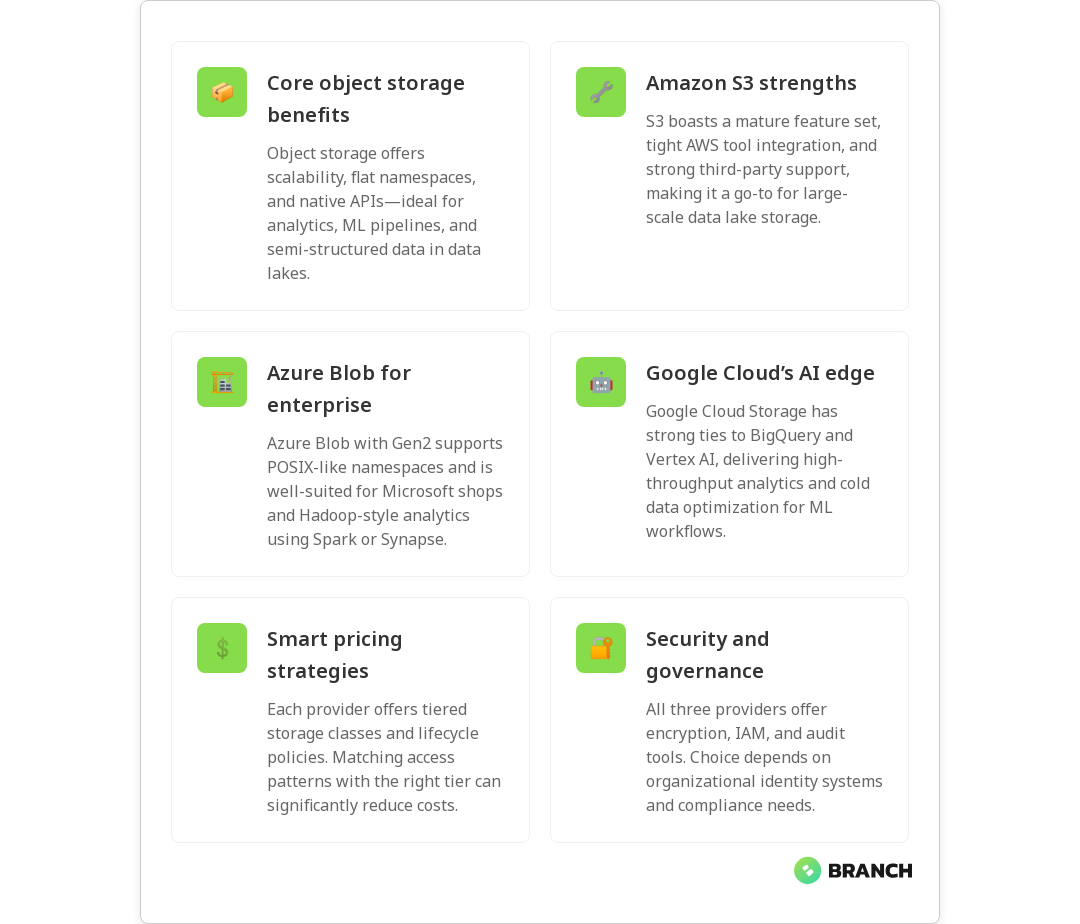

Modern data lakes are built on object storage because it can store massive amounts of unstructured and semi-structured data cheaply and reliably. Object stores provide flat namespaces, immutability options, lifecycle policies, and native APIs that work well with analytics engines, ETL pipelines, and machine learning systems.

All three major providers (Amazon S3, Azure Blob Storage, and Google Cloud Storage) are designed for high durability and availability and integrate tightly with their cloud ecosystems. If you want to compare vendor docs directly, see the Amazon S3 and Google Cloud Storage product pages and the Azure Blob Storage pricing page.

Core differences at a glance

- Amazon S3 – Mature feature set, unparalleled ecosystem integration with AWS analytics tools, broad third-party support, and advanced object-level features (versioning, replication, lifecycle rules).

- Azure Blob Storage – Strong integration with Azure analytics (including Data Lake Storage Gen2 capabilities), hierarchical namespace option for POSIX-like behavior, and pricing tiers tuned for enterprise patterns.

- Google Cloud Storage – Seamless integration with BigQuery and Vertex AI, storage classes optimized for long-tail and cold data, and excellent performance for analytics workloads.

Scalability, durability, and consistency

All three platforms are designed for very high durability (at least 11 nines, or 99.999999999%, of objects retained per year) and scale to effectively unlimited capacity. Where they differ is in consistency and performance characteristics:

- S3: Since December 2020, S3 has provided strong read-after-write consistency for all PUT and DELETE operations, including overwrites, as well as for list operations. Older guidance about eventual consistency for overwrites and deletes no longer applies, although cross-region replication remains asynchronous. Its worldwide adoption means many analytical tools have tuned connectors for S3.

- Azure Blob (with Data Lake Gen2): Offers a hierarchical namespace that behaves more like a file system. This helps with directory operations, atomic renames, and the small-file handling common in Hadoop-style workloads. Consistency is strong for most operations within a storage account.

- Google Cloud Storage: Provides strong global consistency and is optimized for high-throughput analytics, often pairing nicely with BigQuery for serverless analytics over large datasets.
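
To make the "11 nines" figure concrete, here is a minimal sketch of the arithmetic. It assumes each object is lost independently with the stated annual probability, which is a simplification of how providers define their design targets; the function names are illustrative.

```python
def expected_annual_loss(object_count: int, nines: int = 11) -> float:
    """Expected number of objects lost per year at a given durability target."""
    # Assumption: 11 nines means a 1e-11 chance of losing any one object per year.
    annual_loss_probability = 10.0 ** -nines
    return object_count * annual_loss_probability


def years_per_lost_object(object_count: int, nines: int = 11) -> float:
    """Average number of years between single-object losses."""
    return 1.0 / expected_annual_loss(object_count, nines)


if __name__ == "__main__":
    objects = 10_000_000
    print(f"expected losses per year: {expected_annual_loss(objects):.6f}")
    print(f"years per lost object: {years_per_lost_object(objects):,.0f}")
```

Under that assumption, a lake holding ten million objects would expect to lose a single object roughly once every ten thousand years. In practice, accidental deletes and bad pipeline writes are a far more likely source of data loss than the storage layer itself.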

Data lake features and analytics integrations

The real power of object storage for data lakes is how it integrates with compute and analytics services.

- Amazon S3 integrates with AWS analytics (Glue, Athena, Redshift Spectrum, EMR) and many third-party tools. S3’s feature set includes selective replication (for example, Cross-Region Replication scoped to particular prefixes or tags), S3 Object Lock for immutability, and event notifications to trigger pipelines.

- Azure Blob + Data Lake Storage Gen2 targets big data workloads with a hierarchical namespace, making it friendly for Hadoop, Spark, and ADLS-native tools. It’s commonly used with Azure Synapse, Databricks on Azure, and Azure Data Factory.

- Google Cloud Storage is built to work smoothly with BigQuery, Dataproc, and Vertex AI. If your roadmap includes heavy use of BigQuery or Vertex AI for ML, GCS often feels like the path of least resistance.
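
One practical consequence of these integrations is that each store is addressed with a different URI scheme in Spark and Hadoop-style engines. The helper below is a small sketch of those conventions: `s3a://` for the Hadoop S3A connector, `abfss://` for ADLS Gen2, and `gs://` for GCS. The function name, the provider labels, and the example bucket and account names are hypothetical.

```python
def lake_uri(provider: str, container: str, path: str, account: str = "") -> str:
    """Build an object URI in the scheme commonly used by Spark/Hadoop connectors."""
    path = path.lstrip("/")
    if provider == "s3":
        # Hadoop's S3A connector; AWS-native tools such as Athena use s3:// instead.
        return f"s3a://{container}/{path}"
    if provider == "adls":
        if not account:
            raise ValueError("ADLS Gen2 URIs need a storage account name")
        # The container (file system) comes before the @, the account after it.
        return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"
    if provider == "gcs":
        return f"gs://{container}/{path}"
    raise ValueError(f"unknown provider: {provider}")


if __name__ == "__main__":
    print(lake_uri("s3", "example-lake", "raw/events/"))
    print(lake_uri("adls", "raw", "events/", account="examplelake"))
    print(lake_uri("gcs", "example-lake", "raw/events/"))
```

Keeping URI construction in one place like this makes it easier to move a pipeline between stores, or to run the same job against more than one.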

Pricing patterns and cost control

Pricing is complex: object storage costs include storage per GB, request costs, data retrieval (for cool/archival tiers), and data egress. Each provider offers tiers that let you trade cost for access speed.

- AWS S3 – Multiple storage classes (Standard, Intelligent-Tiering, Standard-IA, Glacier) and pricing options. S3 Intelligent-Tiering can help manage unknown access patterns automatically.

- Azure Blob – Hot, Cool, and Archive tiers and Reserved Capacity options to lower storage costs for predictable needs. Azure’s tiering and lifecycle policies work well with Gen2 for analytics cost control; see the Azure Blob Storage pricing page for details.

- Google Cloud Storage – Standard, Nearline, Coldline, Archive tiers and seamless integration with long-term analytics; Google often markets lower costs for cold storage classes and strong network pricing for some patterns. Check the Google Cloud Storage page for the latest class descriptions.
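
Tiering is usually automated with lifecycle rules rather than managed by hand. As an illustration, the sketch below builds a rule in the dictionary shape that S3 lifecycle configurations use (the structure boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument). It only constructs the dictionary and does not call AWS. The helper names, prefix, and day counts are assumptions for the example, and Azure and GCS offer comparable lifecycle management with their own schemas.

```python
import json
from typing import Optional


def tiering_rule(prefix: str, ia_after_days: int, archive_after_days: int,
                 expire_after_days: Optional[int] = None) -> dict:
    """Build one S3-style lifecycle rule that moves objects to colder classes."""
    # S3 expects objects to sit for at least 30 days before moving to STANDARD_IA.
    if ia_after_days < 30:
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    if archive_after_days <= ia_after_days:
        raise ValueError("archive transition must come after the IA transition")

    slug = prefix.strip("/").replace("/", "-") or "all"
    rule = {
        "ID": f"tier-{slug}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": archive_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        if expire_after_days <= archive_after_days:
            raise ValueError("expiration must come after the archive transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


def lifecycle_configuration(*rules: dict) -> dict:
    """Wrap rules in the top-level structure S3 expects."""
    return {"Rules": list(rules)}


if __name__ == "__main__":
    config = lifecycle_configuration(tiering_rule("raw/logs/", 30, 90, 365))
    print(json.dumps(config, indent=2))
```

Scoping rules by prefix, as here, lets raw landing zones age out aggressively while curated tables stay in a hot tier.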

Security, governance, and compliance

Security is table stakes. Each provider supports encryption at rest and in transit, role-based access, and logging. Differences emerge in tooling and integrations for governance:

- IAM and policies: AWS IAM is granular and mature; Azure uses role-based access control (RBAC) integrated with Microsoft Entra ID (formerly Azure Active Directory); Google Cloud IAM also offers fine-grained controls and organization policies.

- Data governance: If you need enterprise governance frameworks, consider the provider whose ecosystem matches your identity and compliance needs (e.g., Azure for heavy Microsoft shops, AWS for broad third-party integrations).

- Auditing and data protection: All three support logging, object immutability, and encryption keys (including customer-managed keys).
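
As one concrete example of these controls, encryption in transit can be enforced at the bucket level. The sketch below builds an S3 bucket policy that denies any request not made over TLS, using the `aws:SecureTransport` condition key. The function name and bucket name are hypothetical, and the other providers expose comparable settings through their own mechanisms.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> dict:
    """Build an S3 bucket policy that rejects requests made without TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object inside it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_insecure_transport_policy("example-lake"), indent=2))
```

Generating policies from code rather than editing JSON by hand makes them easier to review and to apply consistently across every bucket in the lake.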

Operational considerations and migrations

Operational realities often decide the winner more than theoretical benchmarks. Consider the following:

- Data transfer and egress: Moving petabytes between clouds is expensive. Plan initial ingress strategies (direct transfer appliances, edge ingest) and avoid unnecessary cross-cloud egress.

- Tooling and talent: Teams familiar with AWS will likely be more productive on S3; Azure-centric shops benefit from Blob + Gen2; Google-first data science teams often prefer GCS + BigQuery.

- Third-party ecosystem: S3 has the broadest third-party tool support, but Azure and GCS are catching up quickly with vendor integrations and managed services.
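
A quick back-of-the-envelope calculation shows why transfer planning matters at petabyte scale. This sketch estimates how long a network transfer would take. The 80% link utilization default is an assumption, and real throughput depends on object sizes, parallelism, and throttling.

```python
def transfer_days(data_tb: float, link_gbps: float, utilization: float = 0.8) -> float:
    """Estimate days needed to move data_tb (decimal terabytes) over a network link."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    data_bits = data_tb * 1e12 * 8                  # terabytes -> bits
    effective_bps = link_gbps * 1e9 * utilization   # usable bits per second
    seconds = data_bits / effective_bps
    return seconds / 86_400                         # seconds -> days


if __name__ == "__main__":
    for size_tb in (100, 1_000, 5_000):
        print(f"{size_tb:>5} TB over 10 Gbps: {transfer_days(size_tb, 10):.1f} days")
```

With these assumptions, one petabyte over a dedicated 10 Gbps link takes more than eleven days, which is why physical transfer appliances are worth evaluating for the initial load.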

Multi-cloud and hybrid scenarios

If you need multi-cloud redundancy or a hybrid footprint, evaluate cross-cloud toolchains and metadata layers such as lakeFS, Delta Lake, or Apache Iceberg to provide consistent semantics across stores. Each provider offers interoperability guidance; Google, for example, publishes a comparison that maps AWS and Azure services to their Google Cloud equivalents, which is useful for migration planning.

How to choose: decision checklist

- Is your analytics stack tied to one cloud (BigQuery, Redshift, Synapse)? Prefer the native provider.

- Do you need a hierarchical namespace for many small files? Consider Azure Data Lake Gen2.

- Are third-party tools and wide marketplace support critical? Amazon S3 remains the broadest choice.

- Do you expect heavy ML usage with Vertex AI or managed notebooks? Google Cloud Storage pairs well with those.

- How predictable are your storage costs? Explore reserved capacity and lifecycle policies on Azure and tiering options across providers to lower bills.
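
If it helps to see the checklist as logic, here is a deliberately simple sketch that turns the first four questions into a shortlist. The field names and labels are hypothetical, and the cost question is left out because it applies to every provider rather than pointing to one.

```python
from dataclasses import dataclass
from typing import List

# Mapping taken from the checklist above; the keys are hypothetical labels.
NATIVE_STORE = {
    "redshift": "Amazon S3",
    "synapse": "Azure Data Lake Storage Gen2",
    "bigquery": "Google Cloud Storage",
}


@dataclass
class Requirements:
    native_stack: str = ""  # "redshift", "synapse", "bigquery", or "" if none
    needs_hierarchical_namespace: bool = False
    needs_broad_third_party_support: bool = False
    heavy_vertex_ai_usage: bool = False


def shortlist(req: Requirements) -> List[str]:
    """Return candidate stores in the order the checklist suggests them."""
    candidates: List[str] = []

    def add(store: str) -> None:
        if store not in candidates:
            candidates.append(store)

    stack = req.native_stack.lower()
    if stack in NATIVE_STORE:
        add(NATIVE_STORE[stack])
    if req.needs_hierarchical_namespace:
        add("Azure Data Lake Storage Gen2")
    if req.needs_broad_third_party_support:
        add("Amazon S3")
    if req.heavy_vertex_ai_usage:
        add("Google Cloud Storage")
    # No strong signal: every provider stays on the table.
    return candidates or list(NATIVE_STORE.values())


if __name__ == "__main__":
    print(shortlist(Requirements(native_stack="bigquery", heavy_vertex_ai_usage=True)))
```

A shortlist with more than one entry is a signal to prototype on each candidate and measure, not a tie to break on paper.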

FAQ

Which is the best cloud storage?

There’s no single “best”—it depends on needs. Amazon S3 is often the safest choice for broad compatibility and mature features. Azure Blob (especially with Data Lake Gen2) is great for enterprise Microsoft shops and Hadoop-style workloads. Google Cloud Storage is ideal when you want tight integration with BigQuery and Vertex AI. Match the storage to your analytics stack, access patterns, and team skills.

What is the cost of 1TB cloud storage?

Costs vary by provider, tier, and region. As a rough guide, standard (hot) object storage has typically listed at around $20 to $25 per TB per month in common US regions, while colder tiers can fall to a few dollars or less per TB per month but add retrieval fees and minimum storage durations. Always check current pricing pages (for example, Azure’s Blob pricing) and model request and egress costs for your workload. See our Cloud Cost Optimization services for ways to keep bills under control.
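
To show what modeling request and egress costs can look like, here is a minimal sketch of a monthly cost function. Every price in the example is a made-up placeholder, not a quote from any provider; substitute values from the current pricing pages, and normalize units first, since some providers price requests per 10,000 rather than per 1,000.

```python
from dataclasses import dataclass


@dataclass
class PriceSheet:
    """Unit prices in USD. All example values below are hypothetical."""
    storage_per_gb_month: float
    per_1000_writes: float
    per_1000_reads: float
    retrieval_per_gb: float
    egress_per_gb: float


def monthly_cost(prices: PriceSheet, stored_gb: float, writes: int, reads: int,
                 retrieved_gb: float, egress_gb: float) -> float:
    """Sum the four main cost components of an object storage bill."""
    storage = stored_gb * prices.storage_per_gb_month
    requests = (writes / 1000) * prices.per_1000_writes \
        + (reads / 1000) * prices.per_1000_reads
    retrieval = retrieved_gb * prices.retrieval_per_gb
    egress = egress_gb * prices.egress_per_gb
    return storage + requests + retrieval + egress


if __name__ == "__main__":
    # Placeholder prices for a hot tier and a cooler tier (not real quotes).
    hot = PriceSheet(0.02, 0.005, 0.0004, 0.0, 0.09)
    cool = PriceSheet(0.01, 0.01, 0.001, 0.01, 0.09)
    workload = dict(stored_gb=1000, writes=100_000, reads=1_000_000,
                    retrieved_gb=500, egress_gb=100)
    print(f"hot tier:  ${monthly_cost(hot, **workload):.2f}")
    print(f"cool tier: ${monthly_cost(cool, **workload):.2f}")
```

Running the same workload through several price sheets shows quickly whether a cheaper storage rate survives once retrieval and request charges are included.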

Is cloud storage worth paying for?

Yes for most businesses. Cloud storage removes upfront hardware costs, gives near-infinite scalability, provides reliability, and offloads maintenance. For many teams the operational benefits—elasticity, integrated services, and managed durability—outweigh the monthly bill, especially when optimized with lifecycle rules and right-sized tiers. Our Cloud Infrastructure Services can help you implement best practices.

Which cloud storage is free?

No major provider offers large-scale object storage for free. Many provide limited free tiers or trial credits for new users, and there are free tiers for specific services (e.g., a few GBs of storage). For real-world data lakes, you should budget for paid storage and factor in lifecycle and access costs.

Is Google Drive a cloud storage?

Yes—Google Drive is a consumer-focused cloud storage product built for file syncing and collaboration, not for data lakes. It’s great for documents and small team files but lacks the scale, APIs, durability guarantees, and analytics integrations required for large-scale data lake architectures. For analytics workloads, choose an object store like Google Cloud Storage. Learn more in our Data Engineering Services.

Final thoughts

Picking between Amazon S3, Azure Blob Storage, and Google Cloud Storage is less about declaring a winner and more about matching platform strengths to your business, tooling, and cost profile. Prototype, measure, and plan lifecycle policies up front. If you need help making that architecture decision or executing a migration, Branch Boston’s teams can help design a secure, cost-effective data lake strategy that aligns with your analytics and AI goals.