Change Data Capture (CDC) is the quiet hero behind real-time dashboards, synced microservices, and analytics that don’t feel ancient the moment they’re displayed. Whether you’re building a customer 360, powering event-driven apps, or keeping a data warehouse fresh, CDC helps systems propagate only what changed — fast and efficiently. In this guide you’ll get a clear view of what CDC is, how it works, implementation patterns, common pitfalls, and practical tips to adopt it without turning your DBAs into caffeine-fueled detectives.

Why CDC matters for modern businesses

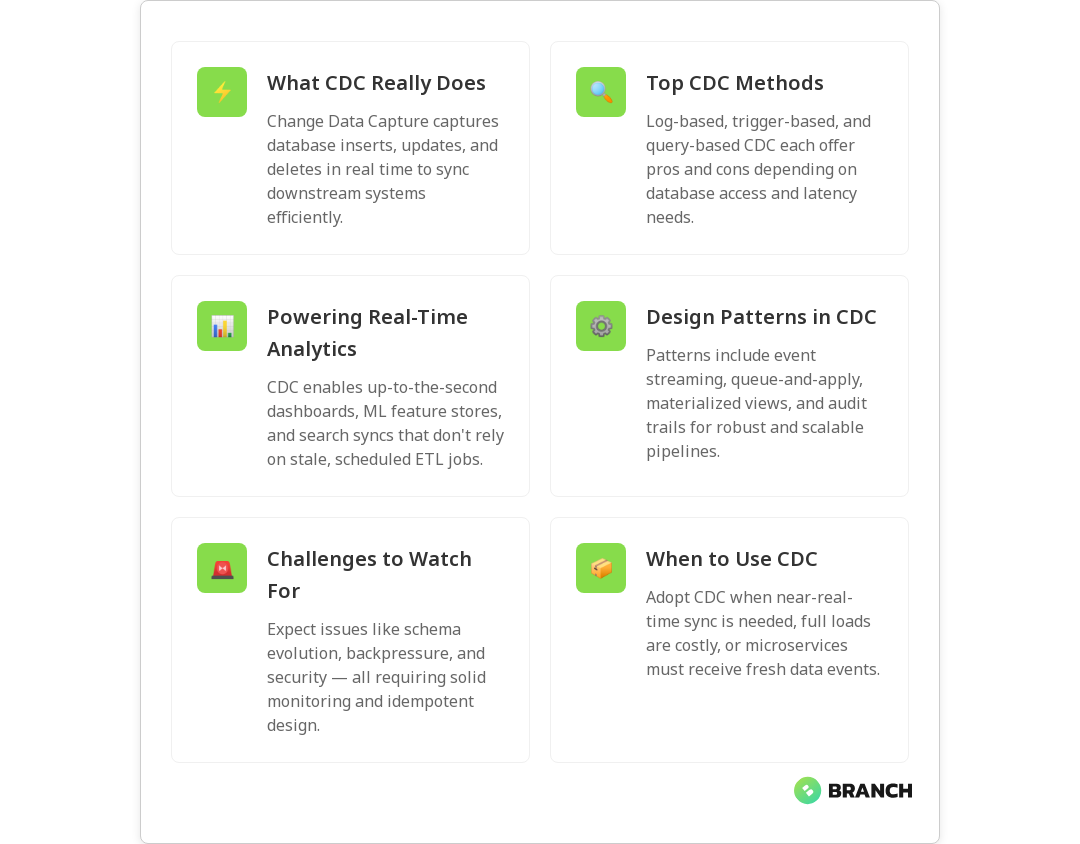

Batch jobs have their place, but business moves fast. Waiting minutes or hours for ETL windows to finish means stale insights and slow product experiences. CDC captures inserts, updates, and deletes as they happen so downstream systems — analytics, search indexes, caches, and ML features — receive changes continuously. That brings lower latency, smaller data movement, and often a lower operational cost than repeatedly full-loading big tables.

When implemented correctly, CDC supports event-driven architectures and real-time analytics while minimizing impact on operational databases. For a technical primer on the common CDC approaches and their tradeoffs, see this practical overview at GeeksforGeeks.

Core CDC approaches (and when to use them)

CDC isn’t one-size-fits-all. Choose the approach that matches your database, latency needs, and ops tolerance.

- Log-based CDC: Reads the database's transaction log (for example, the PostgreSQL WAL, MySQL binlog, or Oracle redo log). Low impact on source systems and suited to high-volume production systems. This is the most commonly recommended approach when you need minimal latency and load. See an explanation of real-time change tracking at Informatica.

- Trigger-based CDC: Database triggers write each change to a shadow table. Works when you can't access logs, but adds write overhead to every captured transaction and complicates schema changes and migrations.

- Query- or timestamp-based CDC: Periodically queries for rows updated after a timestamp. Simple, but it cannot see hard deletes or intermediate updates between polls, and frequent polling adds query load while still leaving higher latency than log-based capture.

- Hybrid approaches: Combine log-based capture with business-level change enrichment in downstream processors for auditability or complex event creation.
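To make the query-based option from the list above concrete, here is a minimal sketch of timestamp polling using Python's built-in sqlite3 module. The `orders` table, its `updated_at` column (an integer clock maintained by the application), and the `poll_changes` helper are all assumptions made up for illustration, not part of any particular CDC tool.

```python
import sqlite3

def poll_changes(conn, last_seen):
    """Return rows modified after `last_seen` plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at, id",
        (last_seen,),
    ).fetchall()
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark

# Hypothetical source table with an application-maintained timestamp.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 105)]
)

first_batch, mark = poll_changes(conn, last_seen=0)

# Between polls: order 1 changes twice and order 2 is hard-deleted.
conn.execute("UPDATE orders SET status = 'paid', updated_at = 110 WHERE id = 1")
conn.execute("UPDATE orders SET status = 'shipped', updated_at = 120 WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 2")

second_batch, mark = poll_changes(conn, mark)
# second_batch holds only (1, 'shipped', 120): the intermediate 'paid'
# state and the delete of order 2 never show up in a poll.
```

The second poll illustrates two of the edge cases this approach can miss. A strict `>` comparison can also skip rows that commit later with a timestamp equal to the current mark, which is one reason log-based capture is usually preferred when it is available.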

How CDC fits into data architecture

CDC typically sits between the operational systems and your downstream consumers. The flow looks like:

- Capture: CDC component reads change events (log/trigger/query).

- Transform: Optional enrichment, masking, or normalization.

- Transport: Publish events to a messaging layer (Kafka, Kinesis) or push directly to targets.

- Apply: Sink connectors or consumers apply changes to data warehouses, search, caches, or analytic systems.
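The four stages above can be sketched end to end in a few lines of Python. This is a toy, single-process illustration: the in-memory `Queue` stands in for a messaging layer such as Kafka, the dictionary stands in for a warehouse table, and the event shape (`op`, `key`, `row`) and all function names are assumptions chosen for the example.

```python
import json
from queue import Queue

def capture(raw_changes):
    """Capture: yield change events (here, from a hard-coded list)."""
    for change in raw_changes:
        yield dict(change)

def transform(event):
    """Transform: mask the email column before the event leaves the pipeline."""
    row = event.get("row")
    if row and "email" in row:
        row = {**row, "email": "***"}
    return {**event, "row": row}

def transport(events, bus):
    """Transport: serialize each event and publish it to the bus."""
    for event in events:
        bus.put(json.dumps(event))

def apply_changes(bus, target):
    """Apply: replay events, in order, against the target store."""
    while not bus.empty():
        event = json.loads(bus.get())
        if event["op"] == "delete":
            target.pop(event["key"], None)
        else:
            target[event["key"]] = event["row"]

raw = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "email": "ada@example.com"}},
    {"op": "update", "key": 1, "row": {"name": "Ada L.", "email": "ada@example.com"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "email": "bob@example.com"}},
    {"op": "delete", "key": 2, "row": None},
]

bus = Queue()
warehouse = {}
transport((transform(e) for e in capture(raw)), bus)
apply_changes(bus, warehouse)
# warehouse is now {1: {"name": "Ada L.", "email": "***"}}
```

In a real deployment each stage typically runs as a separate process or managed connector, but the responsibilities divide the same way.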

This pattern supports event-driven apps and feeds ML feature stores with fresh data. For practical considerations when evaluating CDC tools and streaming architectures, check this guide from Data Engineering Weekly.

Common CDC design patterns

When building CDC pipelines you’ll repeatedly use a few reliable patterns:

- Event streaming — Emit change events into Kafka/Kinesis and handle ordering, compaction, and schema evolution at the consumer layer.

- Queue-and-apply — For smaller scale, queue changes and have idempotent apply logic on sink systems.

- Materialized views — Use CDC to keep derived tables or denormalized structures updated for fast reads.

- Audit trail — Persist change history for compliance, rollback, or replaying changes into test environments.
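The queue-and-apply pattern depends on idempotent apply logic, so a small sketch may help. It assumes every event carries a `version` that increases with each change to a key (for instance, a log position); that field, the event shape, and the `apply_event` name are hypothetical.

```python
def apply_event(state, versions, event):
    """Apply one change event unless the same or a newer version was already applied.

    `versions` remembers the highest version seen per key, including for
    deleted keys, so a stale update cannot resurrect a deleted row.
    Returns True if the event changed anything, False if it was skipped.
    """
    key, version = event["key"], event["version"]
    if versions.get(key, -1) >= version:
        return False  # duplicate or out-of-date delivery
    versions[key] = version
    if event["op"] == "delete":
        state.pop(key, None)
    else:
        state[key] = event["row"]
    return True

events = [
    {"key": "u1", "version": 1, "op": "upsert", "row": {"plan": "free"}},
    {"key": "u1", "version": 2, "op": "upsert", "row": {"plan": "pro"}},
    {"key": "u1", "version": 2, "op": "upsert", "row": {"plan": "pro"}},   # redelivery
    {"key": "u1", "version": 1, "op": "upsert", "row": {"plan": "free"}},  # stale
]

state, versions = {}, {}
applied = [apply_event(state, versions, e) for e in events]
# applied == [True, True, False, False]; state == {"u1": {"plan": "pro"}}
```

Because replaying the same event twice leaves the sink unchanged, the pipeline can tolerate at-least-once delivery without corrupting the target.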

Tooling and evaluation

There’s a growing ecosystem of CDC tools and platforms: open-source connectors (Debezium), cloud-managed CDC services, and integrated ETL/ELT products. Evaluating tools means balancing these factors: source compatibility, latency, throughput, ease of schema evolution, delivery guarantees, monitoring, and operational burden.

When assessing options, consider whether the tool supports log-based capture for your DB, how it handles schema changes, and whether it integrates with your message bus and sinks. For a point-by-point evaluation guide, read this overview from Data Engineering Weekly.

Challenges and pitfalls to watch for

CDC simplifies many problems, but it introduces others:

- Schema evolution: Column additions, type changes, or table renames can break connectors unless you plan for versioning and compatibility.

- Backpressure and ordering: High write spikes can overwhelm pipelines; ordering guarantees vary by tool and transport layer (Kafka, for example, preserves order only within a partition).

- Data correctness: Capturing the change is only half the battle — reconciling eventual consistency and handling deletes requires careful design.

- Security and privacy: Sensitive data may flow through change streams; apply masking or tokenization in the transformation step.

- Operational complexity: CDC adds more moving parts — monitoring, offset management, and disaster recovery planning are essential.
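For the schema evolution pitfall, one lightweight safeguard is to compare a table's old and new column definitions before a change ships. The sketch below is deliberately conservative and rests on two assumptions: schemas are represented as plain `{column: type}` dictionaries, and consumers ignore fields they do not recognize (which is what makes an added column safe). The function name is made up for this example.

```python
def classify_schema_change(old, new):
    """Compare two {column: type} mappings and split changes into breaking and safe."""
    breaking, safe = [], []
    for column, old_type in old.items():
        if column not in new:
            breaking.append(f"removed column {column}")
        elif new[column] != old_type:
            breaking.append(f"type change on {column}: {old_type} -> {new[column]}")
    for column in new:
        if column not in old:
            safe.append(f"added column {column}")
    return breaking, safe

old = {"id": "int", "email": "text"}
new = {"id": "bigint", "email": "text", "created_at": "timestamp"}

breaking, safe = classify_schema_change(old, new)
# breaking == ["type change on id: int -> bigint"]
# safe == ["added column created_at"]
```

A rename shows up here as one removed column plus one added column, so it is flagged as breaking, which matches how most connectors would experience it. A schema registry with compatibility rules does a more thorough version of the same check.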

Real-world use cases

CDC powers a surprising variety of business needs:

- Real-time analytics: Fresh dashboards and alerts for product and ops teams.

- Search/index sync: Keep search services and recommendation engines fresh as product or user data changes.

- Microservices integration: Broadcast events to other services without tight coupling.

- Data lake/warehouse updates: Incremental updates to analytical stores without full reloads, reducing cost and time.

- Auditing and compliance: Maintain immutable trails of changes for regulatory requirements.

For practical examples of CDC used in data lake and warehouse synchronization, see this explanation from Striim.

CDC versus traditional ETL/ELT

CDC and ETL/ELT solve overlapping but distinct problems. Traditional ETL moves bulk data on schedules; CDC moves incremental changes continuously. ETL is simpler for full refreshes or initial migrations; CDC is better for low-latency needs and reducing load on source databases. You’ll often see hybrid architectures: CDC streams changes to a landing zone where ELT jobs perform heavier transformations.

The cloud and modern data tooling make it easy to combine both: use CDC for incremental freshness and ELT for periodic deep transformations.
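As a rough illustration of that hybrid, here is a sketch of the kind of step an ELT job might run over a landing zone of raw change events: collapsing the append-only log into the current row per key. The event fields (`seq`, `key`, `op`, `row`) and the `latest_state` helper are assumptions for the example.

```python
def latest_state(change_log):
    """Collapse an append-only change log into the current row per key."""
    latest = {}
    # Events may land out of order, so replay them by sequence number.
    for event in sorted(change_log, key=lambda e: e["seq"]):
        if event["op"] == "delete":
            latest.pop(event["key"], None)
        else:
            latest[event["key"]] = event["row"]
    return latest

landing_zone = [
    {"seq": 3, "key": "o2", "op": "delete", "row": None},
    {"seq": 1, "key": "o1", "op": "upsert", "row": {"total": 40}},
    {"seq": 2, "key": "o2", "op": "upsert", "row": {"total": 15}},
    {"seq": 4, "key": "o1", "op": "upsert", "row": {"total": 55}},
]

snapshot = latest_state(landing_zone)
# snapshot == {"o1": {"total": 55}}
```

In a warehouse the same idea is usually expressed in SQL as a window function or merge over the landed events, with CDC supplying the raw changes and the scheduled job doing the heavier reshaping.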

Monitoring, observability, and testing

Operational CDC needs robust observability:

- Track offsets and lag so you know how far behind each sink is.

- Monitor throughput, error rates, and duplicate deliveries.

- Build automated tests that simulate schema changes and verify downstream behavior.

- Compute a reconciliation metric, such as row counts or checksums compared between source and sink, and alert when divergence crosses a threshold.
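Two of the checks above, lag tracking and reconciliation, are simple enough to sketch. The example assumes offsets are plain integers and that both source and sink rows can be read as dictionaries; `consumer_lag` and `table_fingerprint` are hypothetical helper names, and the fingerprint is a cheap drift detector rather than a cryptographic guarantee.

```python
import hashlib

def consumer_lag(source_offset, sink_offset):
    """How many events the sink still has to apply (never negative)."""
    return max(source_offset - sink_offset, 0)

def table_fingerprint(rows):
    """Order-independent fingerprint: row count plus XOR of per-row hashes."""
    digest = 0
    count = 0
    for row in rows:
        canonical = repr(sorted(row.items())).encode("utf-8")
        digest ^= int.from_bytes(hashlib.sha256(canonical).digest()[:8], "big")
        count += 1
    return count, digest

source_rows = [{"id": 1, "plan": "pro"}, {"id": 2, "plan": "free"}]
sink_rows = [{"id": 2, "plan": "free"}, {"id": 1, "plan": "pro"}]  # same data, other order

in_sync = table_fingerprint(source_rows) == table_fingerprint(sink_rows)  # True
lag = consumer_lag(source_offset=1050, sink_offset=1000)                  # 50
```

Emitting `lag` and the fingerprint comparison as metrics gives you something concrete to alert on. Note that the fingerprint treats `1` and `"1"` as different values, so type drift between systems needs normalizing first.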

Security, compliance, and governance

Because CDC streams operational data, it must meet the same compliance and security controls as the source systems. Consider encryption of data in flight, role-based access to change logs, and transformation-stage masking for sensitive fields. Catalog and schema registry integration will help teams understand what fields are flowing and where.
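Transformation-stage masking can be as simple as replacing sensitive values with keyed hashes before events are published. The sketch below uses Python's standard `hmac` and `hashlib` modules; the field names in `SENSITIVE_FIELDS`, the event shape, and the demo secret are assumptions, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = {"email", "ssn"}  # hypothetical list of columns to tokenize

def mask_event(event, secret, sensitive=SENSITIVE_FIELDS):
    """Return a copy of the event with sensitive values replaced by HMAC tokens.

    The same input always maps to the same token, so downstream joins and
    deduplication still work, but the original value is not exposed.
    """
    masked = dict(event)
    for field in sensitive:
        value = masked.get(field)
        if value is not None:
            token = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = token.hexdigest()
    return masked

secret = b"demo-only-secret"  # assumption: load from a secrets manager in practice
first = mask_event({"id": 1, "email": "ada@example.com"}, secret)
second = mask_event({"id": 2, "email": "ada@example.com"}, secret)
# first["email"] == second["email"], and neither contains the raw address
```

Keyed hashing is one option among several; format-preserving tokenization or outright field removal may be a better fit depending on what downstream consumers need.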

Products and documentation from established vendors outline common best practices; for an industry-level view of CDC’s role in incremental data movement and low-impact synchronization, see Matillion’s explanation.

Choosing the right time to adopt CDC

Not every organization needs immediate CDC. Consider starting CDC when:

- You need sub-minute freshness for key use cases.

- Full-table refreshes are taking too long or costing too much.

- Downstream services rely on near-real-time events or materialized views.

Start with a limited scope: one database or set of tables, with clear success metrics. Iterate and expand once you’ve proven stability and business value.

FAQ

What is change data capture?

Change Data Capture (CDC) is a set of techniques to detect and record changes (inserts, updates, deletes) in a source database, and then propagate those changes to downstream systems in an incremental, often real-time fashion. It reduces the need for full reloads and enables low-latency data flows for analytics and event-driven systems.

What is the CDC process?

The CDC process typically involves capturing changes from the source (via logs, triggers, or queries), optionally transforming or masking the events, transporting them through a messaging layer or directly to sinks, and applying those changes to downstream targets. Monitoring and reconciliation ensure accuracy.

How does change data capture work?

CDC works by observing the source for changes. Log-based CDC reads the transaction log and converts entries to events. Trigger-based CDC uses database triggers to write changes to a side table. Query-based CDC polls for rows modified since a timestamp. Captured changes are then serialized and delivered to consumers.
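For a feel of the trigger-based variant described above, here is a small runnable sketch using SQLite through Python's built-in sqlite3 module. The `customers` table, the `customers_changes` side table, and the trigger names are invented for the example; production databases have their own trigger syntax and performance characteristics.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Side table: one row per captured change, in the order it happened.
CREATE TABLE customers_changes (
    seq   INTEGER PRIMARY KEY AUTOINCREMENT,
    op    TEXT NOT NULL,      -- 'I', 'U', or 'D'
    id    INTEGER NOT NULL,
    email TEXT
);

CREATE TRIGGER customers_ai AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('I', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_au AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('U', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_ad AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('D', OLD.id, NULL);
END;
""")

# Ordinary application writes; the triggers record each one.
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

changes = conn.execute(
    "SELECT op, id, email FROM customers_changes ORDER BY seq"
).fetchall()
# changes == [('I', 1, 'a@example.com'), ('U', 1, 'b@example.com'), ('D', 1, None)]
```

Unlike timestamp polling, the side table records every intermediate update and the delete, at the cost of an extra write inside each captured transaction.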

What are the use cases of CDC?

CDC powers use cases like real-time analytics dashboards, search and index synchronization, keeping caches fresh, feeding ML feature stores, enabling event-driven microservices, and maintaining audit trails for compliance. It’s ideal where near-real-time freshness and minimal source impact are required.

What is the difference between ETL and CDC?

ETL (Extract, Transform, Load) performs bulk or scheduled data movement and transformation, while CDC streams incremental changes continuously. ETL is suited for initial loads and heavy transformations, whereas CDC enables low-latency sync and reduces load on production systems. Many architectures use both together.

Final thoughts

CDC is a practical and powerful pattern for modern data architectures. It reduces latency, lowers data movement costs, and enables event-driven use cases — when designed with attention to schema evolution, monitoring, and security. Start small, measure the impact, and expand. And if the first CDC pipeline you build makes your product feel a little bit faster and your analytics a little bit smarter — congratulations, you’ve just given your users a tiny bit of magic.

For practical alternatives and vendor approaches to CDC, you might also find this overview helpful: the Striim CDC explainer, which walks through how changes flow from operational systems into analytics platforms and data lakes.