Schema changes happen. Whether a product team adds a new field, a partner renames a column, or an upstream system starts sending slightly different types, your pipelines will notice, sometimes loudly. This article explains practical strategies to evolve schemas gracefully, so your data flows keep moving, your analytics stay accurate, and your engineers lose fewer gray hairs. You’ll learn why schema evolution matters, concrete tactics for handling changes in real-time and batch pipelines, and how to operationalize detection, testing, and rollback with minimal disruption.

Why schema evolution matters (and why it’s trickier than it sounds)

Data schemas are contracts. Consumers assume fields exist with predictable types and semantics. When that contract changes without coordination, downstream jobs fail, dashboards show wrong numbers, and ML models quietly degrade. In modern architectures — where microservices, third-party feeds, and event streams mix — schema drift is inevitable.

Beyond obvious breakage, schema changes can introduce subtle risks: silent data loss when fields are removed, corrupted joins when types change, and analytic blind spots when new fields are ignored. Handling schema evolution well isn’t just about avoiding errors; it’s about keeping trust in your data platform.

Core strategies for schema evolution

There’s no single silver bullet, but several complementary strategies will dramatically reduce surprises.

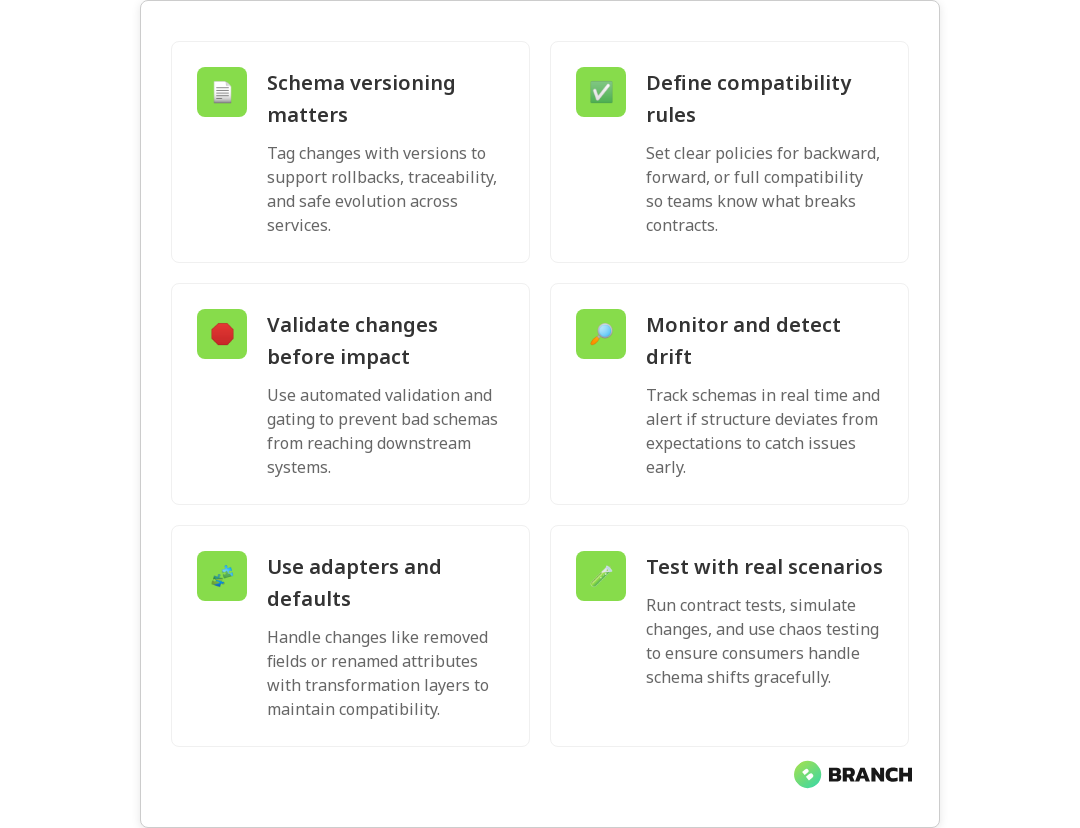

- Schema versioning — Tag schema changes with versions and allow services to negotiate or opt into a version. Versioned schemas give you a rollback path and a clear audit trail.

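- Compatibility rules — Define compatibility policies (backward, forward, or full) so producers and consumers know what kinds of changes are allowed without breaking contracts. Backward compatibility means a reader on the new schema can still read data written with the old one; forward compatibility means a reader on the old schema can read data written with the new one; full means both. For example, adding an optional field with a default is typically safe in both directions, while removing a required field that existing consumers still read is not.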

- Validation and gating — Validate schema changes with automated checks before they propagate. Gate deployments of producer changes until consumer teams are ready.

- Schema monitoring and drift detection — Continuously monitor incoming data for deviations from expected schemas and surface alerts early.

- Graceful defaults and adapters — When fields change or go missing, use defaults, adapters, or transformation layers to keep older consumers functioning.
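To make the compatibility-rules idea concrete, here is a minimal sketch of an automated check that compares two schema versions. The `Field` type, the dict-based schema representation, and the strict policy (no removals, no type changes, no new required fields) are all assumptions made for illustration; real registries define their own rules. Removals and type changes are flagged because they can break readers still on the old schema, and new required fields are flagged because a reader on the new schema would not find them in old records.

```python
from typing import Dict, List, NamedTuple


class Field(NamedTuple):
    type: str              # e.g. "int", "string" (hypothetical type names)
    required: bool = True


Schema = Dict[str, Field]  # field name -> definition


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List changes that violate the assumed strict policy described above."""
    problems = []
    for name, old_field in old.items():
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name].type != old_field.type:
            problems.append(
                f"type change on {name}: {old_field.type} -> {new[name].type}"
            )
    for name, new_field in new.items():
        if name not in old and new_field.required:
            problems.append(f"new required field: {name}")
    return problems


v1 = {"id": Field("int"), "email": Field("string")}
v2 = {**v1, "nickname": Field("string", required=False)}  # additive, optional
v3 = {"id": Field("string"), "nickname": Field("string", required=False)}

print(breaking_changes(v1, v2))  # []
print(breaking_changes(v2, v3))
# ['type change on id: int -> string', 'removed field: email']
```

A check like this can run as a gate in CI: an empty list lets the change through, while anything else blocks the merge until consumers are ready.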

For practical, modern guidance on strategies like schema versioning and real-time monitoring, the DASCA guide on managing schema evolution provides a helpful overview and rules of thumb.

Read more: AI Solutions Backbone – useful background on why robust data engineering and schema practices are essential for AI and analytics.

Pattern-by-pattern: What to do when schemas change

1. Additive changes (safe and easy)

Adding new optional fields is the least disruptive change. Consumers that ignore unknown fields continue to work. To take advantage of new fields, implement gradual rollout and update consumers to read the new attributes when ready.
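A consumer that ignores unknown fields is sometimes called a tolerant reader. The sketch below shows one way to write one with a dataclass; `UserEvent`, its fields, and `parse_event` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict, Optional


@dataclass
class UserEvent:
    user_id: int
    action: str
    # Newly added optional field; the default keeps old payloads valid.
    referrer: Optional[str] = None


def parse_event(payload: Dict[str, Any]) -> UserEvent:
    """Build a UserEvent, dropping any keys this consumer does not know."""
    known = {f.name for f in fields(UserEvent)}
    return UserEvent(**{k: v for k, v in payload.items() if k in known})


old_payload = {"user_id": 1, "action": "click"}
new_payload = {"user_id": 2, "action": "view", "referrer": "ad", "campaign": "x"}

print(parse_event(old_payload))  # referrer falls back to None
print(parse_event(new_payload))  # unknown "campaign" key is ignored
```

Because the parser filters on the fields it knows, a producer can ship `campaign` before this consumer is updated, and the consumer can adopt it later by adding one more optional field.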

2. Field type changes (risky)

Changing a field’s type (e.g., integer -> string) can break parsing logic and joins. Strategies:

- Introduce a new field with the new type and deprecate the old one.

- Use serializers that support union types or nullable variants.

- Apply transformation layers that cast or normalize types at the ingestion boundary.
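The last option, normalizing types at the ingestion boundary, might look like the sketch below. It assumes a hypothetical `order_id` field that used to arrive as an integer and now sometimes arrives as a string; the canonical type chosen here (string) is an illustrative decision, not a recommendation for every field.

```python
from typing import Any, Dict, Optional


def normalize_order_id(value: Any) -> Optional[str]:
    """Coerce an order id that may arrive as int or str into a canonical str."""
    if value is None:
        return None
    if isinstance(value, bool):   # bool is a subclass of int; reject it explicitly
        raise TypeError("order_id must not be a boolean")
    if isinstance(value, int):
        return str(value)
    if isinstance(value, str):
        return value.strip()
    raise TypeError(f"unsupported order_id type: {type(value).__name__}")


def normalize_record(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with order_id in its canonical type."""
    out = dict(record)
    out["order_id"] = normalize_order_id(record.get("order_id"))
    return out


print(normalize_record({"order_id": 42, "total": 9.5}))
# {'order_id': '42', 'total': 9.5}
```

Raising on unexpected types, rather than guessing, keeps a surprising upstream change visible instead of letting it corrupt joins downstream.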

3. Field renaming

Renaming is destructive unless handled carefully. Best practice is to write both the old and new field names for a transition period and mark one as deprecated. This dual-write approach gives consumers time to migrate.
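A rough sketch of the dual-write approach follows. The field names `cust_id` and `customer_id` are hypothetical, and the consumer-side fallback is one possible way to handle records produced before the transition began.

```python
import warnings
from typing import Any, Dict

OLD_NAME = "cust_id"       # hypothetical deprecated field name
NEW_NAME = "customer_id"   # hypothetical replacement


def write_record(customer_id: int) -> Dict[str, Any]:
    """Producer side: emit both names during the transition period."""
    return {NEW_NAME: customer_id, OLD_NAME: customer_id}


def read_customer_id(record: Dict[str, Any]) -> int:
    """Consumer side: prefer the new name, fall back to the old one."""
    if NEW_NAME in record:
        return record[NEW_NAME]
    if OLD_NAME in record:
        warnings.warn(
            f"{OLD_NAME} is deprecated; use {NEW_NAME}", DeprecationWarning
        )
        return record[OLD_NAME]
    raise KeyError(NEW_NAME)


print(write_record(7))  # {'customer_id': 7, 'cust_id': 7}
```

Once no consumer triggers the deprecation warning any more, the producer can stop writing the old name.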

4. Field removal and deprecation

Never yank fields. Deprecate first, announce timelines, and remove only after consumers confirm migration. For external contracts, publish a deprecation policy and versioned changelog.

Real-time pipelines: extra considerations

Real-time systems amplify schema issues because there’s less room for human intervention. Event streams and CDC flows must handle evolving schemas gracefully.

- Additive-first approach: Favor changes that are additive and non-breaking. The Estuary blog on real-time schema evolution highlights how additive changes and explicit deprecation are essential for stream safety.

- Schema registry: Use a registry (with compatibility checks) to enforce rules and provide centralized access to schemas.

- On-the-fly adapters: Implement transformation services close to the source. These can coerce types, map names, and enrich records so downstream consumers see a stable interface.
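To illustrate how a registry can enforce an additive-first policy, here is a toy in-memory stand-in. It is not the API of any real schema registry; `InMemoryRegistry`, `IncompatibleSchema`, and the dict-based schema format are assumptions made for this sketch.

```python
from typing import Dict, List, Optional

# Assumed schema format: field name -> {"type": str, "default": <value>}.
# A field without a "default" key is treated as required.
Schema = Dict[str, dict]


class IncompatibleSchema(Exception):
    pass


class InMemoryRegistry:
    """Toy stand-in for a schema registry with an additive-only policy."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[Schema]] = {}

    def latest(self, subject: str) -> Optional[Schema]:
        versions = self._versions.get(subject)
        return versions[-1] if versions else None

    def register(self, subject: str, schema: Schema) -> int:
        """Store a new version and return its 1-based version number."""
        current = self.latest(subject)
        if current is not None:
            self._check_additive(current, schema)
        self._versions.setdefault(subject, []).append(schema)
        return len(self._versions[subject])

    @staticmethod
    def _check_additive(old: Schema, new: Schema) -> None:
        # Existing fields must survive with the same type.
        for name, spec in old.items():
            if name not in new or new[name]["type"] != spec["type"]:
                raise IncompatibleSchema(f"field {name!r} removed or retyped")
        # New fields must carry a default so old records stay readable.
        for name in new.keys() - old.keys():
            if "default" not in new[name]:
                raise IncompatibleSchema(f"new field {name!r} needs a default")


registry = InMemoryRegistry()
registry.register("orders", {"id": {"type": "string"}})
registry.register(
    "orders",
    {"id": {"type": "string"}, "coupon": {"type": "string", "default": None}},
)
try:
    registry.register("orders", {"coupon": {"type": "string", "default": None}})
except IncompatibleSchema as exc:
    print(exc)  # field 'id' removed or retyped
```

Rejecting the change at registration time means a breaking schema never reaches the stream, which matters when there is little room for human intervention.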

Operationalizing schema evolution: tests, monitoring, and rollbacks

Operational readiness wins the day. Implement these practical steps:

- Pre-deployment checks: Run schema compatibility tests in CI that simulate consumer behavior. Validate type changes, optionality, and required fields.

- Automated contract tests: Producers and consumers should share contract tests that fail fast when compatibility is violated.

- Deploy slowly: Canary the producer change to a subset of topics or partitions and monitor downstream failure rates.

- Monitoring and alerts: Track schema drift metrics and parser errors. The Matia post on resilient pipelines emphasizes schema drift detection and automated error handling as core practices.

- Rollback plans: Every schema change must have a tested rollback path: version switch, adapter toggles, or producer reversion.
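As a rough sketch of what tracking schema drift metrics could mean in practice, the function below counts deviations from an expected schema across a batch of records. The `EXPECTED` schema and the metric key format are hypothetical; a real deployment would feed these counts into whatever alerting system is already in place.

```python
from collections import Counter
from typing import Any, Dict, Iterable

EXPECTED = {"user_id": int, "action": str}  # hypothetical expected schema


def drift_report(records: Iterable[Dict[str, Any]]) -> Counter:
    """Count missing fields, unexpected fields, and type mismatches."""
    report: Counter = Counter()
    for record in records:
        for name, expected_type in EXPECTED.items():
            if name not in record:
                report[f"missing:{name}"] += 1
            elif not isinstance(record[name], expected_type):
                report[f"type:{name}"] += 1
        for name in record.keys() - EXPECTED.keys():
            report[f"unexpected:{name}"] += 1
    return report


batch = [
    {"user_id": 1, "action": "click"},
    {"user_id": "2", "action": "view"},             # type drift
    {"user_id": 3, "action": "view", "geo": "DE"},  # new, unexpected field
    {"action": "click"},                            # missing field
]
report = drift_report(batch)
print(dict(report))
```

Unexpected fields are usually a warning rather than an error (they often signal an additive change), while missing fields and type mismatches are stronger candidates for paging someone.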

Testing strategies that actually catch issues

Testing schema changes across disparate systems requires creativity.

- Contract tests: Run producer and consumer contract checks in CI using sample payloads for each schema version.

- Integration test harness: Use lightweight environments with the real serializer/deserializer to validate end-to-end behavior.

- Chaos testing for schemas: Intentionally inject slight schema variations in staging and verify that consumers either handle them gracefully or fail with clear, actionable errors.

- Schema compatibility matrix: Maintain a matrix showing which consumer versions are compatible with which producer schema versions — it’s like a compatibility spreadsheet but less boring when it saves your dashboard.
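One way to generate such a matrix automatically, rather than maintaining it by hand, is to run each consumer's parsing logic against a sample payload for every producer schema version. Everything in the sketch below (the sample payloads, `consumer_a`, `consumer_b`) is made up for illustration.

```python
from typing import Any, Callable, Dict

# One sample payload per producer schema version (hypothetical data).
SAMPLES: Dict[str, Dict[str, Any]] = {
    "v1": {"id": 1, "amount": 10},
    "v2": {"id": 1, "amount": 10, "currency": "EUR"},
    "v3": {"id": 1, "total": 10, "currency": "EUR"},  # "amount" renamed
}


def consumer_a(p: Dict[str, Any]) -> Any:
    # Strict consumer: requires the original field names.
    return p["id"], p["amount"]


def consumer_b(p: Dict[str, Any]) -> Any:
    # Tolerant consumer: accepts either name and defaults the currency.
    return p["id"], p.get("amount", p.get("total")), p.get("currency", "USD")


CONSUMERS: Dict[str, Callable[[Dict[str, Any]], Any]] = {
    "consumer_a": consumer_a,
    "consumer_b": consumer_b,
}


def build_matrix() -> Dict[str, Dict[str, bool]]:
    """Run every consumer against every sample and record pass/fail."""
    matrix: Dict[str, Dict[str, bool]] = {}
    for cname, parse in CONSUMERS.items():
        matrix[cname] = {}
        for version, payload in SAMPLES.items():
            try:
                parse(payload)
                matrix[cname][version] = True
            except (KeyError, TypeError, ValueError):
                matrix[cname][version] = False
    return matrix


for consumer, row in build_matrix().items():
    print(consumer, row)
# consumer_a {'v1': True, 'v2': True, 'v3': False}
# consumer_b {'v1': True, 'v2': True, 'v3': True}
```

Run in CI, the same loop doubles as a contract test: any `False` cell for a consumer version still in production is a reason to hold the producer change.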

Common challenges and how to overcome them

Communication gaps

Engineering teams often operate in silos. Create a lightweight governance process: a short change announcement, owners, and a mandatory compatibility check before merging.

Legacy consumers

Older jobs that can’t be updated quickly are a headache. Provide temporary adapters or a transformation layer in the ingestion pipeline to keep these consumers functional while you migrate them.

Schema registry sprawl

Multiple registries or inconsistent metadata lead to confusion. Centralize schemas and enforce a single source of truth, or at least a synchronized, documented mapping.

Where automation helps most

Automation reduces human error and speeds response:

- Automatic validation in CI/CD

- Automated schema drift detection with alerting

- Auto-generated migration scripts for common changes (e.g., field renames)

- Self-service tooling for teams to preview how changes affect downstream consumers

Tools and automation are powerful, but they need good governance and observability to be effective.

Trends and future-proofing

Look for these trends as you plan long-term strategies:

- Schema-aware systems: More platforms expose schema metadata natively to make evolution safer.

- Standardized registries: Open, centralized schema registries with strong compatibility rules are becoming a default for serious data teams.

- Automated compatibility analysis: ML-assisted tools may increasingly help predict breaking changes and suggest migration paths.

Adopting these trends early, in a measured way, reduces future technical debt and makes data teams more resilient.

FAQ

What is meant by a data pipeline?

A data pipeline is a set of processes that move and transform data from sources (databases, logs, sensors) to destinations (warehouses, analytics, ML). It’s like a conveyor belt that also inspects and packages data along the way.

How do I build a data pipeline?

Building a pipeline starts with defining sources, outputs, and transformations. Key steps include ingestion, schema validation, transformations, monitoring, and governance. Data engineering services can help design and implement robust architectures.

What is a real-time data pipeline?

A real-time pipeline processes events with minimal latency using streams, brokers, and stream processors. It powers dashboards, personalization, and alerting. Schema changes in real-time systems require extra safeguards for stability.

What are the three main stages in a data pipeline?

The three stages are: ingestion (collecting data), processing/transformation (cleaning, enriching), and storage/consumption (warehouses, APIs). Each stage must be schema-aware to maintain consistency.

What is the first step of a data pipeline?

The first step is identifying and connecting to data sources. This includes understanding schema, volume, and frequency, which ensures a stable design for ingestion and downstream processing.

Schema evolution doesn’t have to be scary. With versioning, compatibility rules, registries, and good operational hygiene, you can keep your pipelines resilient and your teams less stressed. When in doubt: add a version, communicate early, and automate the boring checks — your future self (and your dashboards) will thank you.

For detailed, practical advice on managing schema evolution in pipelines, see the DASCA guide, Estuary’s take on real-time evolution, and Matia’s piece on drift detection and resilience.