Data architecture terms have a way of multiplying like rabbits at a tech conference: data lakehouse, data warehouse, data mesh, data fabric… it’s a lot. If you’ve ever wondered whether “data fabric” is a buzzword or a practical approach that will actually make your life easier, you’re in the right place. This article explains what a data fabric is, how it works, and how it differs from data mesh — plus when you might want one, the other, or both. You’ll walk away with a clear mental model and practical next steps for your organization.

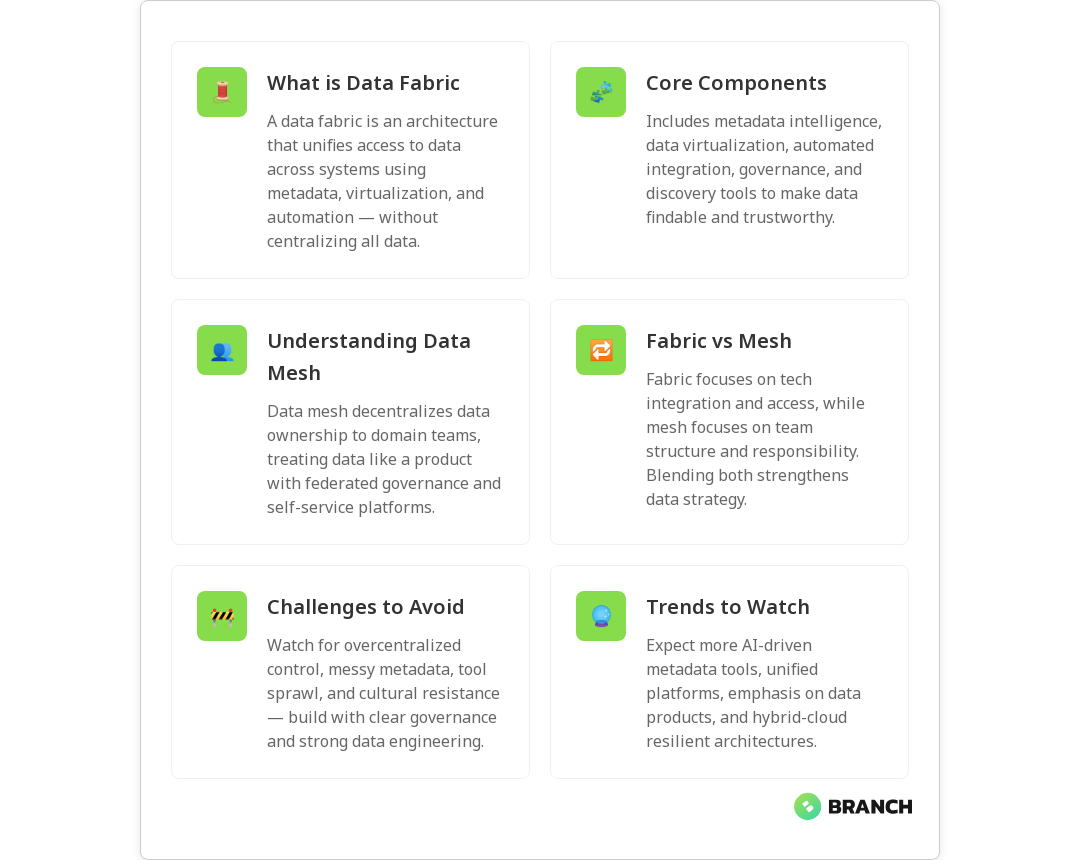

What is data fabric?

At its core, a data fabric is an architectural approach and set of technologies designed to make data available, discoverable, and usable across an organization’s hybrid environment without moving everything into one monolithic store. Think of it like the fabric in a smart wardrobe: it stitches together disparate data sources, provides metadata-driven context, and offers unified access patterns so applications and analysts can find the data they need quickly.

Rather than being a single product you install, data fabric is a layered solution that uses metadata management, data virtualization, catalogs, lineage, and automation to present a logical, connected view of data. Vendors and consultants often package pieces of this, but the idea is consistent: reduce friction and increase trust with an intelligent integration layer.

For a practical overview of how data fabric fits among other modern architectures, see IBM’s comparison of lakehouse, fabric, and mesh.

Key components of a data fabric

- Metadata-driven intelligence: Metadata is the fabric’s thread — catalogs, semantic tags, business glossaries, and automated classification create the context that makes data usable.

- Data virtualization: Present data from many sources through a unified API or layer so consumers can query it without physically copying it into a single store.

- Automated data integration: Pipelines, change data capture (CDC), and smart connectors keep the fabric aware of updates across systems.

- Governance & lineage: Built-in policies, auditing, and lineage tracking so analysts can trust the data and auditors can sleep peacefully.

- Discovery and marketplaces: Catalogs and data marketplaces let users find, understand, and request access to datasets.

Alation’s practical guide to what a data fabric is offers a good primer on how catalogs and governance fit into this picture.
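To make the components above more concrete, here is a minimal Python sketch of how a catalog and a virtualization layer might fit together. It is an illustration under stated assumptions, not how any particular vendor implements a fabric: `CatalogEntry`, `FabricCatalog`, the dataset names, and the in-memory "sources" are all hypothetical. The point it tries to show is that consumers search by metadata and query through one interface, while the rows stay in their source systems until someone asks for them.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]


@dataclass
class CatalogEntry:
    """Metadata describing one dataset (hypothetical shape)."""
    name: str
    description: str
    tags: List[str]
    # The reader fetches rows from the source on demand; nothing is copied up front.
    reader: Callable[[], Iterable[Row]]


class FabricCatalog:
    """Toy stand-in for a catalog plus a virtual access layer."""

    def __init__(self) -> None:
        self._entries: Dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, tag: str) -> List[str]:
        # Discovery: find datasets by metadata, not by knowing where they live.
        return sorted(n for n, e in self._entries.items() if tag in e.tags)

    def query(self, name: str, predicate: Callable[[Row], bool] = lambda row: True) -> List[Row]:
        # Virtualization: read from the source at query time and filter the rows.
        entry = self._entries[name]
        return [row for row in entry.reader() if predicate(row)]


# Two pretend source systems (assumptions for the example).
def read_crm() -> Iterable[Row]:
    return iter([{"customer_id": 1, "region": "EU"}, {"customer_id": 2, "region": "US"}])


def read_orders() -> Iterable[Row]:
    return iter([{"order_id": 10, "customer_id": 1, "total": 40.0}])


catalog = FabricCatalog()
catalog.register(CatalogEntry("crm.customers", "Customer master data", ["customer", "pii"], read_crm))
catalog.register(CatalogEntry("shop.orders", "Online orders", ["sales", "customer"], read_orders))

customer_datasets = catalog.search("customer")
eu_customers = catalog.query("crm.customers", lambda r: r["region"] == "EU")
print(customer_datasets)
print(eu_customers)
```

In a real fabric the readers would be connectors to databases, APIs, or object stores, and the query would usually be pushed down to the source rather than filtered in Python.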

What is data mesh — a quick companion primer

While data fabric emphasizes a technology-led integration layer, data mesh focuses on organizational change. Data mesh proposes domain ownership of data: teams that understand a product or business domain own the datasets as products. Its core principles are domain-oriented data ownership, data as a product, self-serve data platforms, and federated governance. The goal is to reduce bottlenecks at centralized teams and enable scale through clear responsibilities.

Data mesh is more about people and processes; data fabric is more about plumbing and automation. But — and this is important — they are not mutually exclusive. Many organizations blend technical fabrics with mesh-inspired governance to get the best of both worlds. Booz Allen has a thoughtful discussion about using both approaches together.

How data fabric and data mesh differ (and where they overlap)

- Primary focus: Data fabric = integration and metadata-driven access. Data mesh = organizational design and domain ownership.

- Governance style: Fabric often leans toward centralized enforcement of policies via platform capabilities; mesh favors federated governance with domain-defined standards.

- Implementation path: Fabric implementations typically start by cataloging and virtualizing data, while mesh often begins with piloting domain data products and scaling autonomous teams.

- Speed vs. autonomy: Fabric can speed up cross-team access by reducing data movement; mesh gives domains autonomy and responsibility, which can increase ownership but requires cultural change.

- Complementary strengths: A fabric can provide the technical substrate (catalog, lineage, security) that helps mesh domains operate reliably. Conversely, mesh practices make fabric-delivered data products more meaningful and trustworthy.
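The governance contrast in the list above can be sketched in a few lines of Python. This is a simplified illustration, assuming access policies are plain functions over a request dictionary; the policy names, request fields, and domain names are hypothetical. It shows one way the two styles can coexist: a small set of global guardrails applies everywhere (the fabric-style, platform-enforced part), and each domain adds its own rules on top (the mesh-style, federated part).

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Policy = Callable[[Request], bool]


def pii_requires_purpose(req: Request) -> bool:
    # Hypothetical global guardrail: PII may only be read for an approved purpose.
    return not req.get("contains_pii") or req.get("purpose") in {"fraud", "support"}


def supply_chain_roles_only(req: Request) -> bool:
    # Hypothetical domain rule, defined and owned by the supply chain team.
    return req.get("role") in {"analyst", "planner"}


# Enforced centrally by the platform for every domain.
GLOBAL_GUARDRAILS: List[Policy] = [pii_requires_purpose]

# Defined by each domain within the guardrails.
DOMAIN_POLICIES: Dict[str, List[Policy]] = {
    "supply_chain": [supply_chain_roles_only],
}


def is_allowed(domain: str, req: Request) -> bool:
    """A request passes only if every global and every domain policy accepts it."""
    policies = GLOBAL_GUARDRAILS + DOMAIN_POLICIES.get(domain, [])
    return all(policy(req) for policy in policies)


print(is_allowed("supply_chain", {"role": "planner", "contains_pii": False}))
print(is_allowed("stores", {"contains_pii": True, "purpose": "marketing"}))
```

Keeping the global list short is the design choice that matters here: the platform sets guardrails, and domains keep the freedom to define the rest.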

IBM’s deeper dive into augmented data management explains how a metadata-driven fabric can support hybrid environments and even accelerate mesh adoption when paired with automation.


When to choose data fabric, data mesh, or both

There’s no one-size-fits-all answer, but here are pragmatic guidelines:

- Choose data fabric when: You need rapid, secure access to distributed data sources across cloud and on-prem systems, and you want to reduce data duplication. Fabric excels where integration and metadata consolidation are the biggest bottlenecks.

- Choose data mesh when: Your organization is large, domains have specialized knowledge, and you want to scale ownership and responsibility. Mesh is about governance by domain and treating data as a product.

- Choose both when: You need a robust technical layer to support decentralized teams. Fabric supplies the discovery, lineage, and access mechanisms that let mesh-aligned domains publish reliable data products efficiently.

Practical example: Imagine a retail company with separate teams for online sales, stores, and supply chain. A fabric can expose unified views of inventory and sales across systems. A mesh approach can let each domain own and maintain its dataset as a product (store sales dataset, online transactions dataset), while the fabric ensures those datasets are discoverable and accessible enterprise-wide.
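The retail scenario can be sketched roughly as follows. This is an illustrative Python example with made-up names and numbers: `DataProduct`, the dataset names, the SKUs, and the unit counts are assumptions, not data from a real system. Each domain publishes and owns its dataset, and a fabric-style function builds a unified view across them.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class DataProduct:
    """A dataset published by a domain team (hypothetical shape)."""
    name: str
    owner_domain: str
    rows: List[Row]


# Each domain owns and maintains its own product (mesh side).
store_sales = DataProduct(
    name="store_sales",
    owner_domain="stores",
    rows=[{"sku": "A1", "units": 3}, {"sku": "B2", "units": 1}],
)
online_transactions = DataProduct(
    name="online_transactions",
    owner_domain="online_sales",
    rows=[{"sku": "A1", "units": 5}],
)


def units_sold_by_sku(products: Iterable[DataProduct]) -> Dict[str, int]:
    """Unified view across domain products (fabric side): total units per SKU."""
    totals: Dict[str, int] = {}
    for product in products:
        for row in product.rows:
            totals[row["sku"]] = totals.get(row["sku"], 0) + row["units"]
    return totals


unified_sales = units_sold_by_sku([store_sales, online_transactions])
print(unified_sales)  # {'A1': 8, 'B2': 1}
```

Ownership stays visible on every product through `owner_domain`, so a consumer of the unified view can still tell which team to contact about a given dataset.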

Operational benefits of combining both: reduced time to insight, stronger data quality, clearer ownership, and a self-serve experience for analytics teams.

Common challenges and how to avoid them

- Overcentralizing governance: If fabric teams try to control every detail, mesh benefits evaporate. Align governance around standards and guardrails, not micromanagement.

- Poor metadata hygiene: Fabric depends on accurate metadata. Invest in cataloging, lineage, and automated metadata capture from day one.

- Tool sprawl: Don’t bolt on too many point products. Choose platforms that integrate well and can automate routine tasks like discovery and lineage capture.

- Organizational resistance: Data mesh requires cultural change. Start with pilot domains, provide clear incentives, and pair domain teams with platform engineers to reduce friction.
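Metadata hygiene in particular lends itself to automation. As a minimal sketch, assuming catalog entries are plain dictionaries, the following Python function flags datasets that lack an owner, a description, or recorded lineage. The field names (`owner`, `description`, `upstream`) and the sample entries are hypothetical; a real catalog would expose its own schema and API.

```python
from typing import Any, Dict, List

# Fields every catalog entry is expected to carry (an assumption for this sketch).
REQUIRED_FIELDS = ("owner", "description", "upstream")


def is_missing(value: Any) -> bool:
    # None or an empty string counts as missing. An empty list does not,
    # because a root source legitimately has no upstream datasets.
    return value is None or value == ""


def audit_catalog(entries: Dict[str, Dict[str, Any]]) -> Dict[str, List[str]]:
    """Return the missing metadata fields for each dataset that has gaps."""
    problems: Dict[str, List[str]] = {}
    for name, meta in entries.items():
        missing = [f for f in REQUIRED_FIELDS if is_missing(meta.get(f))]
        if missing:
            problems[name] = missing
    return problems


catalog_entries = {
    "store_sales": {"owner": "stores", "description": "Daily store sales", "upstream": ["pos_raw"]},
    "pos_raw": {"owner": "stores", "description": "", "upstream": []},
    "inventory": {"description": "Stock levels"},
}

gaps = audit_catalog(catalog_entries)
print(gaps)  # {'pos_raw': ['description'], 'inventory': ['owner', 'upstream']}
```

A check like this could run on a schedule or in CI, so gaps surface before consumers run into them.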

Trends to watch

- Automation and AI for metadata: Automated tagging, classification, and semantic enrichment of datasets are maturing and will make fabrics smarter and easier to maintain.

- Convergence of platforms: Expect platforms that combine cataloging, virtualization, governance, and pipeline automation — reducing integration overhead.

- Focus on data products: The “data as a product” concept is gaining mainstream traction, which means fabrics will need to support strong SLAs and discoverability for curated datasets.

- Hybrid-cloud support: As businesses keep operating across clouds and on-prem systems, fabrics that handle hybrid environments seamlessly will be strategic.

Note: Many consultancies and solution providers recommend a pragmatic mix. Datalere, for example, outlines how a unified architecture that leverages the strengths of both frameworks reduces duplication and improves collaboration across domains.

How this ties into practical work your team might already do

If your organization is building AI features, powering analytics, or building custom software that relies on trustworthy data, the foundations of a fabric — cataloging, lineage, access controls — are directly useful. Strong data engineering practices are essential for either fabric or mesh approaches. If you want to learn more about designing data infrastructure that powers AI, check out this deeper look at data engineering as the backbone of AI solutions.

Likewise, if you’re evaluating vendor support for data pipelines and governance, an experienced data engineering services partner can accelerate building either fabric capabilities or a self-serve platform that supports mesh teams.

If your roadmap includes AI pilots or retrieval-augmented generation (RAG) — where consistent, high-quality data is essential — tailoring AI solutions to your data strategy will be critical. Consider pairing data architecture decisions with tailored AI planning.

Finally, most fabrics run best on a resilient cloud infrastructure that supports hybrid connectivity, security, and performance tuning. If moving or integrating systems to the cloud is part of the plan, review cloud infrastructure options early.

FAQ

What is data fabric for dummies?

Data fabric is a smart layer that connects and organizes an organization’s data — across clouds, databases, and apps — so people and systems can find and use it without worrying about where it lives. It uses catalogs, metadata, and virtual access to present a unified view.

What is the difference between data fabric and data mesh?

Data fabric is a technology-led approach focused on integration, metadata, and unified access. Data mesh is an organizational model that decentralizes ownership and treats domain datasets as products. They complement each other when combined.

What is the difference between ETL and data fabric?

ETL (Extract, Transform, Load) is a process to move and transform data. Data fabric is a broader architecture that includes ETL alongside metadata management, virtualization, discovery, and governance to create an enterprise-wide data layer.

What is the difference between data lakehouse and data fabric?

A data lakehouse is a storage architecture that blends the flexibility of data lakes with the structured analytics of warehouses. A data fabric is an integration and access layer that sits on top of multiple storage systems, including lakehouses.

What are the advantages of data fabric?

Data fabric provides faster discovery, reduced duplication, unified governance, better support for hybrid environments, and improved self-serve analytics. When paired with clear ownership (via mesh practices), it accelerates trusted data use.

Ready to make data less of a guessing game? Whether you’re thinking about fabric, mesh, or a hybrid approach, the right combination of technology and organizational change will help you turn scattered data into reliable business outcomes — and free up your teams to do the interesting work, not the plumbing.