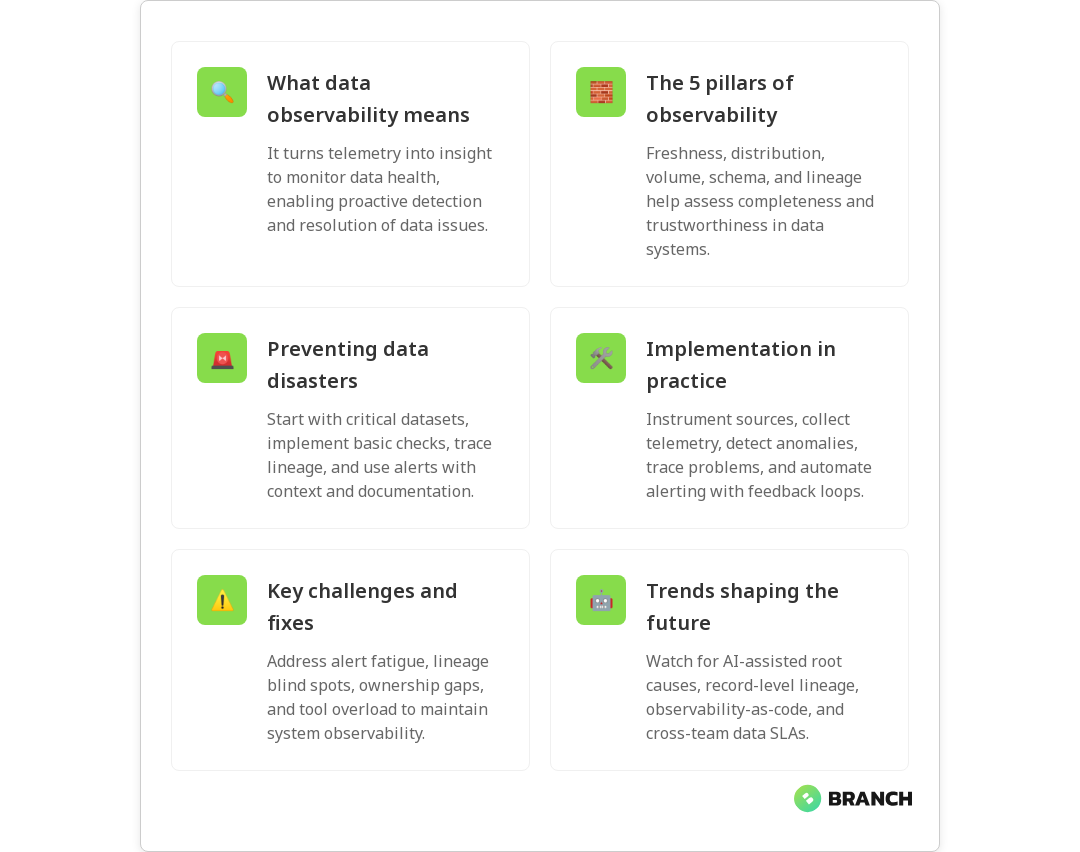

Data observability is the practice of understanding the internal health of your data systems by monitoring the signals they emit; think of it as a fitness tracker for your data pipelines. It matters because, as businesses scale, small data issues become big business headaches: wrong reports, broken ML models, and decisions based on garbage. In this article you’ll learn what data observability actually means, the practical pillars teams rely on, how to implement it without burying your engineers in alert fatigue, and how it protects you from full-blown data disasters.

What is data observability?

At its core, data observability is about turning signals from your data ecosystem into meaningful insights. Rather than reacting when someone spots a bad dashboard, observability helps you detect anomalies, trace problems to their root cause, and recover faster. It’s closely related to monitoring but broader: it focuses on the health of data as a product (completeness, freshness, distribution, volume, and lineage) rather than just system uptime. For a good overview of the concept and its pillars, see the primer from IBM on data observability.

Observability borrows ideas from software observability (metrics, logs, traces) and applies them specifically to data: telemetry about tables, pipelines, schemas, and model inputs. Because those signals span the whole data stack, engineering, analytics, and product teams often collaborate closely when building observability into it. For a deeper dive on pillars and lineage, Splunk’s guide is a useful read: Splunk on data observability.

Why data observability matters — and what’s at risk

Imagine a pricing algorithm that suddenly gets stale input data, or a marketing dashboard built on an incomplete customer table. These aren’t theoretical problems; they hit revenue, trust, and operational speed. Data observability helps you catch signs of data sickness early: rising null rates, skewed distributions, missing daily loads, or unexpected schema changes.

When observability is absent, teams spend a lot of time firefighting: chasing where an error started, validating assumptions, or rolling back models. With observability, incident detection, diagnosis, and resolution become proactive and measurable, which reduces time-to-repair and prevents knock-on issues. IBM explains how observability supports incident diagnosis and system health monitoring, which is central to preventing serious outages: IBM’s explanation.

The pillars (and a practical way to think about them)

Different vendors and thought leaders phrase the pillars differently (some list five, some four), but they converge on the same practical needs. Monte Carlo and other recent write-ups emphasize pillars such as freshness, volume, distribution, schema, and lineage. Here’s a practical breakdown you can use when planning:

- Freshness and availability: Is the data arriving on time? Missing daily loads or delays are often the first sign of trouble.

- Quality and distribution: Are values within expected ranges? Are nulls or outliers spiking?

- Volume and cardinality: Sudden drops or surges in row counts or unique keys often indicate upstream failures or logic bugs.

- Schema and structure: Are new columns appearing or types changing unexpectedly?

- Lineage and traceability: Can you follow a faulty record back through the pipeline to the source system?
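To make the pillars concrete, here is a minimal Python sketch of deterministic checks for four of them (freshness, volume, quality, and schema). It assumes each table load can be summarized as a list of row dictionaries, a load timestamp, and a column-to-type mapping; `TableSnapshot`, the check functions, and the thresholds are hypothetical illustrations rather than any vendor’s API. Lineage is left out because it needs a dependency graph rather than a per-table check.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical data model and checks for illustration; not a real library API.


@dataclass
class TableSnapshot:
    name: str
    rows: list[dict]
    loaded_at: datetime
    columns: dict[str, str]  # column name -> type name


def check_freshness(snap: TableSnapshot, max_age: timedelta, now: datetime) -> bool:
    """Freshness: the latest load must be no older than max_age."""
    return now - snap.loaded_at <= max_age


def check_volume(snap: TableSnapshot, expected_rows: int, tolerance: float = 0.5) -> bool:
    """Volume: row count must stay within +/- tolerance of the expected count."""
    low = expected_rows * (1 - tolerance)
    high = expected_rows * (1 + tolerance)
    return low <= len(snap.rows) <= high


def null_rate(snap: TableSnapshot, column: str) -> float:
    """Quality/distribution: fraction of rows where the column is missing or None."""
    if not snap.rows:
        return 0.0
    nulls = sum(1 for row in snap.rows if row.get(column) is None)
    return nulls / len(snap.rows)


def schema_diff(snap: TableSnapshot, expected: dict[str, str]) -> dict[str, list[str]]:
    """Schema: report added, removed, and retyped columns versus the expected schema."""
    actual = snap.columns
    shared = set(actual) & set(expected)
    return {
        "added": sorted(set(actual) - set(expected)),
        "removed": sorted(set(expected) - set(actual)),
        "retyped": sorted(c for c in shared if actual[c] != expected[c]),
    }


# Usage with made-up values: a 7-hour-old load with 2 rows and an unexpected column.
now = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
orders = TableSnapshot(
    name="orders",
    rows=[{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}],
    loaded_at=datetime(2024, 1, 1, 23, 0, tzinfo=timezone.utc),
    columns={"id": "int", "amount": "float", "coupon": "str"},
)
print(check_freshness(orders, max_age=timedelta(hours=12), now=now))
print(check_volume(orders, expected_rows=100))
print(null_rate(orders, "amount"))
print(schema_diff(orders, {"id": "int", "amount": "float"}))
```

Each check returns a plain boolean, number, or diff, which keeps the checks easy to unit-test and to wire into whatever alerting you already use.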

Splunk outlines how lineage and real-time monitoring together enable faster root-cause analysis and predictive detection: Splunk’s guide. Monte Carlo also emphasizes AI-powered anomaly detection and automated root-cause analysis as emerging best practices: Monte Carlo’s perspective.

How data observability works — in plain engineering terms

Implementing observability is a mix of instrumentation, automation, and team processes.

- Instrument your sources: Emit metrics for pipeline runs, table row counts, schema hashes, and load durations. These are your raw signals.

- Collect telemetry: Aggregate logs, metrics, and record-level metadata into a central place so you can correlate signals across systems.

- Detect anomalies: Use rule-based checks and machine learning models to flag deviations from expected behavior.

- Trace lineage: Map how data moves through ETL jobs, transformations, and downstream models so you can follow an issue to its origin.

- Automate alerts and runbooks: Send actionable alerts with context (what changed, recent runs, sample bad records) and link to runbooks for triage.

- Feedback loop: Capture incident outcomes to refine checks and reduce false positives over time.
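As a rough sketch of the first few steps, the Python below instruments a hypothetical pipeline step so that each run emits a metrics record (row count, schema hash, load duration). It then applies a simple statistical check, a z-score of the current row count against recent history, as a stand-in for the rule-based or ML detection described above; this is plain statistics, not machine learning. Finally, it packages a contextual alert. `RunMetrics`, `run_and_instrument`, the 3.0 threshold, and the runbook path convention are all illustrative assumptions; a real setup would ship these records to whatever metrics store your stack uses.

```python
import hashlib
import json
import statistics
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class RunMetrics:
    """Telemetry emitted for one pipeline run (hypothetical schema)."""
    table: str
    row_count: int
    schema_hash: str
    duration_s: float


def schema_hash(columns: dict[str, str]) -> str:
    """Order-independent hash of column names and types; any schema change alters it."""
    canonical = json.dumps(sorted(columns.items()))
    return hashlib.sha256(canonical.encode()).hexdigest()[:12]


def run_and_instrument(table: str, load_fn: Callable[[], tuple[list[dict], dict[str, str]]]) -> RunMetrics:
    """Run a load function returning (rows, columns) and record its raw signals."""
    start = time.perf_counter()
    rows, columns = load_fn()
    duration = time.perf_counter() - start
    return RunMetrics(table, len(rows), schema_hash(columns), duration)


def zscore_anomaly(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag the current value if it is more than `threshold` std devs from the mean."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return current != mean  # perfectly stable history: any change is suspicious
    return abs(current - mean) / stdev > threshold


def build_alert(metrics: RunMetrics, history: list[int], sample_rows: list[dict]) -> dict:
    """Package an alert with context: what changed, recent runs, and sample records."""
    return {
        "table": metrics.table,
        "signal": "row_count",
        "current": metrics.row_count,
        "recent_runs": history[-5:],
        "sample_records": sample_rows[:3],
        "runbook": f"runbooks/{metrics.table}.md",  # hypothetical path convention
    }


def load_orders():
    # Stand-in loader: returns 120 rows where roughly 1,000 would be normal.
    rows = [{"id": i, "amount": 10.0} for i in range(120)]
    return rows, {"id": "int", "amount": "float"}


metrics = run_and_instrument("orders", load_orders)
history = [1000, 980, 1015, 995, 1005]  # hypothetical recent row counts
if zscore_anomaly(history, metrics.row_count):
    print(build_alert(metrics, history, sample_rows=[{"id": 0, "amount": 10.0}]))
```

The feedback-loop step then amounts to tuning `threshold` (or swapping the detector) based on which alerts turned out to be real incidents.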

Milvus offers a practical explanation of how metrics, logs, and traces support disaster recovery: Milvus on disaster recovery.

Strategies to prevent data disasters (step-by-step)

Preventing data disasters is less about expensive tools and more about smart priorities and repeatable practices. Here’s a pragmatic roadmap your team can use:

- Inventory and classification: Know what datasets you have, where they’re used, and which are business-critical.

- Implement lightweight checks: Start with row counts, null rates, and freshness checks on critical tables. Expand later.

- Establish lineage: Use metadata tools to map dependencies so you can answer “what will break if this table fails?” quickly.

- Contextual alerts: Send alerts that include recent metrics, sample records, and links to dashboards and runbooks.

- On-call practices: Rotate ownership, document runbooks, and review incidents to prevent recurrence.

- Use ML where it helps: Anomaly detection can reduce noise by prioritizing the most suspicious deviations, but start with deterministic checks before layering ML.
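Once lineage is captured, answering “what will break if this table fails?” is essentially a graph traversal. Below is a minimal Python sketch, assuming lineage has already been extracted into a hypothetical mapping from each dataset to the datasets that read from it directly; real metadata tools store this in their own formats, and the table names are invented for illustration.

```python
from collections import deque

# Hypothetical lineage: dataset -> datasets that consume it directly.
LINEAGE: dict[str, list[str]] = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customer_ltv": ["ml.churn_features"],
    "ml.churn_features": ["ml.churn_model"],
}


def downstream_impact(lineage: dict[str, list[str]], failed: str) -> list[str]:
    """Breadth-first search returning every dataset downstream of `failed`."""
    seen: set[str] = {failed}  # includes the start node so cycles back to it are ignored
    queue = deque([failed])
    impacted: list[str] = []
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:  # skip shared descendants already visited
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


print(downstream_impact(LINEAGE, "staging.orders"))
```

Running the same traversal over the reversed graph answers the opposite question during root-cause analysis: which upstream sources could have produced a bad record.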

Common challenges and how to overcome them

Teams run into a handful of repeatable obstacles when building observability:

- Alert fatigue: Too many noisy checks create false alarms. Fix by tuning thresholds, batching similar anomalies, and prioritizing critical datasets.

- Blind spots in lineage: Without accurate lineage, root-cause analysis stalls. Invest in metadata capture and automated lineage tracing where possible.

- Ownership ambiguity: If no one owns a dataset, it’s unlikely to be observed well. Assign data owners and make SLAs explicit.

- Tool sprawl: Multiple monitoring tools with fragmented signals slow diagnosis. Consolidate telemetry or integrate tools to provide a single pane of glass.
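One way to apply the alert fatigue fixes above is to collapse raw anomalies by dataset and check before notifying anyone, and to page on-call only for critical datasets while sending the rest to a digest. The sketch below assumes a hypothetical `Anomaly` record and a hand-maintained set of critical datasets; the "page" and "digest" routing labels are illustrative, not a feature of any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Anomaly:
    """One raw finding from a check (hypothetical structure)."""
    dataset: str
    check: str
    detail: str


CRITICAL = {"marts.revenue", "ml.churn_features"}  # hypothetical critical datasets


def batch_and_route(anomalies: list[Anomaly], critical: set[str]) -> dict[str, list[dict]]:
    """Collapse duplicates per (dataset, check) and split them into page vs. digest queues."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for a in anomalies:
        groups[(a.dataset, a.check)].append(a.detail)

    routed: dict[str, list[dict]] = {"page": [], "digest": []}
    for (dataset, check), details in sorted(groups.items()):
        alert = {"dataset": dataset, "check": check, "count": len(details), "examples": details[:3]}
        routed["page" if dataset in critical else "digest"].append(alert)
    return routed


raw = [
    Anomaly("marts.revenue", "null_rate", "amount null rate 0.31"),
    Anomaly("marts.revenue", "null_rate", "amount null rate 0.33"),
    Anomaly("staging.events", "freshness", "last load 9h ago"),
]
result = batch_and_route(raw, CRITICAL)
print(len(result["page"]), len(result["digest"]))
```

Three raw findings become one page and one digest entry; the same grouping key is a natural place to add suppression windows later.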

Collibra’s take on maintaining data system health stresses monitoring, tracking, and proactive troubleshooting as key activities; together they are essentially the antidote to these challenges. See Collibra on defining data observability.

Tools and automation — what to look for

The market has specialized observability platforms, pipeline-focused tools, and general-purpose monitoring systems. When evaluating tools, prioritize:

- Automated lineage and easy integration with your data stack.

- Flexible rules and built-in anomaly detection (with explainability).

- Actionable alert context (sample bad records, diffs, and recent job runs).

- Good metadata management and collaboration features so analysts and engineers can share context.

Monte Carlo and Splunk both highlight automation and predictive analytics as growing trends. Automation reduces mean time to detect (MTTD) and mean time to repair (MTTR), and predictive signals help head off incidents before they escalate: Monte Carlo and Splunk.

Trends to watch

Watch for these evolving trends in data observability:

- AI-assisted root cause analysis: Tools are getting better at suggesting the most probable causes and the minimal set of failing components.

- Record-level lineage: Tracing not just tables but individual records through transformations is becoming more feasible and valuable for debugging.

- Observability-as-code: Defining checks, alerts, and SLAs in version-controlled pipelines to keep observability reproducible and auditable.

- Cross-team SLAs: Product, analytics, and engineering teams formalize dataset contracts so ownership is clear and expectations are aligned.
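Observability-as-code usually means keeping check definitions in a file that lives in version control and is reviewed like any other change. As a minimal sketch, assuming a hypothetical JSON spec format (real tools define their own, often in YAML; JSON is used here only so the example needs nothing beyond the standard library), the Python below parses declarative checks and evaluates them against current metrics. The `owner` field hints at how a dataset contract can name who is responsible.

```python
import json

# Hypothetical spec, e.g. stored as checks/orders.json in the repository.
SPEC = """
{
  "dataset": "orders",
  "owner": "analytics-eng",
  "checks": [
    {"metric": "row_count", "op": ">=", "value": 500},
    {"metric": "null_rate.amount", "op": "<=", "value": 0.01},
    {"metric": "hours_since_load", "op": "<=", "value": 24}
  ]
}
"""

# Supported comparison operators for the hypothetical spec format.
OPS = {
    ">=": lambda actual, limit: actual >= limit,
    "<=": lambda actual, limit: actual <= limit,
    "==": lambda actual, limit: actual == limit,
}


def evaluate(spec_text: str, metrics: dict[str, float]) -> list[str]:
    """Return a human-readable failure for each check the metrics violate or lack."""
    spec = json.loads(spec_text)
    failures = []
    for check in spec["checks"]:
        actual = metrics.get(check["metric"])
        if actual is None:
            failures.append(f"{spec['dataset']}: metric {check['metric']} missing")
        elif not OPS[check["op"]](actual, check["value"]):
            failures.append(
                f"{spec['dataset']}: {check['metric']}={actual} violates {check['op']} {check['value']} "
                f"(owner: {spec['owner']})"
            )
    return failures


current = {"row_count": 480, "null_rate.amount": 0.002, "hours_since_load": 3}
for line in evaluate(SPEC, current):
    print(line)
```

Because thresholds live in the spec, tightening or loosening a check shows up as a reviewable diff rather than a silent change in a UI.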

FAQ

What is meant by data observability?

Data observability is the practice of collecting telemetry (metrics, logs, metadata) from pipelines and systems to monitor health, detect anomalies, trace lineage, and resolve issues quickly. It treats data as a product with a focus on availability, quality, and traceability. See IBM on data observability.

What are the 4 pillars of data observability?

Common pillars include freshness, distribution (quality), volume, and schema. Many frameworks add lineage as a fifth pillar for tracing the origin of issues. See Splunk’s pillars.

What are the use cases of data observability?

Use cases include early detection of ingestion failures, preventing bad data from reaching dashboards, faster root-cause analysis, automated alerting, and improved confidence in ML outputs. It also supports disaster recovery; see Milvus on disaster recovery.

What is the difference between data observability and data monitoring?

Monitoring uses predefined checks and dashboards to confirm uptime or that known metrics stay within thresholds. Observability is broader: it uses the signals a system already emits (metrics, logs, metadata) to understand behavior and diagnose new, previously unseen issues without adding instrumentation for each one.

How does data observability work?

It works by instrumenting data flows to emit telemetry (counts, schemas, runtimes), collecting it centrally, applying anomaly detection, and mapping lineage for traceability. Alerts and runbooks speed resolution. Tools like Monte Carlo and Collibra provide practical implementations.

Closing thoughts

Data observability isn’t a magic wand, but it’s one of the highest-leverage investments a data-driven organization can make. It reduces downtime, protects revenue and reputation, and returns time to engineers and analysts who would otherwise be stuck in perpetual triage. Start small, focus on critical datasets, and build processes around the signals your systems provide; you’ll be far better placed to avoid data disasters and sleep a little easier at night.