DataOps sounds a little like a trendy gym for data pipelines — and in a way it is: disciplined, repeatable, and focused on measurable improvement. But it’s more than a buzzword. DataOps brings engineering rigor, automation, and cross-team collaboration to the messy world of data so businesses can deliver reliable insights faster. In this article you’ll learn what DataOps actually means, the core principles and components that make it work, practical ways it increases productivity, and a roadmap to get started without tearing down the house.

Why DataOps matters right now

Companies are drowning in data but starving for trustworthy insights. Traditional data projects can be slow, error-prone, and siloed: engineers build pipelines, analysts complain about data quality, and stakeholders wait months for reports that are already stale. DataOps addresses those frictions by applying software engineering practices — automation, CI/CD, testing, and collaboration — to the data lifecycle. The result is faster delivery of analytics, fewer surprises, and teams that can iterate on data products with confidence.

For an overview of how organizations are defining and adopting DataOps, IBM's practical primer on applying automation and collaborative workflows across data teams is a good starting point.

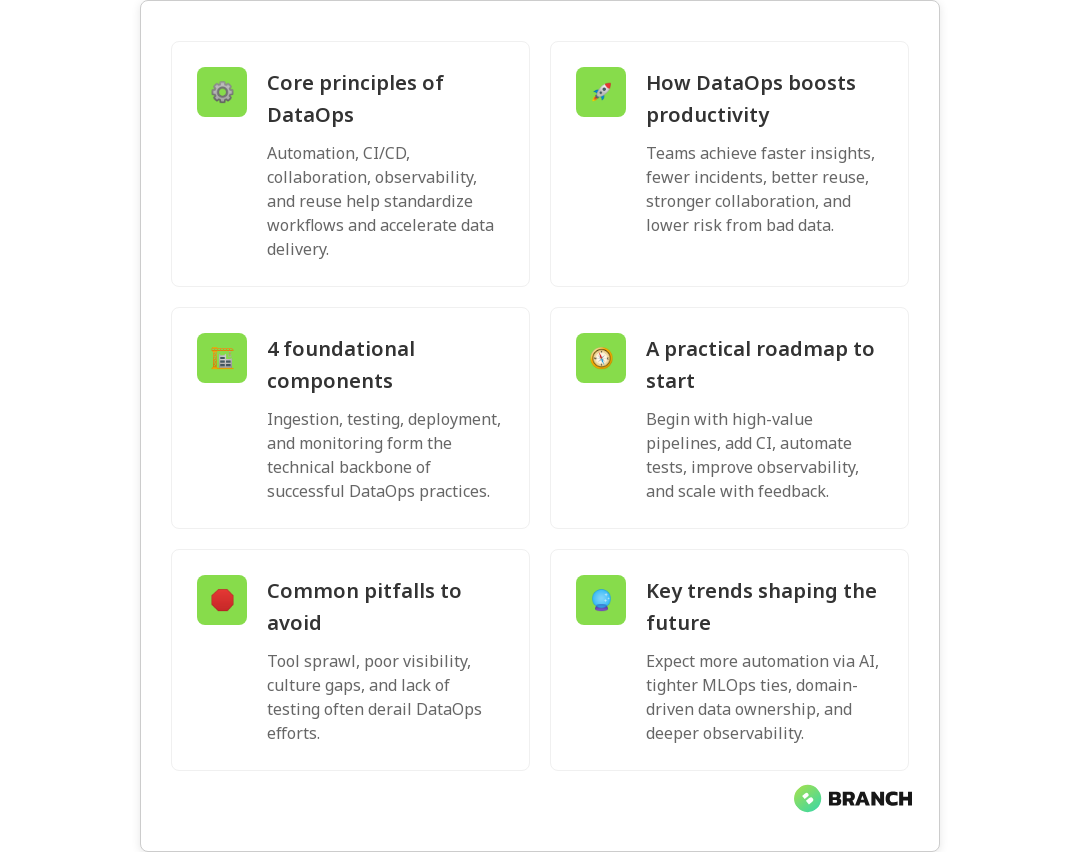

Core principles of DataOps

DataOps isn’t a checklist you mark off once; it’s a cultural and technical shift. Leaders in the field highlight a few shared principles:

- Automation: Remove repetitive manual steps with pipelines, testing, and deployment automation to reduce human error.

- Collaboration: Break down silos between data engineers, analysts, operations, and business stakeholders so everyone shares ownership of outcomes.

- Continuous delivery and integration: Apply CI/CD for data and analytics so changes reach production quickly and safely.

- Monitoring and observability: Treat data pipelines like software systems — instrument them to detect anomalies, performance issues, and data drift.

- Reusable assets: Build shared data assets, templates, and modules to speed development and standardize quality.

Alation summarizes these ideas well and connects them to practical processes for making analytics repeatable and reliable; their piece on defining DataOps is a useful read for teams shaping policy and tooling (Alation).
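To make the automation and reusable-assets principles concrete, here is a minimal Python sketch of shareable data checks. It assumes rows arrive as plain dictionaries; the check builders (`not_null`, `in_range`), the `run_suite` helper, and the sample `orders` columns are hypothetical and not taken from any particular tool.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
# A check takes a batch of rows and returns human-readable failure messages.
Check = Callable[[List[Row]], List[str]]


def not_null(column: str) -> Check:
    """Build a reusable check that flags rows where `column` is missing or None."""
    def check(rows: List[Row]) -> List[str]:
        return [f"row {i}: {column} is null"
                for i, row in enumerate(rows) if row.get(column) is None]
    return check


def in_range(column: str, low: float, high: float) -> Check:
    """Build a reusable check that flags non-null values outside [low, high]."""
    def check(rows: List[Row]) -> List[str]:
        failures = []
        for i, row in enumerate(rows):
            value = row.get(column)
            if value is not None and not low <= value <= high:
                failures.append(f"row {i}: {column}={value} outside [{low}, {high}]")
        return failures
    return check


def run_suite(rows: List[Row], checks: List[Check]) -> List[str]:
    """Run every check and collect all failures so one run reports everything."""
    failures: List[str] = []
    for check in checks:
        failures.extend(check(rows))
    return failures


# Hypothetical suite for an orders table, assembled from shared building blocks.
orders_suite = [not_null("order_id"), in_range("amount", 0, 10_000)]
sample = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": None, "amount": 40.0},
    {"order_id": 3, "amount": -5.0},
]
print(run_suite(sample, orders_suite))
```

Because each builder returns a plain function, the same `not_null` or `in_range` check can be reused across many pipelines with different column names and limits.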

The four primary components of DataOps

Different experts phrase components slightly differently, but most agree on four pillars that operationalize the principles above:

- Data ingestion and orchestration: Reliable, scheduled, and event-driven pipelines that move data from sources to storage and processing systems.

- Data quality and testing: Automated validation, schema checks, and unit/integration tests to ensure accuracy before data reaches consumers.

- Deployment and CI/CD: Version-controlled transformations and automated deployments for analytics assets and pipelines.

- Monitoring and feedback loops: End-to-end observability with alerts, lineage, and feedback channels so problems are found and fixed quickly.

In practice, these components are implemented with a mix of engineering skills, cloud services, and governance — and when they’re stitched together thoughtfully, productivity leaps. Splunk’s overview of DataOps gives a pragmatic view of pipeline orchestration and observability practices that help teams scale (Splunk).
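The four components can be seen working together in a toy pipeline. This sketch uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) as a stand-in for a real orchestrator; the `ingest`, `validate`, and `publish` tasks and the in-memory `state` dict are hypothetical placeholders for real sources, tests, and deployment targets. The returned run log plays the role of monitoring output.

```python
import time
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical in-memory "storage" shared by the placeholder tasks below.
state: Dict[str, list] = {}


def ingest() -> None:
    # Stand-in for pulling data from a source system.
    state["raw"] = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}]


def validate() -> None:
    # Stand-in for data quality tests: reject negative amounts.
    bad = [r for r in state["raw"] if r["amount"] < 0]
    if bad:
        raise ValueError(f"{len(bad)} rows failed validation")


def publish() -> None:
    # Stand-in for deploying a validated dataset to consumers.
    state["published"] = list(state["raw"])


def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 deps: Dict[str, Set[str]]) -> List[Tuple[str, str, float]]:
    """Run tasks in dependency order and return a (task, status, seconds) log.

    After the first failure, remaining tasks are marked 'skipped' so that
    unvalidated data never reaches the publish step.
    """
    log: List[Tuple[str, str, float]] = []
    failed = False
    for name in TopologicalSorter(deps).static_order():
        if failed:
            log.append((name, "skipped", 0.0))
            continue
        start = time.perf_counter()
        try:
            tasks[name]()
            status = "ok"
        except Exception as exc:
            status = f"failed: {exc}"
            failed = True
        log.append((name, status, time.perf_counter() - start))
    return log


tasks = {"ingest": ingest, "validate": validate, "publish": publish}
deps = {"validate": {"ingest"}, "publish": {"validate"}}  # task -> prerequisites
for name, status, _ in run_pipeline(tasks, deps):
    print(name, status)
```

Skipping every remaining task after the first failure is a deliberate simplification; production orchestrators typically skip only the failed task's downstream dependents and add retries, scheduling, and alerting.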

How DataOps transforms productivity — concrete benefits

“Productivity” for a data team isn’t just lines of code — it’s faster delivery of reliable answers that stakeholders can trust. Here’s how DataOps boosts that productivity in measurable ways:

- Faster time-to-insight: Automated pipelines and deployment mean analysts and product teams get access to up-to-date data sooner.

- Less firefighting: Monitoring and alerting reduce time spent on surprises and emergency fixes so engineers can focus on improvements.

- Higher reuse and consistency: Shared modules and templates cut duplicate work and speed onboarding for new team members.

- Better collaboration: Clear ownership, shared processes, and cross-functional reviews reduce handoff friction between teams.

- Reduced risk: Tests and approvals in CI/CD reduce the chance that a broken pipeline or bad data propagates to reports or ML models.

In short: fewer interruptions, faster releases, and more predictable outcomes. Informatica frames this as systems thinking across the data lifecycle, which aligns stakeholders and simplifies delivery (Informatica).
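The "reduced risk" point usually comes down to a gate in the CI pipeline that refuses to deploy when data tests fail or a change lacks review. Here is a minimal sketch, assuming tests are simple functions that return `True` on success and that approval status is passed in as a flag. The test functions, the sample `rows`, and the `deployment_gate` name are illustrative, not part of any specific CI product.

```python
import sys
from typing import Callable, Dict, List

# Hypothetical data under test; a real job would query the warehouse instead.
rows = [{"customer_id": 1, "revenue": 120.0}, {"customer_id": 2, "revenue": 80.0}]


def no_duplicate_ids() -> bool:
    ids = [r["customer_id"] for r in rows]
    return len(ids) == len(set(ids))


def revenue_non_negative() -> bool:
    return all(r["revenue"] >= 0 for r in rows)


def deployment_gate(tests: Dict[str, Callable[[], bool]], approved: bool) -> int:
    """Return an exit code for a CI step: 0 allows deployment, 1 blocks it."""
    failures: List[str] = [name for name, test in tests.items() if not test()]
    for name in failures:
        print(f"FAIL {name}", file=sys.stderr)
    if not approved:
        print("BLOCKED: no reviewer approval recorded", file=sys.stderr)
    return 0 if approved and not failures else 1


suite = {"no_duplicate_ids": no_duplicate_ids,
         "revenue_non_negative": revenue_non_negative}
print(deployment_gate(suite, approved=True))   # 0: safe to deploy
print(deployment_gate(suite, approved=False))  # 1: blocked pending review
```

In a CI job, the script would typically end with `sys.exit(deployment_gate(suite, approved))`, so a nonzero exit code fails the build step before anything reaches production.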

Practical roadmap: how to implement DataOps

Implementing DataOps doesn’t require you to rip out your stack overnight. Follow a pragmatic, phased approach:

- Map your value streams: Identify the highest-value pipelines (reporting, ML features, billing data) and target them first.

- Introduce source control and CI: Store transformations and pipeline definitions in version control and add automated tests and build pipelines.

- Automate tests: Start with schema and regression tests, then expand to data quality and performance tests.

- Instrument end-to-end observability: Add lineage, metrics, and alerts so teams can detect problems early and measure SLAs.

- Standardize and reuse: Create libraries, templates, and documentation to reduce ad hoc work and accelerate new pipelines.

- Iterate and expand: Use feedback from the initial projects to adapt processes and scale across domains.
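For the "start with schema and regression tests" step, both kinds of test can begin very small. This sketch assumes rows are dictionaries and that a baseline metric (for example, total order amount) was recorded from a previous known-good run; `EXPECTED_SCHEMA`, the 5% tolerance, and the sample values are assumptions chosen for illustration.

```python
from typing import Any, Dict, List

# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA: Dict[str, type] = {"order_id": int, "amount": float, "country": str}


def schema_errors(rows: List[Dict[str, Any]], expected: Dict[str, type]) -> List[str]:
    """Report missing columns, unexpected columns, and wrong value types."""
    errors: List[str] = []
    for i, row in enumerate(rows):
        missing = expected.keys() - row.keys()
        extra = row.keys() - expected.keys()
        if missing:
            errors.append(f"row {i}: missing {sorted(missing)}")
        if extra:
            errors.append(f"row {i}: unexpected {sorted(extra)}")
        for col, typ in expected.items():
            if col in row and not isinstance(row[col], typ):
                errors.append(f"row {i}: {col} is {type(row[col]).__name__}, "
                              f"expected {typ.__name__}")
    return errors


def regression_ok(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Pass if `current` is within a relative `tolerance` of the recorded baseline."""
    if baseline == 0:
        return current == 0
    return abs(current - baseline) / abs(baseline) <= tolerance


rows = [{"order_id": 1, "amount": 9.5, "country": "DE"},
        {"order_id": "2", "amount": 3.0}]
print(schema_errors(rows, EXPECTED_SCHEMA))
print(regression_ok(current=12.5, baseline=12.0))  # within 5% of baseline
```

Note that `isinstance` treats `bool` as a subclass of `int`; a stricter schema test could compare `type(value) is typ` instead.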

It helps to pair technical changes with cultural shifts: regular standups between engineering and analytics, blameless postmortems, and clear SLAs for data availability. Industry coverage suggests a move toward unified, domain-aware DataOps as teams decentralize responsibilities while keeping shared standards (DBTA).
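A data-availability SLA can be checked mechanically by comparing each table's last successful load time with its maximum allowed age. This is a sketch under assumptions: load timestamps are timezone-aware UTC `datetime` values supplied by an orchestrator or warehouse metadata, and the table names and SLA windows are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def stale_tables(last_loaded: Dict[str, datetime],
                 slas: Dict[str, timedelta],
                 now: datetime) -> List[str]:
    """Return tables whose data is older than their SLA or was never loaded."""
    breaches = []
    for table, max_age in slas.items():
        loaded = last_loaded.get(table)
        if loaded is None or now - loaded > max_age:
            breaches.append(table)
    return sorted(breaches)


# Hypothetical SLAs and load times for illustration.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "orders": now - timedelta(minutes=30),
    "billing": now - timedelta(hours=30),
}
slas = {
    "orders": timedelta(hours=1),
    "billing": timedelta(hours=24),
    "ml_features": timedelta(hours=6),
}
print(stale_tables(last_loaded, slas, now))
```

A scheduler could run a check like this every few minutes and page the owning team when the list is non-empty, which turns an agreed SLA into something measurable.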

Common challenges and how to avoid them

DataOps sounds great — but it’s not magic. Teams often stumble on a few recurring issues:

- Tool sprawl: Too many disparate tools can make automation and governance harder.

- Incomplete observability: If you can’t see data lineage or latency, you can’t fix the right problem.

- Cultural resistance: Without buy-in from analysts and business stakeholders, DataOps becomes an engineering-only initiative.

- Underinvesting in tests: Teams that treat tests as optional will see data regressions slip into production.

Address these by consolidating around a few flexible, well-integrated tools; documenting ownership and SLAs; and treating DataOps as a product that serves users, not just a platform engineers maintain.
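Lineage and documented ownership pay off together when something breaks: walking the lineage graph downstream shows which assets are affected and who owns them. The sketch below assumes lineage is available as a simple adjacency mapping and ownership as a dictionary; the dataset and team names are hypothetical. Assets without a recorded owner are grouped under `"unowned"`, which surfaces gaps in documentation.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each dataset maps to datasets built directly from it.
LINEAGE: Dict[str, List[str]] = {
    "raw_orders": ["stg_orders"],
    "stg_orders": ["fct_revenue", "ml_churn_features"],
    "fct_revenue": ["exec_dashboard"],
}
# Hypothetical ownership registry.
OWNERS: Dict[str, str] = {
    "stg_orders": "data-eng",
    "fct_revenue": "analytics",
    "ml_churn_features": "ml-team",
    "exec_dashboard": "analytics",
}


def downstream(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first walk collecting every asset built from `dataset`."""
    seen: Set[str] = set()
    queue = deque([dataset])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def who_to_notify(dataset: str, lineage: Dict[str, List[str]],
                  owners: Dict[str, str]) -> Dict[str, List[str]]:
    """Group impacted downstream assets by owning team."""
    notify: Dict[str, List[str]] = {}
    for asset in sorted(downstream(dataset, lineage)):
        notify.setdefault(owners.get(asset, "unowned"), []).append(asset)
    return notify


print(who_to_notify("stg_orders", LINEAGE, OWNERS))
```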

Trends to watch

DataOps continues to evolve. Watch for these trends that will shape productivity gains in the next few years:

- Domain-oriented DataOps: Teams decentralize data ownership by domain while preserving enterprise standards.

- Increased automation with AI: Automated anomaly detection, data cataloging, and test generation reduce manual overhead.

- Tighter integration with ML lifecycle: DataOps practices will more closely align with MLOps to ensure models get reliable, versioned data.

- Stronger emphasis on observability: Tooling that provides lineage, drift detection, and SLA monitoring becomes standard practice.
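Automated anomaly detection does not have to start with machine learning. As a simple statistical stand-in (not an AI model), the sketch below flags a day's row count when it lies more than three sample standard deviations from recent history. The threshold of 3.0 and the sample counts are assumptions; production tools typically also account for seasonality and trend.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[int], today: int, threshold: float = 3.0) -> bool:
    """Flag `today` if its z-score against `history` exceeds `threshold`."""
    if len(history) < 2:
        return False  # too little history to estimate spread
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return today != mu  # any change from a perfectly constant series stands out
    return abs(today - mu) / sigma > threshold


# Hypothetical daily row counts for a table.
history = [1000, 1020, 980, 1010, 990]
print(is_anomalous(history, 1005))  # normal day
print(is_anomalous(history, 400))   # sudden drop, likely a broken upstream load
```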

As DataOps matures, it becomes less about a set of tactics and more about a repeatable operating model that lets data teams deliver value predictably. For organizations building AI and analytics, DataOps is no longer optional — it’s foundational.

FAQ

What is meant by DataOps?

DataOps is a set of practices and cultural values that apply software engineering principles — automation, testing, CI/CD, and collaboration — to the data lifecycle. It enables faster delivery of reliable, high-quality data and analytics by treating pipelines like software products.

What are the key principles of DataOps?

The key principles include automation, collaboration, continuous integration and delivery, monitoring/observability, and reuse of data assets. These practices reduce manual effort, improve quality, and speed up the delivery of insights.

What are the four primary components of DataOps?

The four primary components often cited are data ingestion and orchestration, data quality and testing, deployment and CI/CD for analytics assets, and monitoring with feedback loops and lineage. Together they create repeatable, resilient data pipelines.

What are the benefits of DataOps?

Benefits include faster time-to-insight, fewer production issues, higher reuse of data work, improved collaboration between teams, and reduced risks from data errors. DataOps supports scalable, trustworthy analytics while freeing teams to focus on higher-value work.

How do you implement DataOps?

Start by mapping high-value data flows, introduce version control and CI/CD for transformations, add automated tests for data quality, implement observability and lineage, and build reusable components. Scale from a pilot to broader adoption while aligning stakeholders around SLAs and ownership.

DataOps isn’t a silver bullet, but it is the operating model that turns data from an unpredictable resource into a dependable asset. With the right mix of engineering practices, cultural alignment, and smart tooling, teams can spend less time fixing pipelines and more time building insights that move the business forward — and that’s productivity worth cheering for.