Data orchestration is the invisible conductor that keeps data pipelines playing in harmony — and choosing the right conductor matters. Whether you’re running nightly ETL, powering ML feature stores, or wiring data for real-time analytics, the orchestrator you pick affects developer productivity, reliability, and long-term maintenance. In this article we’ll compare Apache Airflow, Prefect, and Dagster across design philosophies, developer experience, scheduling and execution models, observability, and real-world fit. By the end you’ll have practical guidance on which tool to try first and what to watch for during adoption.

Why data orchestration matters

Orchestration does more than kick off jobs at specific times. It manages dependencies, retries failures intelligently, coordinates across systems, and surfaces run status and logs to the teams that operate the pipelines. As organizations scale, orchestration becomes the spine of reliable data delivery: a single scheduling quirk can delay reports, break models, or cause production outages.
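To make "manages dependencies" and "retries failures" concrete, here is a minimal, library-agnostic sketch using only the Python standard library. It is not how Airflow, Prefect, or Dagster implement these features; the task names and the `run_with_retries` and `run_pipeline` helpers are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter
import time


def run_with_retries(fn, max_attempts=3, base_delay=0.0):
    """Call fn(); on an exception, retry with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure
            time.sleep(base_delay * 2 ** (attempt - 1))


def run_pipeline(tasks, deps, max_attempts=3):
    """Run tasks in dependency order and return the execution order.

    tasks: name -> zero-argument callable
    deps:  name -> set of upstream task names
    """
    order = []
    for name in TopologicalSorter(deps).static_order():
        run_with_retries(tasks[name], max_attempts=max_attempts)
        order.append(name)
    return order


# Hypothetical pipeline: "transform" fails once before succeeding.
calls = {"transform": 0}


def flaky_transform():
    calls["transform"] += 1
    if calls["transform"] < 2:
        raise RuntimeError("transient failure")


tasks = {"extract": lambda: None, "transform": flaky_transform, "load": lambda: None}
deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

order = run_pipeline(tasks, deps)
print(order)  # ['extract', 'transform', 'load']
```

A real orchestrator adds persistence, scheduling, distributed execution, and a UI on top of this core loop, which is where the tools compared below differ.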

Quick primer: What each tool brings to the table

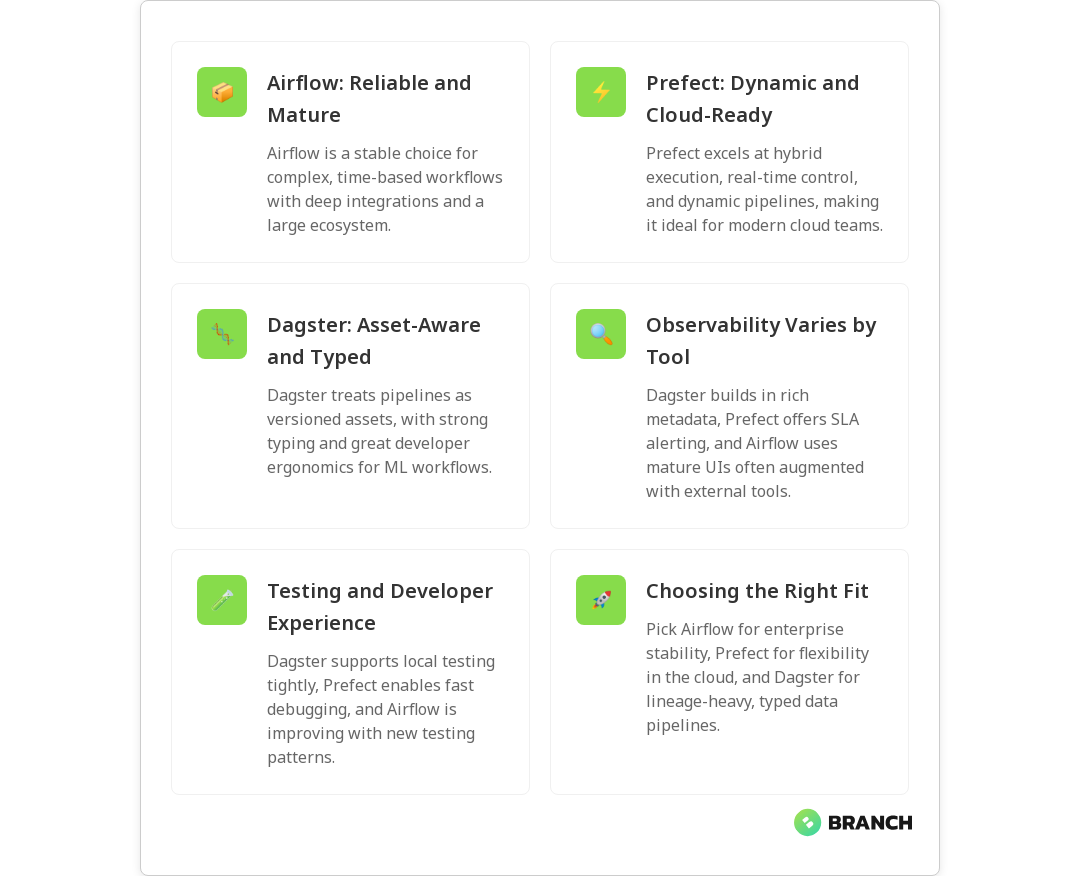

- Apache Airflow — Mature, battle-tested, and community-backed. Airflow excels at complex static DAGs, deep integration with diverse systems, and proven production deployments. It’s a safe default for heavy scheduling needs, though it can feel heavyweight for dynamic or asset-centric workflows.

- Prefect — Modern, cloud-friendly, and API-driven. Prefect emphasizes dynamic flows, hybrid execution, and runtime control (think circuit-breakers and real-time SLA alerting). It often delivers faster developer iteration for cloud-native teams and supports both local and managed control planes.

- Dagster — Developer-first and asset-aware. Dagster treats pipelines as versioned assets and focuses on strong typing, local development ergonomics, and observability for metadata. It’s a strong contender for ML pipelines and teams who want explicit tracking of data assets rather than just tasks.

These summaries align with recent comparisons that highlight Airflow’s stability, Prefect’s dynamic execution model, and Dagster’s asset-based approach (see the risingwave comparison and the getgalaxy guide).

Key differences that affect day-to-day work

1) Static DAGs vs dynamic flows vs assets

Airflow centers on DAGs defined as code — great for predictable, repeatable jobs. Prefect gives you dynamic flows where runtime decisions, mapping, and stateful control are easier. Dagster reframes pipelines around assets, which is useful when you care about the lineage and versioning of datasets and model artifacts rather than just task success.
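The asset-centric idea can be sketched without any orchestrator installed. The example below is a standard-library imitation, not Dagster's API: the `asset` decorator, the `ASSETS` registry, and `materialize` are hypothetical names. It borrows one convention that Dagster does use, namely inferring an asset's upstream dependencies from its function parameter names.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}  # asset name -> function that computes it


def asset(fn):
    """Register fn as an asset; its parameter names are its upstream assets."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_orders():
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 5.0}]


@asset
def large_orders(raw_orders):
    return [o for o in raw_orders if o["amount"] >= 10]


@asset
def revenue(large_orders):
    return sum(o["amount"] for o in large_orders)


def upstream(name):
    """List the upstream asset names for an asset."""
    return list(inspect.signature(ASSETS[name]).parameters)


def materialize():
    """Compute every asset in dependency order; return values and lineage."""
    graph = {name: set(upstream(name)) for name in ASSETS}
    values, lineage = {}, {}
    for name in TopologicalSorter(graph).static_order():
        inputs = upstream(name)
        values[name] = ASSETS[name](*(values[i] for i in inputs))
        lineage[name] = inputs
    return values, lineage


values, lineage = materialize()
print(values["revenue"])   # 20.0
print(lineage["revenue"])  # ['large_orders']
```

The point of the design is that the dependency graph falls out of the data definitions themselves, so lineage is recorded as a side effect of running the pipeline rather than documented separately.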

2) Developer experience and testing

Dagster emphasizes local development and testability with a tight feedback loop, while Prefect’s Pythonic API, in which flows are ordinary decorated functions you can call from a script or REPL, makes iteration quick. Airflow historically required more CI and operational scaffolding for testing, though newer patterns and plugins have improved the local dev story.

3) Scheduling, execution, and scaling

Airflow is strong on cron-like scheduling, backfills, and complex dependency windows. Prefect supports hybrid execution models so sensitive tasks can run on-prem while the control plane is hosted. Dagster focuses on sensible parallelism around assets and can scale with Kubernetes executors. If your use case includes heavy real-time or very high concurrency workloads, verify the execution model under load.
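Backfills are easier to reason about once you see them as "run the job once per missed schedule interval". The sketch below assumes a daily schedule and a hypothetical set of already-completed dates; `daily_intervals` and `backfill` are illustrative helpers, not any tool's API.

```python
from datetime import date, timedelta


def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs covering [start, end)."""
    current = start
    while current < end:
        nxt = current + timedelta(days=1)
        yield current, nxt
        current = nxt


def backfill(start, end, already_done, run):
    """Call run(interval_start) for each daily interval not yet completed."""
    executed = []
    for interval_start, _ in daily_intervals(start, end):
        if interval_start in already_done:
            continue  # skip intervals that already succeeded
        run(interval_start)
        executed.append(interval_start)
    return executed


# Hypothetical state: January 2 already ran, January 1 and 3 were missed.
done = {date(2024, 1, 2)}
processed = []
executed = backfill(date(2024, 1, 1), date(2024, 1, 4), done, processed.append)
print(executed)
```

Passing the interval start into the job, instead of letting the job read the wall clock, is what lets the same code serve both scheduled runs and backfills.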

4) Observability and metadata

Observability is where preferences diverge. Airflow provides a mature UI and logging, but teams often augment it with external monitoring. Dagster builds metadata and lineage into its core, making it easier to answer “which dataset changed?” Prefect provides runtime introspection and SLA alerting, which is handy for detecting anomalies during execution (see the practical comparisons in the zenml showdown).
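One simple way to answer "which dataset changed?" is to record a content fingerprint per dataset on every run and compare runs. This is a rough sketch of that idea with the standard library only; the `fingerprint` and `changed_datasets` helpers and the sample rows are hypothetical, and real tools track far richer metadata than a single hash.

```python
import hashlib
import json


def fingerprint(rows):
    """Return a stable content hash for a list of JSON-serializable rows."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def changed_datasets(previous, current):
    """Names whose fingerprint is new or differs from the previous run."""
    return sorted(n for n, fp in current.items() if previous.get(n) != fp)


# Hypothetical snapshots from two consecutive runs.
run_1 = {
    "orders": fingerprint([{"id": 1, "amount": 20.0}]),
    "customers": fingerprint([{"id": 7, "name": "Ada"}]),
}
run_2 = {
    "orders": fingerprint([{"id": 1, "amount": 25.0}]),  # amount changed
    "customers": fingerprint([{"id": 7, "name": "Ada"}]),
}

changed = changed_datasets(run_1, run_2)
print(changed)  # ['orders']
```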

When to choose each orchestrator

- Choose Airflow if you have many existing integrations, need complex time-based scheduling, or require a mature ecosystem for enterprise use. Airflow is the conservative, reliable choice for production-grade DAGs.

- Choose Prefect if you want an API-driven, cloud-friendly orchestrator that supports dynamic flows and hybrid execution. It’s great for teams building modern pipelines that need runtime control and simple orchestration for cloud services.

- Choose Dagster if you’re building ML pipelines, care deeply about asset lineage and versioning, and want a pleasant developer experience with strong local testing and typed IO.

Migration and hybrid strategies

Moving orchestrators isn’t trivial, but it’s doable with an incremental approach. Consider running both systems in parallel during a migration: keep critical DAGs in the stable orchestrator while gradually porting pipelines to the new system. Focus first on idempotent tasks and data assets that have clear inputs and outputs, since those can be re-run safely if a cutover goes wrong. Use adapters or small wrapper operators to maintain compatibility with external systems during the transition.
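Idempotency is what makes a parallel run safe: if both orchestrators execute the same load step, the result should be the same as running it once. A minimal sketch, assuming a hypothetical `daily_sales` table keyed by day and an in-memory SQLite database standing in for the warehouse:

```python
import sqlite3


def load_daily_total(conn, day, total):
    """Write one row per day; re-running replaces the row instead of duplicating it."""
    conn.execute(
        "INSERT OR REPLACE INTO daily_sales (day, total) VALUES (?, ?)",
        (day, total),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (day TEXT PRIMARY KEY, total REAL)")

# Simulate the old and the new orchestrator both running the same task.
load_daily_total(conn, "2024-01-01", 100.0)
load_daily_total(conn, "2024-01-01", 100.0)

rows = conn.execute("SELECT day, total FROM daily_sales").fetchall()
print(rows)  # [('2024-01-01', 100.0)]
```

A plain `INSERT` here would fail on the primary key or, without the key, double-count the day, which is exactly the kind of task to fix before porting it.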

Costs, ops, and ecosystem

Open-source and cloud-managed offerings change the total cost of ownership. Airflow deployment options range from self-hosted Apache Airflow to commercial and managed offerings such as Astronomer and cloud-provider managed Airflow services, and its community operators cover a wide range of integrations. Prefect offers a managed cloud control plane plus an open-source core, while Dagster also has a hosted option and an opinionated open-source framework. Consider the operational skillset on your team and whether self-hosting, managed control planes, or vendor support match your compliance posture.

Common challenges and how to manage them

- Dependency sprawl: Large DAGs or complex asset graphs can become brittle. Break DAGs into smaller, testable units and prefer explicit asset definitions when possible.

- Observability gaps: Missing metadata makes debugging slow. Standardize logging, add lineage capture, and wire orchestration alerts into your incident channels.

- Testing pipelines: Write unit tests for task logic and integration tests for orchestration behavior. Leverage local execution modes provided by Prefect and Dagster to iterate quickly.

- Team buy-in: Migration is as much cultural as technical. Run brown-bag sessions, document patterns, and create starter templates for common pipeline types.
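For the testing point above, the cheapest win is keeping task logic in plain functions that can be tested without any orchestrator running. The sketch below uses a hypothetical `aggregate_sales` function and pytest-style test functions; the orchestrator-specific wrapper (operator, task, or asset) would call this function and stay thin.

```python
def aggregate_sales(rows):
    """Sum amounts per region; rows with a missing amount are skipped."""
    totals = {}
    for row in rows:
        if row.get("amount") is None:
            continue
        totals[row["region"]] = totals.get(row["region"], 0) + row["amount"]
    return totals


def test_sums_per_region():
    rows = [
        {"region": "eu", "amount": 10},
        {"region": "eu", "amount": 5},
        {"region": "us", "amount": 7},
    ]
    assert aggregate_sales(rows) == {"eu": 15, "us": 7}


def test_skips_missing_amounts():
    rows = [{"region": "eu", "amount": None}, {"region": "eu", "amount": 3}]
    assert aggregate_sales(rows) == {"eu": 3}


def test_empty_input():
    assert aggregate_sales([]) == {}
```

Integration tests for scheduling, retries, and dependency wiring still need the orchestrator itself, but they can be fewer and slower once the business logic is covered this way.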

Trends to watch

- Asset-first orchestration is growing, especially for ML and analytics teams that need lineage and dataset versioning.

- Hybrid execution and zero-trust designs will shape how teams run sensitive tasks on-prem while using cloud control planes for coordination.

- Stronger developer ergonomics and local testing support will tilt new projects toward tools that reduce friction in iteration cycles.

FAQ

What is data orchestration vs ETL?

Data orchestration is the coordination layer that manages when, where, and how data tasks run and how their outputs flow between systems. ETL (extract, transform, load) is a specific pattern of data movement and transformation. Orchestration manages ETL jobs along with other tasks like model training, monitoring, and downstream notifications — think of ETL as a cargo train and orchestration as the railroad network and timetable.

What is the difference between data orchestration and data integration?

Data integration focuses on combining data from different sources into a coherent target (for example, a data warehouse), often handling schema mapping and transformation. Orchestration focuses on scheduling, dependency management, retries, and the logic that runs those integration tasks. Integration is about the data; orchestration is about when and how integration jobs execute.

What is the best data orchestration tool?

There’s no one-size-fits-all best tool. Airflow is often best for complex, time-based production workflows; Prefect shines for cloud-native, dynamic flows; Dagster is excellent when asset lineage and developer ergonomics matter. The best choice depends on your team’s skills, operational constraints, and the nature of your pipelines — pilot each tool with a representative workload before committing.

What is an orchestration framework?

An orchestration framework is a software system that defines, schedules, and monitors workflows. It provides APIs or DSLs for authors to define tasks and dependencies, an execution engine to run work, and UIs or APIs to visualize runs and handle failures. Frameworks may be more opinionated (asset-first) or more general-purpose (task graphs).

What is a data orchestration example?

A common example: a nightly pipeline that extracts sales data from multiple sources, transforms and aggregates it, updates a reporting table in a data warehouse, triggers model retraining if data drift is detected, and alerts stakeholders when thresholds are crossed. The orchestrator manages ordering, retries, parallelism, and notifications across those steps.