Data teams are increasingly asked to deliver trustworthy, timely data to decision-makers, and doing that at scale feels a bit like trying to herd very opinionated cats. Two concepts that help tame the chaos are data contracts and data SLAs (Service Level Agreements). They sound similar, and they overlap, but they serve different roles in a healthy data ecosystem. In this article you’ll learn what each one is, how they work together, where they differ, and practical steps to implement them so your data behaves like the reliable team player it’s supposed to be.

Why this matters

As organizations rely more on analytics, machine learning, and automated workflows, the cost of bad data goes up fast — wrong decisions, failed models, and angry stakeholders. Data contracts and data SLAs are governance tools that reduce surprises. Contracts define expectations between data producers and consumers, while SLAs quantify performance and reliability. Together, they help teams move fast without leaving quality behind.

What is a data contract?

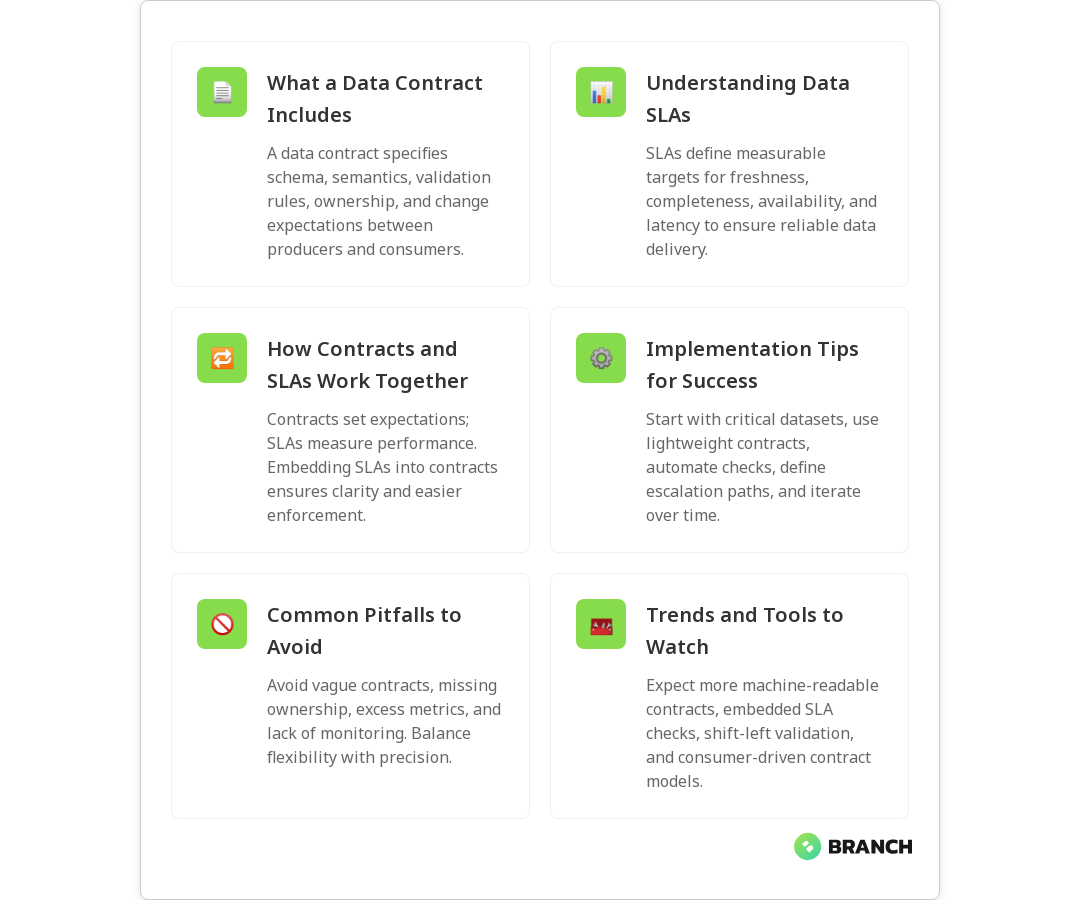

At its simplest, a data contract is an agreement — often machine-readable — between the team producing a dataset and the team consuming it. It specifies expectations such as schema, semantics, ownership, and quality rules. A good contract answers the “what” and “who”: what fields are required, what each field means, what validation to run, and who owns fixes.

Data contracts can be as lightweight as a documented schema and ownership list or as systematic as code-driven, machine-enforced specifications. For a practical walkthrough and best practices, see the Monte Carlo guide and the DataCamp primer.

Core elements of a data contract

- Schema definition (field types, nullability)

- Semantic definitions (what a column actually means)

- Validation rules (ranges, formats, referential integrity)

- Ownership and contact points

- Expectations for updates or change management
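To make the list above concrete, here is a minimal sketch of a contract expressed as code, assuming a plain-Python setup rather than any particular contract tool. The `DataContract` and `FieldSpec` classes, the `orders` dataset, and the owner addresses are all hypothetical. Validation rules are modeled as simple per-column predicates, so referential-integrity checks (which need access to other tables) are left out.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass(frozen=True)
class FieldSpec:
    """Schema plus semantics for one column (hypothetical structure)."""
    dtype: type
    nullable: bool
    description: str  # semantic definition: what the column actually means


@dataclass
class DataContract:
    """A hypothetical machine-readable contract for one dataset."""
    name: str
    version: str       # bumped on change, which supports change management
    owner: str         # who fixes problems
    backup_owner: str
    fields: dict[str, FieldSpec]
    # Validation rules: column name -> predicate that must hold for non-null values
    rules: dict[str, Callable[[Any], bool]] = field(default_factory=dict)

    def validate(self, record: dict[str, Any]) -> list[str]:
        """Return the contract violations for one record; an empty list means valid."""
        errors: list[str] = []
        for col, spec in self.fields.items():
            value = record.get(col)
            if value is None:
                if not spec.nullable:
                    errors.append(f"{col}: required field is missing or null")
                continue
            if not isinstance(value, spec.dtype):
                errors.append(f"{col}: expected {spec.dtype.__name__}, got {type(value).__name__}")
                continue
            rule = self.rules.get(col)
            if rule is not None and not rule(value):
                errors.append(f"{col}: value {value!r} failed validation rule")
        return errors


orders = DataContract(
    name="orders",
    version="1.0.0",
    owner="checkout-team@example.com",
    backup_owner="data-platform@example.com",
    fields={
        "order_id": FieldSpec(str, nullable=False, description="Unique order identifier"),
        "amount_usd": FieldSpec(float, nullable=False, description="Order total in US dollars"),
        "coupon_code": FieldSpec(str, nullable=True, description="Promotion code applied, if any"),
    },
    rules={"amount_usd": lambda v: v >= 0},  # range rule: no negative totals
)

print(orders.validate({"order_id": "A1", "amount_usd": 19.99}))  # []
print(orders.validate({"order_id": None, "amount_usd": -5.0}))
```

Keeping `version` inside the contract gives change management something concrete to hang on: a breaking schema change can be required to bump the version before it ships.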

What is a data SLA?

Data SLAs quantify the level of service data must provide. Borrowing the language of operations, SLAs define measurable targets for things like freshness (how up-to-date data must be), availability (what share of the time a dataset is accessible and queryable), completeness (what percentage of expected records must be present), and latency (how long consumers must wait for new data).

SLAs make expectations objective and testable. Instead of saying “data should be fresh,” an SLA says “95% of records must be updated within 30 minutes of the source event.” A common recommendation is to embed SLAs in the contract itself; DataCamp gives examples of freshness and latency guarantees written into contracts, and Alation discusses productized forms of the same idea.
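Here is one way to evaluate that example SLA, sketched under the assumption that each record carries both its source event time and the time it was loaded. The function names and the sample data are hypothetical.

```python
from datetime import datetime, timedelta


def freshness_compliance(
    pairs: list[tuple[datetime, datetime]],
    max_lag: timedelta = timedelta(minutes=30),
) -> float:
    """Share of (source_event_time, load_time) pairs loaded within max_lag of the event."""
    if not pairs:
        return 1.0  # assumption: an empty window counts as fully compliant
    on_time = sum(1 for event_ts, loaded_ts in pairs if loaded_ts - event_ts <= max_lag)
    return on_time / len(pairs)


def sla_met(
    pairs: list[tuple[datetime, datetime]],
    target: float = 0.95,
    max_lag: timedelta = timedelta(minutes=30),
) -> bool:
    """True when at least `target` of records meet the freshness window."""
    return freshness_compliance(pairs, max_lag) >= target


# Hypothetical sample: 20 records, 18 loaded after 10 minutes and 2 after 45 minutes.
t0 = datetime(2024, 1, 1, 12, 0)
pairs = [(t0, t0 + timedelta(minutes=10))] * 18 + [(t0, t0 + timedelta(minutes=45))] * 2
print(freshness_compliance(pairs))  # 0.9
print(sla_met(pairs))               # False
```

The comparison here is inclusive: a record loaded exactly 30 minutes after its event counts as on time. Whichever convention you pick, write it into the SLA so producers and consumers measure the same thing.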

Common SLA metrics

- Freshness: time since last successful update

- Availability: percent uptime or query success rate

- Completeness: proportion of expected records present

- Accuracy: error rates or anomaly counts

- Latency: processing time for streaming or batch pipelines
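Most of these metrics reduce to simple ratios or time differences once you have the underlying counts. The helpers below are a hypothetical sketch; in practice the inputs (last successful load time, query logs, an expected row count from the source system) would come from your orchestrator or warehouse metadata, and latency is computed much like freshness, per record or per batch.

```python
from datetime import datetime, timezone


def freshness_minutes(last_success: datetime, now: datetime) -> float:
    """Freshness: minutes elapsed since the last successful update."""
    return (now - last_success).total_seconds() / 60


def availability(successful_queries: int, total_queries: int) -> float:
    """Availability: share of queries that succeeded (no traffic is treated as available)."""
    return successful_queries / total_queries if total_queries else 1.0


def completeness(actual_rows: int, expected_rows: int) -> float:
    """Completeness: share of expected records present, capped at 1.0."""
    return min(actual_rows / expected_rows, 1.0) if expected_rows else 1.0


def error_rate(failed_rows: int, rows_checked: int) -> float:
    """Accuracy proxy: share of checked rows that failed a validation rule."""
    return failed_rows / rows_checked if rows_checked else 0.0


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(freshness_minutes(datetime(2024, 1, 1, 11, 15, tzinfo=timezone.utc), now))  # 45.0
print(availability(995, 1000))       # 0.995
print(completeness(9_800, 10_000))   # 0.98
print(error_rate(12, 10_000))        # 0.0012
```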

Data contracts vs. data SLAs — how they differ (and overlap)

People often use the terms interchangeably, but they are complementary. Think of a data contract as the playbook describing how teams should behave and an SLA as the scoreboard showing whether those behaviors meet agreed levels. Contracts set expectations (schema, ownership, validation), and SLAs put measurable thresholds on aspects like freshness and availability.

Overlap exists when contracts embed SLAs as explicit clauses. That’s a best practice: it prevents ambiguity and makes enforcement easier because the SLA lives next to the schema and validation rules.
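A minimal sketch of what that looks like, assuming the contract is stored as plain data (it could just as well be loaded from YAML or JSON): the SLA clauses sit beside the schema, and a small evaluator checks each clause against observed metrics. The dataset name, owner, thresholds, and `evaluate_slas` helper are hypothetical.

```python
# The SLA clauses live in the same document as the schema and ownership.
contract = {
    "dataset": "core_user_events",
    "owner": "growth-data@example.com",
    "schema": {"user_id": "string", "event_type": "string", "event_ts": "timestamp"},
    "slas": {
        "freshness_minutes": {"max": 30},
        "completeness": {"min": 0.99},
        "availability": {"min": 0.995},
    },
}


def evaluate_slas(contract: dict, observed: dict[str, float]) -> dict[str, bool]:
    """Check each SLA clause in the contract against an observed metric value."""
    results: dict[str, bool] = {}
    for metric, bounds in contract["slas"].items():
        value = observed.get(metric)
        if value is None:
            results[metric] = False  # a missing measurement is treated as a breach
            continue
        ok = True
        if "max" in bounds:
            ok = ok and value <= bounds["max"]
        if "min" in bounds:
            ok = ok and value >= bounds["min"]
        results[metric] = ok
    return results


print(evaluate_slas(contract, {"freshness_minutes": 42.0, "completeness": 0.995}))
# {'freshness_minutes': False, 'completeness': True, 'availability': False}
```

Treating a missing measurement as a breach is a deliberate choice: if the monitor itself fails, you want to hear about it rather than assume all is well.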

A quick analogy

Imagine a restaurant. The data contract is the menu and kitchen rules (what ingredients, how a dish is prepared, who’s responsible). The SLA is the promise that your food will arrive within 20 minutes and be hot and complete. Both are required for a happy diner.

Strategies for implementing data contracts and SLAs

Practical rollout plans prioritize incremental adoption and automation. Here’s a pragmatic roadmap that doesn’t require a team of data lawyers:

- Identify critical datasets: start where business impact is highest (billing tables, core user events, model features).

- Draft lightweight contracts: include schema, owner, acceptable nulls, and one or two key SLAs (freshness, completeness).

- Automate checks: implement schema validation and SLA monitoring in CI/CD or data quality pipelines. Tools such as dbt's source freshness checks or dedicated monitoring platforms help here; see implementation notes from Monte Carlo.

- Set escalation paths: define what happens when an SLA is missed — alerts, mitigation steps, and root cause ownership.

- Iterate and expand: use metrics from monitoring to refine SLAs and broaden contract coverage.
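Escalation paths are easiest to enforce when they are written down as data rather than tribal knowledge. The sketch below assumes a simple two-tier policy: the owner hears about every breach, and a backup is added once the breach has stayed open past a grace period. The `Escalation` class, the addresses, the runbook URL, and the one-hour window are hypothetical; a real setup would hand the recipient list to your alerting tool.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class Escalation:
    """Hypothetical escalation policy attached to a contract."""
    owner: str                 # owns the fix and the root-cause analysis
    backup: str                # notified if the breach stays open too long
    escalate_after: timedelta  # grace period before the backup is notified
    runbook: str               # where the mitigation steps live


def who_to_notify(policy: Escalation, breach_open_for: timedelta) -> list[str]:
    """Owner hears about every breach; the backup is added once the grace period has passed."""
    recipients = [policy.owner]
    if breach_open_for >= policy.escalate_after:
        recipients.append(policy.backup)
    return recipients


billing = Escalation(
    owner="billing-data@example.com",
    backup="data-platform-oncall@example.com",
    escalate_after=timedelta(hours=1),
    runbook="https://wiki.example.com/runbooks/billing-freshness",
)
print(who_to_notify(billing, timedelta(minutes=20)))  # ['billing-data@example.com']
print(who_to_notify(billing, timedelta(hours=2)))     # ['billing-data@example.com', 'data-platform-oncall@example.com']
```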

Common challenges and how to avoid them

Rolling out contracts and SLAs is not always smooth. Here are common pitfalls and practical fixes:

- Too rigid or too fuzzy: Contracts that are overly strict block change; vague contracts create confusion. Balance specificity with flexibility — use versioning and change notifications.

- No monitoring: Contracts without enforcement are aspirational. Automate tests and evaluate SLAs against production metadata.

- Lack of ownership: If nobody owns a dataset, nobody fixes it. Assign clear owners in the contract with backup contacts.

- Metric overload: Tracking too many SLAs creates alert fatigue. Focus on a small set of high-impact metrics.

- Unclear remediation: Define triage steps, and tie penalties or incentives to service level objectives (SLOs), the internal targets behind each SLA, so that someone acts when an SLA breaks.
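Versioning is what keeps contracts from being either frozen or fuzzy. One common convention, borrowed from semantic versioning and assumed here rather than prescribed by any particular tool, is to treat removed columns, type changes, and relaxed nullability as breaking (major) changes and other differences such as added columns as minor ones. The helpers and schemas below are hypothetical.

```python
Schema = dict[str, dict]  # column -> {"type": str, "nullable": bool}


def classify_change(old: Schema, new: Schema) -> str:
    """Return "major" for breaking changes, "minor" for compatible ones, "none" if unchanged."""
    for col, spec in old.items():
        if col not in new:
            return "major"  # a removed column breaks consumers that read it
        if new[col]["type"] != spec["type"]:
            return "major"  # a type change can break downstream parsing
        if not spec["nullable"] and new[col]["nullable"]:
            return "major"  # consumers may rely on the column never being null
    # Anything else that differs is treated as compatible (added columns, tightened nullability).
    if set(new) - set(old) or any(new[col] != old[col] for col in old):
        return "minor"
    return "none"


def bump(version: str, change: str) -> str:
    """Apply a semver-style bump to a "MAJOR.MINOR.PATCH" contract version."""
    major, minor, _patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    return version


v1 = {
    "order_id": {"type": "string", "nullable": False},
    "amount": {"type": "float", "nullable": False},
}
with_currency = {**v1, "currency": {"type": "string", "nullable": True}}
amount_as_text = {**v1, "amount": {"type": "string", "nullable": False}}

print(bump("1.2.0", classify_change(v1, with_currency)))   # 1.3.0
print(bump("1.2.0", classify_change(v1, amount_as_text)))  # 2.0.0
```

A CI job could run this check on every proposed contract change and block merges that make a breaking change without a major version bump and a change notification to consumers.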

Tools and trends to watch

The tooling landscape is evolving quickly. A few notable trends:

- Machine-readable contracts: Tools that store contracts as code (YAML/JSON) allow automated enforcement and drift detection; see the machine-readable approach discussed by Hevo Academy.

- Embedded SLA checks: Modern pipelines include freshness and completeness checks as part of deployment; many teams leverage data warehouse metadata and timestamps to validate SLAs, as noted in Monte Carlo’s warehouse guidance.

- Shift-left validation: Run schema and quality tests earlier in the pipeline to prevent bad data from reaching consumers.

- Consumer-driven contracts: This approach builds the contract around what downstream consumers need rather than around producer convenience.
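Consumer-driven contracts can be checked mechanically: each consumer declares the columns and types it depends on, and the producer's schema is tested against every declaration. This is a hypothetical sketch; the consumer names, schemas, and `unmet_expectations` helper are illustrative.

```python
def unmet_expectations(
    producer_schema: dict[str, str],
    consumer_needs: dict[str, dict[str, str]],
) -> dict[str, list[str]]:
    """For each consumer, list required columns the producer does not provide with the expected type."""
    gaps: dict[str, list[str]] = {}
    for consumer, needs in consumer_needs.items():
        missing = [f"{col} ({typ})" for col, typ in needs.items() if producer_schema.get(col) != typ]
        if missing:
            gaps[consumer] = missing
    return gaps


producer = {"user_id": "string", "event_type": "string", "event_ts": "timestamp"}
consumers = {
    "churn_model": {"user_id": "string", "event_ts": "timestamp"},
    "marketing_dashboard": {"user_id": "string", "campaign_id": "string"},
}
print(unmet_expectations(producer, consumers))  # {'marketing_dashboard': ['campaign_id (string)']}
```

Running this check whenever the producer proposes a schema change shows exactly which consumers would be affected before anything reaches production.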

Practical checklist to get started

- Choose 2–3 critical datasets and document owners and intents.

- Create a simple contract: schema, semantics, and one SLA each for freshness and completeness.

- Automate validation using your existing tools (dbt, tests, monitoring dashboards).

- Define alerting thresholds and remediation playbooks.

- Run a monthly review to tune SLAs and expand contract coverage.
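For the monthly review, one possible heuristic (an assumption, not an industry standard) is to derive a freshness threshold from observed lag: take a high percentile of recent lags and add some headroom so normal variation doesn't trigger alerts. The function name, the 95th percentile, the 20% margin, and the sample lags below are all hypothetical knobs.

```python
import math


def suggest_freshness_threshold(
    observed_lags_min: list[float],
    percentile: float = 0.95,
    headroom: float = 1.2,
) -> int:
    """Suggest a freshness SLA in whole minutes: nearest-rank percentile of observed lag times a margin."""
    if not observed_lags_min:
        raise ValueError("need at least one observed lag")
    if not 0 < percentile <= 1:
        raise ValueError("percentile must be in (0, 1]")
    ordered = sorted(observed_lags_min)
    rank = math.ceil(percentile * len(ordered))  # nearest-rank method, 1-based
    return math.ceil(ordered[rank - 1] * headroom)


# Hypothetical lags (minutes) from 20 recent daily loads, including one outlier.
lags = [12, 14, 15, 15, 16, 17, 18, 18, 19, 20, 21, 21, 22, 23, 24, 25, 27, 30, 34, 55]
print(suggest_freshness_threshold(lags))  # 41
```

Compare the suggestion with what consumers actually need: if the data must be fresher than the pipeline can reliably deliver, that is a conversation about the pipeline, not a reason to loosen the SLA.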

FAQ

What does data quality mean?

Data quality measures how well data meets consumer needs. It includes accuracy, completeness, timeliness, validity, and consistency to ensure trust and reliability.

How do you measure data quality?

By using metrics such as completeness percentage, freshness latency, error rates, and accuracy checks. SLAs attach target thresholds to these metrics, which makes them enforceable.

What are the data quality objectives?

They are target levels for quality aligned to business needs — for example, “95% of events processed within 15 minutes.” Objectives should be specific and measurable.

What are the 7 dimensions of data quality?

Frameworks differ, but a commonly cited set of seven is accuracy, completeness, consistency, timeliness, validity, uniqueness, and integrity. These dimensions guide which checks to write and highlight problem areas.

How do you ensure data quality?

Through governance, contracts, and SLAs. Automate validation and monitoring, assign ownership, and define remediation workflows with regular audits and feedback loops.

Conclusion

Data contracts and data SLAs each play a distinct role in ensuring data quality at scale: contracts set expectations and ownership, while SLAs make performance measurable. When you combine clear, machine-readable contracts with focused, automated SLAs, teams can scale confidently and spend less time firefighting and more time delivering insight. Start small, automate where possible, and iterate based on real-world feedback — your data consumers will thank you, and the chaos of opinionated data cats will subside (mostly).