Data quality is the unsung hero of reliable analytics, AI models, and production reporting — and when it fails, no one forgets. This article walks through three popular open-source tools for data quality testing — Great Expectations, Deequ, and Soda — so you can make a practical choice for your pipelines. You’ll get a comparison of capabilities, real-world trade-offs, deployment tips, and guidance on when to pick each tool depending on scale, team skills, and use cases.

Why data quality testing matters (and why you should care)

Bad data sneaks into systems every day: schema drift after a vendor changes a feed, null-filled records from a flaky ingest job, or subtle distribution shifts that silently poison a model. Data quality testing helps you detect and remediate these problems before they become business incidents. ThoughtWorks calls data quality “the Achilles heel of data products,” arguing that constraint-based testing and monitoring are essential parts of modern data delivery workflows (ThoughtWorks article).

At a high level, data quality testing does two things:

- Verify that incoming and transformed data meet expectations (validation in development and CI/CD).

- Continuously monitor production data for regressions or anomalies (observability and alerting).
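The example below makes the distinction concrete. It is a minimal plain-Python sketch with no library involved, and `check_nulls`, `CheckResult` and `DataQualityError` are hypothetical names. The same null-fraction check either blocks a pipeline step or only records its result for later alerting, depending on the mode it runs in.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    observed: float


class DataQualityError(Exception):
    """Raised in 'validate' mode so the pipeline step or CI job stops."""


def null_fraction(rows: list[dict], column: str) -> float:
    """Share of rows whose `column` value is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is None) / len(rows)


def check_nulls(rows, column, max_fraction, mode, history):
    """Run one null-fraction check in 'validate' or 'monitor' mode."""
    observed = null_fraction(rows, column)
    result = CheckResult(
        name=f"null_fraction({column}) <= {max_fraction}",
        passed=observed <= max_fraction,
        observed=observed,
    )
    history.append(result)  # both modes keep the metric for trend analysis
    if mode == "validate" and not result.passed:
        # Blocking: stop before bad data moves downstream.
        raise DataQualityError(f"{result.name} failed (observed {observed:.2f})")
    # 'monitor' mode never blocks; an alerting job reads `history` instead.
    return result
```

How each mode is used:
- In CI you would typically pass `mode="validate"`, so a failing check stops the job.
- A scheduled production job would pass `"monitor"` and leave escalation to the alerting layer.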

Quick introductions: Great Expectations, Deequ, and Soda

Before we dig into comparisons, here’s a brief primer on each tool so we’re all speaking the same language.

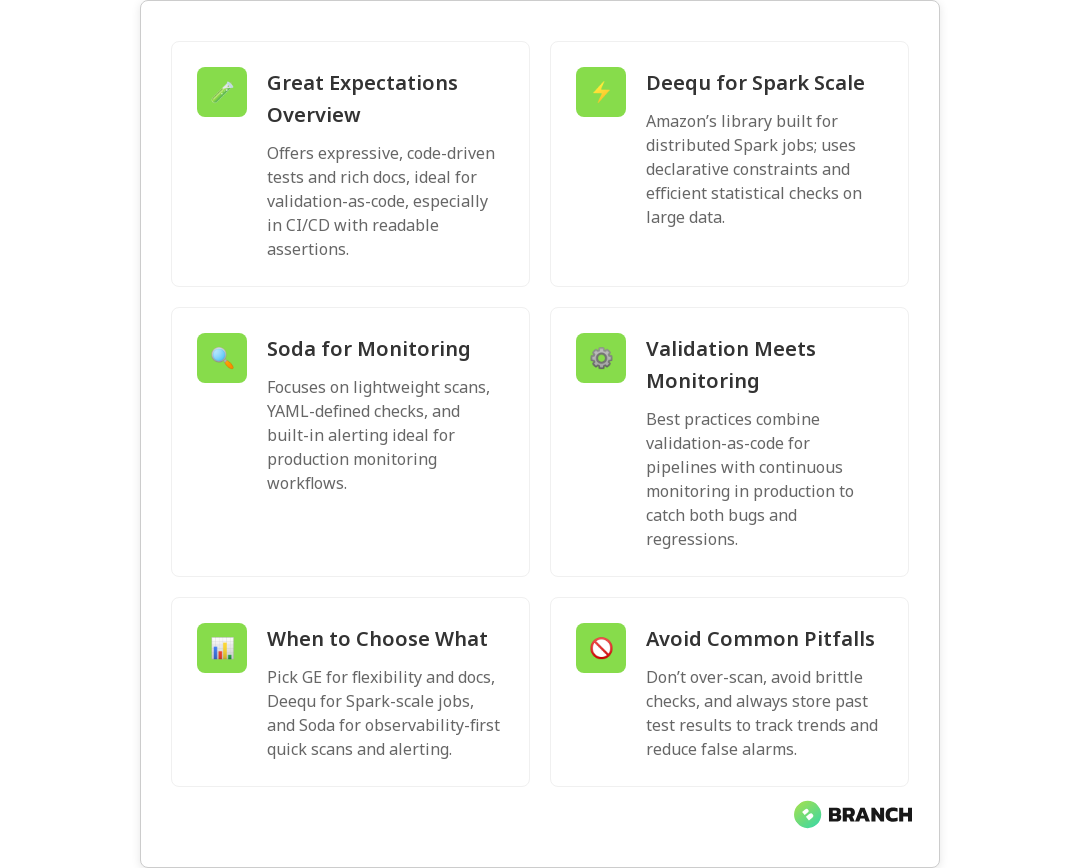

- Great Expectations (GE) — Validation-as-code focused on expressive expectations, rich documentation, and data profiling. GE emphasizes human-readable expectations and renders "Data Docs," HTML reports that show which expectations were run and whether they passed. It’s especially popular with teams that want clear assertions and documentation as part of their pipelines.

- Deequ — A library from Amazon for Spark-native data quality checks. Deequ is implemented in Scala and provides constraint- and metric-based validation that runs well on large distributed datasets. If your pipelines are Spark-heavy and you prioritize performance at scale, Deequ is worth a look.

- Soda (Soda Core / Soda Cloud) — Soda Core is a lightweight, open-source scanner that runs checks defined in YAML (SodaCL). Soda Cloud, the commercial platform, adds hosted monitoring and alerting on top. Together they offer templated checks, monitoring, and alerting. Soda’s strength is a pragmatic approach to scanning and to time-series monitoring of metrics, with easy alert integrations.

Head-to-head comparison: what matters to engineering teams

Picking a tool is about matching features to constraints: pipeline technologies, team experience, scale, governance needs, and whether you want a heavy UI or prefer code-first checks. Below are the most important comparison dimensions.

1. Validation model and expressiveness

Great Expectations shines at expressive, human-readable expectations: completeness, uniqueness, value ranges, custom checks, and complex expectations composed from simpler ones. Telm.ai summarizes common GE metrics like completeness, uniqueness, timeliness, validity, and consistency (Telm.ai overview).
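As a rough illustration of expressive, composable expectations, the sketch below reimplements three checks in plain Python. The function names mirror three of GE's built-in expectation types, but everything else is an assumption for illustration and not the GE API:
- the signatures,
- the result dictionaries,
- the `all_of` combinator.

```python
from typing import Any, Callable

Result = dict[str, Any]


def expect_column_values_to_not_be_null(rows: list[dict], column: str) -> Result:
    bad = [i for i, r in enumerate(rows) if r.get(column) is None]
    return {"expectation": f"{column} is never null", "success": not bad, "unexpected_rows": bad}


def expect_column_values_to_be_unique(rows: list[dict], column: str) -> Result:
    seen, bad = set(), []
    for i, r in enumerate(rows):
        value = r.get(column)
        if value is None:
            continue  # nulls are left to the not-null expectation
        if value in seen:
            bad.append(i)
        seen.add(value)
    return {"expectation": f"{column} values are unique", "success": not bad, "unexpected_rows": bad}


def expect_column_values_to_be_between(rows, column, min_value, max_value) -> Result:
    bad = [
        i for i, r in enumerate(rows)
        if r.get(column) is not None and not (min_value <= r[column] <= max_value)
    ]
    return {
        "expectation": f"{column} is between {min_value} and {max_value}",
        "success": not bad,
        "unexpected_rows": bad,
    }


def all_of(description: str, *parts: Callable[[], Result]) -> Result:
    """Compose simpler expectations into one readable, higher-level expectation."""
    results = [part() for part in parts]
    return {
        "expectation": description,
        "success": all(r["success"] for r in results),
        "parts": results,
    }
```

A compound check such as "id is a valid primary key" can then be written as `all_of(...)` over the not-null and unique expectations. Each part keeps its own readable description, so a failure report says exactly which rule broke.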

Deequ focuses on declarative constraints and statistical metrics (e.g., approximate quantiles, distribution comparisons) that are efficient in Spark. Soda provides a templated, YAML-driven approach that covers common checks quickly but can be less flexible for very bespoke validations.
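Deequ separates computing a metric from asserting on it: a constraint is a predicate applied to a metric value rather than to individual rows. The sketch below shows that shape in plain Python with two metrics, completeness and a distribution distance.

The distance used here is the L-infinity distance between category frequencies, one common drift measure. It is an assumption of this sketch and not a claim about which statistic Deequ uses internally.

```python
from collections import Counter


def completeness(values: list) -> float:
    """Fraction of non-null values (1.0 for an empty column)."""
    return sum(v is not None for v in values) / len(values) if values else 1.0


def category_distribution(values: list) -> dict:
    """Relative frequency of each non-null category."""
    counts = Counter(v for v in values if v is not None)
    total = sum(counts.values())
    return {k: c / total for k, c in counts.items()} if total else {}


def linf_distance(p: dict, q: dict) -> float:
    """Largest absolute difference in frequency for any category."""
    keys = set(p) | set(q)
    return max((abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys), default=0.0)


# Constraints are predicates over metric values, not over individual rows.
CONSTRAINTS = {
    "completeness >= 0.95": lambda m: m["completeness"] >= 0.95,
    "distribution distance <= 0.2": lambda m: m["linf_vs_baseline"] <= 0.2,
}


def verify(values: list, baseline: list) -> tuple[dict, dict]:
    # Step 1: compute metrics (the expensive, data-touching part).
    metrics = {
        "completeness": completeness(values),
        "linf_vs_baseline": linf_distance(
            category_distribution(values), category_distribution(baseline)
        ),
    }
    # Step 2: evaluate cheap assertions against the computed metrics.
    return metrics, {name: check(metrics) for name, check in CONSTRAINTS.items()}
```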

2. Scalability and runtime

If your workloads run on Spark and you need checks to scale with big tables, Deequ’s Spark-native implementation gives it an edge. Great Expectations has Spark integration too, but Deequ is engineered specifically for distributed computation.
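Part of what makes metric-based checks scale is that many metrics can be built from small partial states that merge associatively. Each partition is summarized independently, and only the summaries are combined.

The sketch below illustrates the idea for completeness with a hypothetical `CompletenessState` class. Deequ's own state handling is more elaborate, so treat this as the general principle rather than its implementation.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class CompletenessState:
    """Partial state for a completeness metric: just two counters."""
    non_null: int
    total: int

    def merge(self, other: "CompletenessState") -> "CompletenessState":
        # Associative and commutative, so partitions can be combined in any order.
        return CompletenessState(self.non_null + other.non_null, self.total + other.total)

    def metric(self) -> float:
        return self.non_null / self.total if self.total else 1.0


def state_for_partition(values: list) -> CompletenessState:
    """Summarize one partition; on a cluster this would run on each executor."""
    return CompletenessState(sum(v is not None for v in values), len(values))


partitions = [[1, None, 3], [4, 5], [None, None, 8, 9]]
overall = reduce(CompletenessState.merge, (state_for_partition(p) for p in partitions))
```

Two design points:
- Merging raw counts, rather than averaging per-partition percentages, keeps the result exact when partitions differ in size.
- The same `merge` can fold a new partition into a stored state without rescanning older data.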

Soda is lightweight and can scan tables efficiently, but for very large datasets you’ll want to plan where scans run (e.g., within your cluster) and how frequently you scan to control costs.
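One common way to control scan cost is to check only rows that arrived since the last run, tracked by a high-water mark on a timestamp column. The sketch below makes these assumptions:
- each row carries an `updated_at` value;
- the watermark is kept in a plain dict.

In a warehouse the filter would become a `WHERE` clause so the database does the work. All names here are hypothetical and not part of Soda's configuration.

```python
from datetime import datetime
from typing import Callable, Optional


def incremental_scan(
    rows: list[dict],
    state: dict,
    check: Callable[[list[dict]], bool],
    ts_column: str = "updated_at",
) -> Optional[bool]:
    """Run `check` only on rows newer than the stored high-water mark."""
    watermark = state.get("watermark")
    new_rows = [r for r in rows if watermark is None or r[ts_column] > watermark]
    if not new_rows:
        return None  # nothing new since the last scan
    result = check(new_rows)
    # Advance the watermark so the next run skips rows already checked.
    state["watermark"] = max(r[ts_column] for r in new_rows)
    return result


def no_negative_amounts(rows: list[dict]) -> bool:
    return all(r["amount"] >= 0 for r in rows)


rows = [
    {"updated_at": datetime(2024, 1, 1), "amount": 5},
    {"updated_at": datetime(2024, 1, 2), "amount": -1},
]
state: dict = {}
first = incremental_scan(rows, state, no_negative_amounts)  # first run scans both rows
```

This version advances the watermark even when the check fails, so failed rows are not rescanned. A stricter variant would hold the watermark until the failure is resolved.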

3. Observability, alerting, and documentation

Soda and Soda Cloud emphasize observability and alerting with templated monitors, time-series metric storage, and integrations for alerting. Great Expectations excels at documentation — auto-generated “data docs” explain expectations and test results in rich detail. ThoughtWorks highlights the value of integrating monitoring and alerting to ensure continuous data product health (ThoughtWorks article).
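Time-series monitoring comes down to storing each run's metric and comparing the newest value against its history. The sketch below applies a simple z-score rule over the stored series. The following are illustrative assumptions, and this is not how Soda Cloud's anomaly detection is implemented:
- the threshold `k=3.0`,
- the `min_points` warm-up,
- the in-memory `store`.

```python
from statistics import mean, stdev
from typing import Callable


def is_anomalous(history: list[float], latest: float, k: float = 3.0, min_points: int = 5) -> bool:
    """Flag `latest` if it is more than k standard deviations from the history mean."""
    if len(history) < min_points:
        return False  # not enough history to form a baseline yet
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is notable
    return abs(latest - mu) > k * sigma


def record_and_alert(store: dict, metric: str, value: float, notify: Callable[[str], None]) -> bool:
    """Compare a new measurement with stored history, alert if anomalous, then store it."""
    series = store.setdefault(metric, [])
    anomalous = is_anomalous(series, value)
    if anomalous:
        notify(f"{metric}={value} deviates from its recent history")
    series.append(value)
    return anomalous
```

A production setup would usually restrict the baseline to a trailing window and account for weekly seasonality. A plain z-score over all history is only a starting point.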

4. Configuration and developer experience

Great Expectations encourages validation-as-code: write expectations in Python, keep the resulting suites (serialized as JSON) in version control, and run them in CI. Soda uses SodaCL (YAML) for quick, consistent configuration across teams. Deequ is code-first (Scala, or Python via the PyDeequ wrapper). That suits Spark engineers but can be less approachable for smaller teams without Scala skills. A Medium comparative analysis highlights these distinctions and suggests choosing tools based on pipeline complexity and team expertise (Medium comparison).
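A declarative format keeps checks consistent across teams. To show why, here is a toy interpreter for check strings loosely modeled on SodaCL's `metric(column) = value` shape.

It is not Soda's parser. It supports only three metrics, and their semantics are this sketch's own simplified definitions; for example, `duplicate_count` here counts extra occurrences of non-null values.

```python
import operator
import re

OPS = {"=": operator.eq, ">": operator.gt, "<": operator.lt, ">=": operator.ge, "<=": operator.le}
# metric_name, optional (column), comparison operator, integer threshold
CHECK_RE = re.compile(r"^(\w+)(?:\((\w+)\))?\s*(>=|<=|=|>|<)\s*(\d+)$")


def row_count(rows, column=None):
    return len(rows)


def missing_count(rows, column):
    return sum(1 for r in rows if r.get(column) is None)


def duplicate_count(rows, column):
    """Extra occurrences of non-null values (simplified definition)."""
    values = [r.get(column) for r in rows if r.get(column) is not None]
    return len(values) - len(set(values))


METRICS = {"row_count": row_count, "missing_count": missing_count, "duplicate_count": duplicate_count}


def run_declarative_checks(rows: list[dict], checks: list[str]) -> dict[str, bool]:
    """Evaluate each check string and map it to True (pass) or False (fail)."""
    results = {}
    for text in checks:
        match = CHECK_RE.match(text.strip())
        if not match or match.group(1) not in METRICS:
            raise ValueError(f"unsupported check: {text!r}")
        metric, column, op, threshold = match.groups()
        observed = METRICS[metric](rows, column)
        results[text] = OPS[op](observed, int(threshold))
    return results
```

Because the checks are just strings, they could live in a config file that analysts edit without touching Python. The engine that evaluates them stays the same for every team.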

5. Community, maturity, and integrations

Great Expectations has a strong open-source community and many integrations with data platforms. Deequ benefits from Amazon’s backing and a focused niche in Spark. Soda has gained traction for observability-first use cases and offers both open-source and commercial components for easier monitoring setup. Reviews and blog posts across the ecosystem point to complementary strengths and a common practice of combining tools. For example, teams use Great Expectations for complex expectations and Soda for continuous monitoring (ThoughtWorks article).

When to choose which tool

- Great Expectations — Choose GE when you want readable, version-controlled expectations, strong documentation, and a flexible Python-first developer experience. Great for teams focused on validation-as-code and governance.

- Deequ — Choose Deequ when your processing is Spark-based and you need scalable, statistically robust checks on very large datasets.

- Soda — Choose Soda when you want quick scanning, templated checks, and an observability-focused workflow with built-in alerting. Soda is often chosen for monitoring production data and lightweight scanning.

Practical strategy to implement data quality testing

- Inventory critical datasets and identify business rules (start with the KPIs that matter most).

- Define a minimal set of checks: completeness, uniqueness, range/value validity, and schema checks.

- Add distribution and anomaly checks for model inputs or key metrics.

- Implement validation-as-code for development and CI (Great Expectations is a natural fit here).

- Set up continuous monitoring with templated scans and alerting (Soda or Soda Cloud works well).

- For big data or Spark-first pipelines, implement heavy-data checks with Deequ and export metrics to your observability layer.

- Automate incident playbooks that link alerts to remediation steps and owners.
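The minimal checks from the list above can be wired into a CI step along these lines. This is a hypothetical sketch, and the following are invented for illustration:
- the `orders` dataset,
- `EXPECTED_SCHEMA`,
- the `OWNERS` table that maps a dataset to an on-call owner and a runbook.

The function returns a process exit code, so a CI job fails when any check does.

```python
import sys

# Hypothetical contract for an `orders` dataset.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "country": str}
# Hypothetical routing table: dataset -> (owner, runbook)
OWNERS = {"orders": ("data-eng-oncall", "runbooks/orders.md")}


def schema_errors(rows: list[dict], expected: dict) -> list[str]:
    """Report missing columns and non-null values of the wrong type."""
    errors = []
    for i, row in enumerate(rows):
        missing = expected.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing {sorted(missing)}")
        for col, typ in expected.items():
            value = row.get(col)
            if value is not None and not isinstance(value, typ):
                errors.append(f"row {i}: {col} is {type(value).__name__}, expected {typ.__name__}")
    return errors


def gate(dataset: str, rows: list[dict]) -> int:
    """Run the minimal checks and return a process exit code (0 = pass)."""
    failures = schema_errors(rows, EXPECTED_SCHEMA)
    ids = [r.get("order_id") for r in rows]
    if None in ids:
        failures.append("order_id has nulls")
    if len(ids) != len(set(ids)):
        failures.append("order_id is not unique")
    amounts = [r.get("amount") for r in rows]
    if any(isinstance(a, (int, float)) and a < 0 for a in amounts):
        failures.append("amount has negative values")
    owner, runbook = OWNERS.get(dataset, ("unassigned", "n/a"))
    for failure in failures:
        # Each alert names an owner and a runbook so triage starts immediately.
        print(f"[{dataset}] {failure} -> owner={owner}, runbook={runbook}", file=sys.stderr)
    return 1 if failures else 0
```

In a CI job you would call `sys.exit(gate("orders", rows))` after loading the rows (or a sample of them).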

Common pitfalls to avoid

- Running expensive full-table scans too often — use sampling, incremental checks, or metric-level monitoring.

- Writing brittle expectations that fail on normal, benign drift — favor tolerances and statistical checks where appropriate.

- Not storing test history — historical metrics help detect gradual drift versus one-off spikes.
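Tolerances and sampling can be combined in a single check. GE expresses tolerance through the `mostly` argument accepted by many of its expectations. The sketch below reimplements that idea in plain Python with a hypothetical `expect_mostly` helper, and adds optional seeded sampling so repeated runs on the same data are reproducible.

```python
import random
from typing import Callable, Optional


def expect_mostly(
    rows: list[dict],
    predicate: Callable[[dict], bool],
    mostly: float = 0.99,
    sample_size: Optional[int] = None,
    seed: int = 0,
) -> dict:
    """Pass if at least `mostly` of the (optionally sampled) rows satisfy `predicate`."""
    if sample_size is not None and sample_size < len(rows):
        # A seeded sample keeps results reproducible between runs on the same data.
        rows = random.Random(seed).sample(rows, sample_size)
    if not rows:
        # Vacuously true; an empty table should be caught by a separate row-count check.
        return {"success": True, "observed": 1.0}
    observed = sum(1 for r in rows if predicate(r)) / len(rows)
    return {"success": observed >= mostly, "observed": observed}
```

With `mostly=0.99`, a handful of legitimately empty emails no longer fails the run, while a sudden jump in nulls still does.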

Trends and what’s next in data quality tooling

The ecosystem continues to evolve toward observability and integration. Expect more hybrid approaches — validation-as-code combined with lightweight observability platforms, richer anomaly detection powered by time-series analytics, and better integrations into orchestration and alerting systems. Atlan and other observability-focused resources note growing support for templated checks and extensible test types as a major trend (Atlan overview).

There’s also a move to make checks more accessible to non-engineering stakeholders: simpler YAML configurations, auto-generated expectations from profiling, and clearer documentation so data consumers can understand what’s being validated.
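Auto-generating expectations from profiling can be sketched as follows: profile one column, then propose checks that the observed data already satisfies. The `profile_and_suggest` function, its suggestion format and the `range_margin` padding are hypothetical.

Suggestions encode whatever the profiled data looked like, including any bad records. They should therefore be reviewed by a person before they become enforced checks.

```python
def profile_and_suggest(rows: list[dict], column: str, max_categories: int = 10,
                        range_margin: float = 0.1) -> list[dict]:
    """Profile one column and propose expectations that the current data passes."""
    values = [r.get(column) for r in rows]
    present = [v for v in values if v is not None]
    suggestions = []
    if not present:
        return suggestions  # nothing to learn from an all-null column
    if len(present) == len(values):
        suggestions.append({"type": "not_null", "column": column})
    if len(set(present)) == len(present):
        suggestions.append({"type": "unique", "column": column})
    numeric = all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in present)
    if numeric:
        lo, hi = min(present), max(present)
        pad = (hi - lo) * range_margin  # widen the observed range to tolerate normal growth
        suggestions.append({"type": "between", "column": column, "min": lo - pad, "max": hi + pad})
    elif len(set(present)) <= max_categories:
        # Low-cardinality text column: suggest an allowed-value set.
        suggestions.append({"type": "in_set", "column": column, "values": sorted(set(present))})
    return suggestions
```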

FAQ

What is a data quality test?

A data quality test is an assertion that checks expected properties in data — for example, ensuring critical columns are not null, values fall into valid ranges, or distributions remain stable over time.

How do you test for data quality?

Define rules (expectations) for your data, implement them in pipelines or observability tools, and automate execution. Use validation-as-code in CI and monitoring tools for continuous checks in production.

What are common data quality checks?

Typical checks include completeness (no unexpected nulls), uniqueness (no duplicates), schema conformity (expected fields and types), validity (allowed values), timeliness, and distribution drift detection.
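Timeliness is often the easiest of these to automate: compare the newest load timestamp against an allowed maximum age. Here is a minimal sketch, assuming each row carries a timezone-aware `loaded_at` timestamp (a hypothetical column name):

```python
from datetime import datetime, timedelta, timezone


def freshness_check(rows: list[dict], max_age: timedelta, ts_column: str = "loaded_at",
                    now: datetime = None) -> dict:
    """Pass if the newest `ts_column` value is at most `max_age` old."""
    now = now or datetime.now(timezone.utc)
    timestamps = [r[ts_column] for r in rows if r.get(ts_column) is not None]
    if not timestamps:
        # An empty or never-loaded table is treated as stale.
        return {"success": False, "age_hours": None}
    age = now - max(timestamps)
    return {"success": age <= max_age, "age_hours": age.total_seconds() / 3600}
```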

What are the 7 aspects of data quality?

There is no single canonical list, but a common framing names seven aspects: accuracy, completeness, consistency, timeliness, validity, uniqueness, and integrity. Together, they form the foundation of reliable data quality programs.

What are the six data quality metrics?

Commonly tracked metrics include completeness, uniqueness, validity, timeliness, consistency, and accuracy. These dimensions help teams monitor and improve data reliability.

Choosing between Great Expectations, Deequ, and Soda isn’t about finding the “perfect” tool — it’s about matching tool strengths to your stack, scale, and team. Many teams find success combining tools: expressive, version-controlled expectations for development and CI, paired with lightweight, metric-driven monitoring in production. If you’d like help designing a strategy or integrating these tools into your data platform, we’re always happy to chat and nerd out about pipelines.