Containers have become the Swiss Army knife of modern data engineering: compact, flexible, and liable to surprise you with a handy tool when you least expect it. But when your data pipelines need to scale, heal themselves, and play nicely across clouds, the choice between Docker and Kubernetes stops being academic and starts being strategic. In this guide you’ll learn what each tool actually does, when to use Docker alone, when to step up to Kubernetes, practical strategies for production data pipelines, and how to avoid the common potholes teams hit on the road to a resilient orchestration setup.

Why container orchestration matters in data engineering

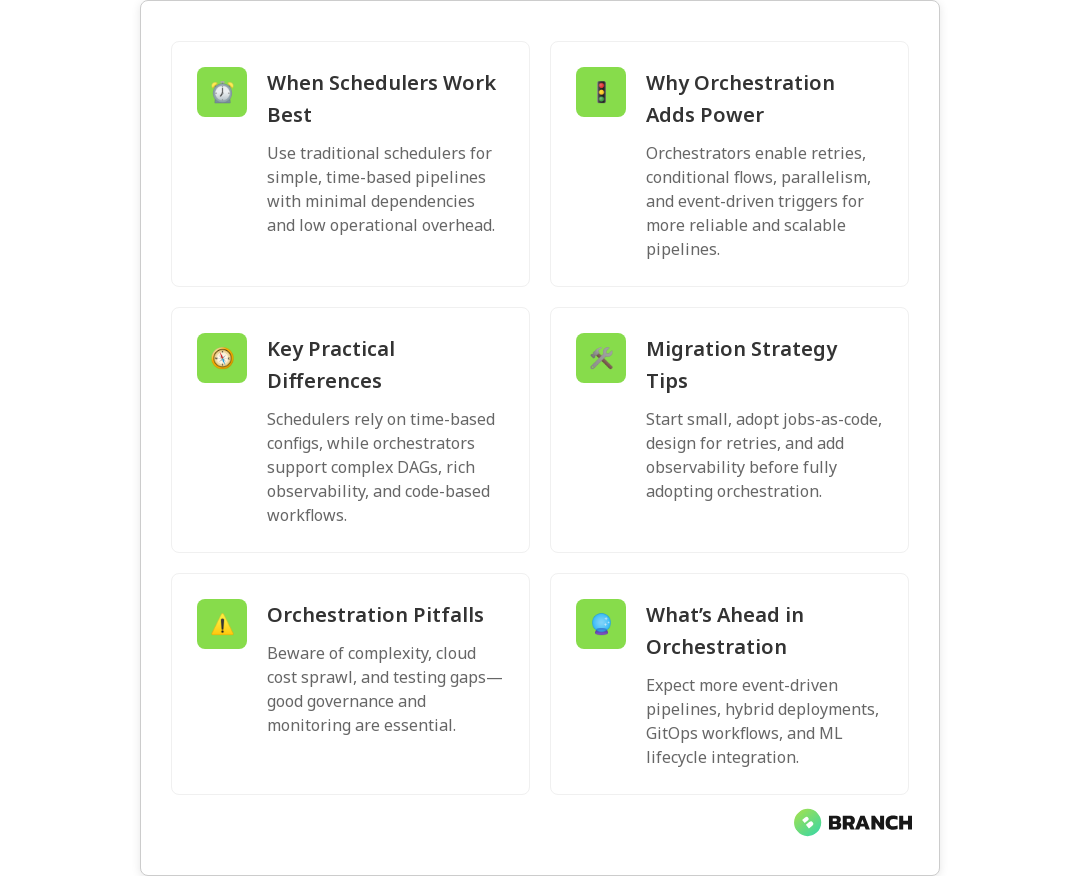

Data engineering workloads are no longer single, monolithic jobs that run on a VM and go to sleep. Modern pipelines are composed of many small services — extractors, transformers, model-serving endpoints, and observability agents — that must run reliably, scale with demand, and recover from failure automatically. This is where container orchestration comes in: it automates deployment, scaling, networking, and lifecycle management for containerized applications so you can focus on data logic instead of babysitting infrastructure.

For a practical, hands-on look at how virtualization, Docker, and Kubernetes tie into data engineering workflows, the Coursera course on virtualization, Docker, and Kubernetes is an excellent refresher.

Docker and Kubernetes — clarifying roles (they’re not direct competitors)

First, let’s clear up a common misconception: Docker and Kubernetes serve different purposes. Docker is primarily a platform for building, packaging, and running containers. Kubernetes is an orchestration system for managing containers at scale across a cluster of machines. Think of Docker as the shipping container and Kubernetes as the port authority that coordinates thousands of containers arriving, docking, moving, and leaving.

The distinction is well summarized in the Docker blog post on how Docker and Kubernetes work together, and in the DataCamp comparison article (DataCamp: Kubernetes vs Docker), which breaks down the two roles and explains advanced Kubernetes features such as self-healing and load balancing in clear terms.

Key functional differences

- Container creation and runtime: Docker builds images and runs containers, typically on a single host.

- Orchestration and cluster management: Kubernetes manages groups of containers across many nodes, handling scheduling, scaling, and recovery.

- Networking and service discovery: Kubernetes provides built-in load balancing and service abstractions suited to microservice and pipeline architectures.

- Persistence and state: Kubernetes integrates concepts like PersistentVolumes, StatefulSets, and Jobs for stateful workloads and batch jobs common in data engineering.
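To make the batch-job primitives in the last bullet a little more concrete, here is a minimal sketch of a CronJob manifest built as a plain Python dict. The job name, image reference, and schedule are hypothetical placeholders, and the policy values (`Forbid`, `backoffLimit` of 3) are illustrative choices rather than recommendations.

```python
import json


def nightly_load_cronjob(name: str, image: str, schedule: str) -> dict:
    """Build a batch/v1 CronJob manifest as a plain dict."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,           # standard cron syntax
            "concurrencyPolicy": "Forbid",  # skip a run if the previous one is still going
            "jobTemplate": {
                "spec": {
                    "backoffLimit": 3,      # retries before the Job is marked failed
                    "template": {
                        "spec": {
                            "restartPolicy": "OnFailure",
                            "containers": [{"name": name, "image": image}],
                        }
                    },
                }
            },
        },
    }


# Hypothetical name, image, and schedule, used only for illustration.
manifest = nightly_load_cronjob(
    "nightly-load",
    "registry.example.com/etl/nightly-load:1.0.0",
    "0 2 * * *",
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied as-is, assuming the image exists in a registry your cluster can reach.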

When to use Docker alone (short pipelines, local dev, simple CI)

Docker alone is great when your needs are simple. Use Docker for:

- Local development and testing of extract-transform-load (ETL) components.

- CI pipelines that build and run containers for unit and integration tests.

- Single-node processing or scheduled batch jobs where high availability and cluster-level scaling aren’t required.

If your pipeline is small, latency-insensitive, and triggered by a single scheduler (for example, one running on a VM), Docker gives you repeatable environments with minimal operational overhead. It keeps things lightweight and fast to iterate.

When Kubernetes makes sense (scale, reliability, multi-service pipelines)

Choose Kubernetes when you need:

- Elastic scaling across many services or tenants.

- Self-healing so jobs restart automatically after node or Pod failures.

- Advanced networking, service discovery, and observability across components.

- Multi-cloud or hybrid deployments where portability and standardization matter.

Kubernetes shines in production environments where uptime, automation, and scale are priorities. If your data stack includes streaming systems, model-serving endpoints, and multiple microservices, Kubernetes provides the primitives to tie those pieces together reliably. For a beginner-friendly overview of orchestration options and portability, Splunk’s Container Orchestration guide is an approachable resource.
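Self-healing is easier to reason about once you see the idea behind it: a control loop that keeps comparing the desired state with what is actually running and closes the gap. The sketch below is a toy model of that idea in plain Python, not Kubernetes code; the `ToyCluster` class and the worker names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Toy stand-in for a controller that keeps N workers running."""

    desired_replicas: int
    running: list[str] = field(default_factory=list)
    next_id: int = 1

    def reconcile(self) -> list[str]:
        """Compare desired vs. actual state and return the actions taken."""
        actions = []
        # Too few workers: start replacements until the count matches.
        while len(self.running) < self.desired_replicas:
            name = f"worker-{self.next_id}"
            self.next_id += 1
            self.running.append(name)
            actions.append(f"start {name}")
        # Too many workers: stop the most recently started ones.
        while len(self.running) > self.desired_replicas:
            name = self.running.pop()
            actions.append(f"stop {name}")
        return actions


cluster = ToyCluster(desired_replicas=3)
startup = cluster.reconcile()       # brings up three workers

cluster.running.remove("worker-2")  # simulate a crashed worker
healed = cluster.reconcile()        # the loop notices and replaces it

cluster.desired_replicas = 2        # scale down by changing the desired state
scaled_down = cluster.reconcile()

print(startup, healed, scaled_down, sep="\n")
```

The point of the design is that nobody issues a "restart worker-2" command. You only declare how many workers you want, and the loop works out what to do.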

Hybrid approach: Docker + Kubernetes

In many real-world deployments you’ll use Docker to build images and Kubernetes to run them. CI/CD pipelines build Docker images, then Kubernetes handles rollout, scaling, and updates. This combo minimizes friction between development and operations while enabling production-grade orchestration.
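As a rough sketch of that handoff, the snippet below derives an image reference from a commit SHA (the part a CI job would typically do after building) and plugs it into a Deployment manifest (the part Kubernetes acts on). The registry host, repository path, SHA, and service name are all hypothetical, and tagging images by commit SHA is one common convention, not a requirement.

```python
import json


def image_ref(registry: str, repo: str, git_sha: str) -> str:
    """Tag the image with a short commit SHA so every build is traceable."""
    return f"{registry}/{repo}:{git_sha[:12]}"


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the Pod template labels
            "strategy": {"type": "RollingUpdate"},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical registry, repository, and commit SHA.
image = image_ref("registry.example.com", "pipelines/transformer", "3f2a9c1d7b6e4a5f8c0d")
deployment = deployment_manifest("transformer", image, replicas=3)
print(json.dumps(deployment, indent=2))
```

Changing only the image tag in a manifest like this and re-applying it is what triggers a rolling update.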

Strategies for running containerized data pipelines

Here are practical strategies to make container orchestration work for data engineering, not against it:

- Design for idempotency and checkpointing. Data jobs should be restartable without duplicating results. Use checkpoints or metadata stores to track processed offsets or batches.

- Use Kubernetes Jobs and CronJobs for batch tasks. Kubernetes provides native primitives for one-off and scheduled jobs — ideal for nightly loads and backfills.

- Separate compute from storage. Keep state in managed object stores or databases, not container filesystems, so compute can scale independently.

- Automate observability. Integrate metrics, logs, and tracing early. Kubernetes makes it easy to attach sidecar containers or agents for telemetry.

- Leverage managed services where appropriate. Managed Kubernetes (GKE, EKS, AKS) and cloud-native serverless containers can simplify operations while retaining portability.
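The first strategy, idempotency with checkpointing, is the one that most often decides whether an automatic restart is harmless or a data-quality incident. Here is a minimal sketch, assuming batches carry stable IDs. The local JSON file and the in-memory `sink` dict are stand-ins for a real metadata store and a real target table, and the function names are invented for this example.

```python
import json
import tempfile
from pathlib import Path


def load_checkpoint(path: Path) -> set[str]:
    """Return the IDs of batches that were already processed."""
    if path.exists():
        return set(json.loads(path.read_text()))
    return set()


def save_checkpoint(path: Path, done: set[str]) -> None:
    """Write to a temp file, then rename, so a crash never leaves a half-written file."""
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps(sorted(done)))
    tmp.replace(path)


def run_job(batches: dict[str, list[int]], checkpoint: Path, sink: dict[str, int]) -> list[str]:
    """Process only unseen batches and return the IDs handled in this run."""
    done = load_checkpoint(checkpoint)
    processed = []
    for batch_id, rows in batches.items():
        if batch_id in done:
            continue                # already handled by an earlier run
        sink[batch_id] = sum(rows)  # keyed write: a redo overwrites instead of duplicating
        done.add(batch_id)
        save_checkpoint(checkpoint, done)
        processed.append(batch_id)
    return processed


with tempfile.TemporaryDirectory() as tmpdir:
    checkpoint = Path(tmpdir) / "checkpoint.json"
    sink: dict[str, int] = {}
    batches = {"2024-01-01": [1, 2, 3], "2024-01-02": [4, 5]}

    first_run = run_job(batches, checkpoint, sink)
    second_run = run_job(batches, checkpoint, sink)  # simulates a restart

print(first_run, second_run, sink)
```

Two safeguards work together here. The checkpoint lets a restarted job skip finished batches, and the keyed write means that even if the job crashes between the write and the checkpoint update, redoing the batch does not duplicate results.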

Common challenges and how to overcome them

Adopting containers and Kubernetes adds complexity. Here are the frequent problems teams hit and practical fixes:

- Operational complexity: Kubernetes has a learning curve. Mitigate by using managed offerings, adopting GitOps practices, and investing in observability and runbooks.

- Resource contention: Misconfigured requests and limits can lead to noisy neighbors. Set resource quotas, use vertical or horizontal autoscaling, and test under load.

- Security and compliance: Container images introduce a software supply chain of base images and third-party packages. Harden images, scan for vulnerabilities, and apply network policies and RBAC.

- Stateful workloads: Running stateful services in Kubernetes requires PersistentVolumes and careful consideration of data locality and backups.
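For the resource contention item, one cheap safeguard is a pre-flight check that catches missing or inverted requests and limits before a manifest ever reaches the cluster. The sketch below is a simplified illustration: `parse_quantity` handles only the common suffixes (`m`, `k`/`M`/`G`/`T`, `Ki`/`Mi`/`Gi`/`Ti`) rather than the full Kubernetes quantity grammar, and `check_resources` is a hypothetical helper, not part of any Kubernetes client library.

```python
from decimal import Decimal

# Simplified subset of Kubernetes quantity suffixes.
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
    "m": Decimal("0.001"),  # milli, e.g. 500m CPU = half a core
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert strings like '500m' or '1Gi' into a plain number."""
    # Try two-character suffixes first so 'Mi' is not mistaken for a bare number.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(quantity)


def check_resources(resources: dict) -> list[str]:
    """Return human-readable problems found in a container's resources block."""
    problems = []
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    for resource in ("cpu", "memory"):
        if resource not in requests:
            problems.append(f"{resource}: no request set")
            continue
        if resource in limits and parse_quantity(requests[resource]) > parse_quantity(limits[resource]):
            problems.append(
                f"{resource}: request {requests[resource]} exceeds limit {limits[resource]}"
            )
    return problems


good = {"requests": {"cpu": "500m", "memory": "512Mi"}, "limits": {"cpu": "1", "memory": "1Gi"}}
bad = {"requests": {"cpu": "2"}, "limits": {"cpu": "1500m", "memory": "1G"}}

print(check_resources(good))
print(check_resources(bad))
```

A check like this fits naturally into CI next to image scanning, so that configuration mistakes surface in a pull request rather than as a noisy neighbor in production.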

Trends and the future of orchestration in data engineering

The orchestration landscape keeps evolving. Here are trends to watch:

- More managed and opinionated services: Cloud providers continue to deliver managed orchestration and serverless containers that reduce operational burden.

- Tighter CI/CD and GitOps integration: Deployments are increasingly automated via declarative configs and GitOps workflows.

- Specialized orchestration for data workloads: Frameworks and operators are emerging that focus on ML pipelines, feature stores, and streaming topologies.

- Portability and standards: Kubernetes remains the common denominator for portability across cloud providers, reducing vendor lock-in concerns.

If you want a simple walk-through of Kubernetes adoption and why organizations are choosing it for reliability and security, MarutiTech’s guide on Kubernetes adoption provides practical context (MarutiTech: Kubernetes adoption).

FAQ

What is container orchestration?

Container orchestration is the automated management of containerized applications across clusters. It handles deployment, scaling, networking, health checks, and lifecycle management so apps run reliably without manual intervention.

How is Kubernetes different from Docker?

Docker is mainly for building and running containers. Kubernetes is an orchestration platform that manages containers at scale across multiple nodes. Teams typically use Docker to create images and Kubernetes to deploy and manage them in production.

How does Docker Swarm compare with Kubernetes?

Docker Swarm is Docker’s built-in clustering and orchestration tool, simpler for small clusters. Kubernetes is more feature-rich and extensible, offering advanced scheduling, self-healing, and a large ecosystem, making it the dominant choice for complex workloads.

What is an advantage of using Kubernetes for container orchestration?

Kubernetes automates operations at scale: it provides self-healing, horizontal scaling, rolling updates, and service discovery. Together, these enable resilient, highly available pipelines without constant manual work.
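To give a feel for how horizontal scaling decisions are made, the Horizontal Pod Autoscaler documentation describes the core rule as scaling the replica count by the ratio of the observed metric to the target, rounded up. The sketch below restates that rule in Python. The function name, the example numbers, and the replica bounds are illustrative assumptions, and the real autoscaler applies further rules (such as stabilization windows) that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Approximate the HPA rule: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Example: 4 replicas with a 60% CPU utilization target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # load above target
print(desired_replicas(4, current_metric=63, target_metric=60))  # within tolerance
print(desired_replicas(4, current_metric=20, target_metric=60))  # load below target
```

With these inputs the three calls return 6, 4, and 2 replicas respectively: scale out under load, hold steady near the target, and scale in when the workers are idle.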

What are the alternatives to Kubernetes?

Alternatives include Docker Swarm (simpler needs), Apache Mesos (large-scale systems), and serverless/container services like Google Cloud Run or AWS Fargate that abstract cluster management. Splunk’s overview provides a useful comparison.

Container orchestration is not a silver bullet, but when matched to your team’s needs and maturity, it gives data engineering teams the capacity to scale, recover, and deliver data products reliably. Start small, automate often, and remember: containers make reproducibility easy, orchestration makes reliability sustainable — and both together keep data pipelines moving without you having to fight fires at midnight.