Choosing a data modeling approach can feel a bit like choosing between two excellent coffees: both will wake you up and get the job done, but the flavor, strength, and ritual differ. In the data world, Medallion Architecture and Data Vault are two popular patterns with different goals and trade-offs. This article explains what each one is, where they shine, how they can work together, and how to pick the right path for your organization.

By the end you’ll understand the core differences, practical use cases, implementation tips, and common pitfalls — so you can recommend a strategy with confidence (and maybe a little style).

What is Medallion Architecture?

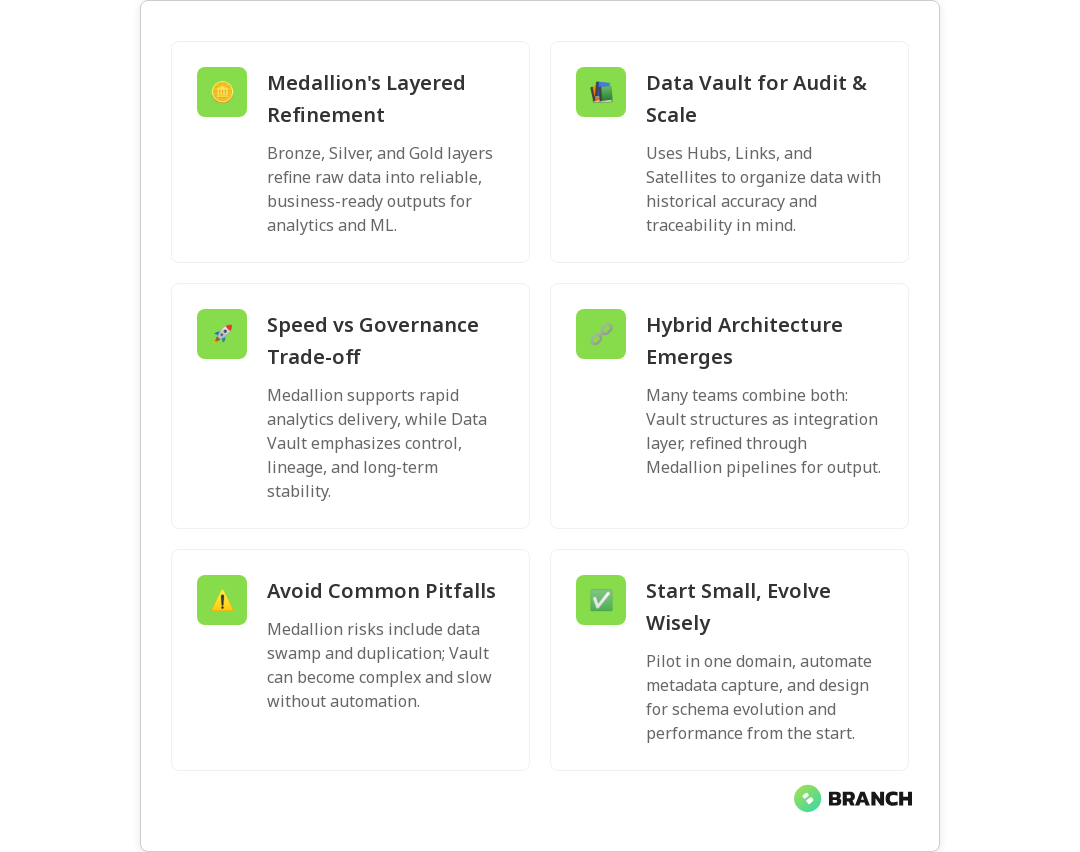

Medallion Architecture is a layered pattern for organizing data in modern lakehouses and data platforms. The basic idea is simple and elegant: move raw data through a series of refinement stages so it becomes trustworthy, performant, and ready for analytics or operational use. The common tiers are Bronze (raw ingestion), Silver (cleaned and joined), and Gold (business-ready, aggregated, or curated models).

This approach emphasizes ELT-style incremental refinement, pipelines that transform data as it moves from layer to layer, and a clear separation of concerns between raw storage and curated output. For a concise breakdown of these layers and their goals, see Databricks’ overview of the Medallion pattern (Databricks’ Medallion explainer).
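The layer-by-layer flow can be illustrated with a minimal, framework-free sketch in Python. It assumes hypothetical order events arriving as raw dicts. The function names (`to_bronze`, `to_silver`, `to_gold`) and field names are illustrative only and are not part of any platform's API. On a real lakehouse, each step would more likely be a Spark or SQL job writing to tables.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical raw order events as they might arrive from a source system.
RAW_EVENTS = [
    {"order_id": "A1", "customer": " alice ", "amount": "19.99"},
    {"order_id": "A1", "customer": " alice ", "amount": "19.99"},  # duplicate delivery
    {"order_id": "B2", "customer": "BOB", "amount": "5.00"},
    {"order_id": "C3", "customer": "alice", "amount": "not-a-number"},  # bad record
]


def to_bronze(events, source="orders_api"):
    """Bronze: keep every record as-is, adding only ingestion metadata."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    return [{**e, "_source": source, "_loaded_at": loaded_at} for e in events]


def to_silver(bronze_rows):
    """Silver: fix types, standardize values, drop duplicates and invalid rows."""
    seen, silver = set(), []
    for row in bronze_rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would quarantine this row rather than drop it
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        silver.append({
            "order_id": row["order_id"],
            "customer": row["customer"].strip().lower(),
            "amount": amount,
        })
    return silver


def to_gold(silver_rows):
    """Gold: a business-ready aggregate, here revenue per customer."""
    revenue = defaultdict(float)
    for row in silver_rows:
        revenue[row["customer"]] += row["amount"]
    return dict(revenue)


if __name__ == "__main__":
    gold = to_gold(to_silver(to_bronze(RAW_EVENTS)))
    print(gold)  # {'alice': 19.99, 'bob': 5.0}
```

Bronze stays untouched, so Silver and Gold can be rebuilt from it if cleaning rules change.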

When Medallion shines

- Speed of iteration: teams can rapidly build pipelines from Bronze to Gold.

- Simplicity: clear stages make it easy to assign ownership and add observability at each layer.

- Business-focused delivery: Gold tables map directly to reports, dashboards, or ML features.

What is Data Vault?

Data Vault is a modeling technique designed for scalable, auditable, and historically accurate data integration. Instead of designing around business-friendly tables, Data Vault breaks entities into Hubs (keys), Links (relationships), and Satellites (context and descriptive attributes). This separation makes it easier to ingest changing source systems and maintain a full-history, traceable store of what happened when.
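The following sketch shows how that separation plays out. It splits one hypothetical source row (a customer placing an order) into two hubs, a link, and two satellites. Hash keys are MD5 over trimmed, upper-cased business keys. That convention is common in Data Vault 2.0 implementations, but it is still a design choice, and all table and column names here are hypothetical.

```python
import hashlib
from datetime import datetime, timezone


def hash_key(*business_keys):
    """Deterministic surrogate key: MD5 over trimmed, upper-cased business keys.

    Assumption: MD5 with a '||' delimiter, which keeps ('AB', 'C') distinct from ('A', 'BC').
    """
    normalized = "||".join(str(k).strip().upper() for k in business_keys)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


def split_order_record(record, record_source, load_ts=None):
    """Split one hypothetical source row into hub, link, and satellite rows."""
    load_ts = load_ts or datetime.now(timezone.utc).isoformat()
    customer_hk = hash_key(record["customer_id"])
    order_hk = hash_key(record["order_id"])
    meta = {"load_ts": load_ts, "record_source": record_source}

    return {
        # Hubs: only the business key, its hash, and load metadata.
        "hub_customer": {"customer_hk": customer_hk, "customer_id": record["customer_id"], **meta},
        "hub_order": {"order_hk": order_hk, "order_id": record["order_id"], **meta},
        # Link: the relationship, keyed by a hash of the combined business keys.
        "link_customer_order": {
            "customer_order_hk": hash_key(record["customer_id"], record["order_id"]),
            "customer_hk": customer_hk,
            "order_hk": order_hk,
            **meta,
        },
        # Satellites: descriptive attributes that may change over time.
        "sat_customer": {"customer_hk": customer_hk, "name": record["customer_name"], **meta},
        "sat_order": {"order_hk": order_hk, "amount": record["amount"],
                      "status": record["status"], **meta},
    }


if __name__ == "__main__":
    row = {"customer_id": "C-42", "customer_name": "Alice", "order_id": "O-1001",
           "amount": 19.99, "status": "shipped"}
    for table, values in split_order_record(row, "crm.orders").items():
        print(table, values)
```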

Data Vault is intentionally normalized and pattern-based (every hub, link, and satellite follows the same template), which is useful in large organizations with many source systems or when regulatory auditability is required. For a practical perspective on how Data Vault compares to other canonical models, check Matillion’s comparison pieces that discuss Data Vault alongside star schemas and 3NF (Matillion on 3NF vs Data Vault).

When Data Vault shines

- Complex source environments: many systems with overlapping or changing keys.

- Regulatory and audit needs: full historical preservation and lineage are first-class features.

- Long-term scalability: schemas evolve without rewriting historical records.
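Full-history preservation typically comes from loading satellites insert-only. A new version is appended only when the descriptive attributes actually change, which is detected by comparing a hash of those attributes (often called a hashdiff). The sketch below uses an in-memory list of dicts as a stand-in for a satellite table and ISO-8601 date strings for load timestamps. On a warehouse this would usually be an `INSERT ... SELECT` that compares against the latest row per key.

```python
import hashlib


def hashdiff(attributes):
    """Hash of the descriptive attributes; keys are sorted so ordering doesn't matter."""
    payload = "||".join(f"{k}={attributes[k]}" for k in sorted(attributes))
    return hashlib.md5(payload.encode("utf-8")).hexdigest()


def load_satellite(satellite, hub_key, attributes, load_ts):
    """Append a new version only if attributes differ from the latest one.

    Existing rows are never updated or deleted, so history is preserved.
    Returns True if a row was appended.
    """
    versions = [r for r in satellite if r["hub_key"] == hub_key]
    latest = max(versions, key=lambda r: r["load_ts"], default=None)
    new_diff = hashdiff(attributes)
    if latest is not None and latest["hashdiff"] == new_diff:
        return False  # unchanged: nothing to insert
    satellite.append({"hub_key": hub_key, "load_ts": load_ts,
                      "hashdiff": new_diff, **attributes})
    return True


def as_of(satellite, hub_key, ts):
    """Return the version that was current at timestamp ts, or None."""
    versions = [r for r in satellite if r["hub_key"] == hub_key and r["load_ts"] <= ts]
    return max(versions, key=lambda r: r["load_ts"], default=None)


if __name__ == "__main__":
    sat = []
    load_satellite(sat, "C-42", {"city": "Oslo", "tier": "gold"}, "2024-01-01")
    load_satellite(sat, "C-42", {"city": "Oslo", "tier": "gold"}, "2024-02-01")  # unchanged
    load_satellite(sat, "C-42", {"city": "Bergen", "tier": "gold"}, "2024-03-01")
    print(len(sat))                                  # 2
    print(as_of(sat, "C-42", "2024-02-15")["city"])  # Oslo
```

Because old rows are never updated, a lookup like `as_of` can answer "what did we know on date X?", which is the auditability property described above.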

Direct Comparison: How They Differ

Philosophy

Medallion is a pragmatic pipeline framework: raw to refined to business-ready. Data Vault is a modeling philosophy: build a scalable, auditable foundation that captures everything and evolves safely. Medallion focuses on stages and transformation flow; Data Vault focuses on structural separation and historical fidelity.

Design & Modeling

Medallion’s Silver and Gold layers often contain denormalized or dimensional models tuned for analytics performance. Data Vault prefers normalized components (Hubs/Links/Satellites) that make integration and historical tracking straightforward. That means Data Vault tends to have more tables/objects but fewer surprises when source systems change.

Speed vs Governance

Medallion is designed for fast iteration and for delivering business-ready datasets quickly. Data Vault prioritizes governance, traceability, and robustness. If your team needs fast time-to-insight, Medallion can get you there. If you need long-term control over lineage and history, Data Vault is the stronger fit.

Cost and Complexity

Data Vault can increase the number of tables and the joins each query needs, which adds storage, compute, and modeling overhead. Medallion’s curated Gold tables can be optimized for cost and performance, but keeping them aligned with changing business needs often requires repeated engineering effort.

Hybrid: When Medallion and Data Vault Work Together

They don’t have to be rivals. Many modern implementations use Data Vault as the integration layer (capturing raw, historical truths) and the Medallion pattern to progressively refine that store into business-ready Gold outputs. In other words, Data Vault provides the reliable, auditable foundation in the Bronze/Silver tiers, and Medallion-style transformations build on it for analytics and operational needs.

This hybrid is becoming more common in lakehouse platforms and cloud fabrics. For examples of blending the two — including the idea of a Business Vault for abstraction — see Trifork’s take on modern data warehouse patterns and an exploration of the combined approach in Microsoft Fabric (Trifork on modern data warehouses and Datavault + Medallion in Fabric).

Practical hybrid workflow

- Ingest source events into Bronze: raw files or change-data-capture streams.

- Model Hubs/Links/Satellites in a Vault-style raw layer for lineage.

- Build Silver curated tables that join vault components into cleaned domain models.

- Create Gold aggregates, dimensional models, or feature stores for consumption.
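The last two steps of the workflow can be sketched as follows. A Silver step joins a hub with the latest row of its satellite to produce a flat, current-state customer table. A Gold step then aggregates that table. The in-memory vault contents and column names are hypothetical. In practice, the same logic would usually be a SQL view or a materialized table.

```python
from collections import Counter

# Hypothetical raw-vault contents (normally tables; here lists of dicts).
HUB_CUSTOMER = [
    {"customer_hk": "h1", "customer_id": "C-1"},
    {"customer_hk": "h2", "customer_id": "C-2"},
]
SAT_CUSTOMER = [
    {"customer_hk": "h1", "load_ts": "2024-01-01", "name": "Alice", "country": "NO"},
    {"customer_hk": "h1", "load_ts": "2024-03-01", "name": "Alice", "country": "SE"},
    {"customer_hk": "h2", "load_ts": "2024-02-01", "name": "Bob", "country": "NO"},
]


def latest_per_key(satellite, key):
    """Keep only the most recent satellite row for each hub key."""
    latest = {}
    for row in satellite:
        current = latest.get(row[key])
        if current is None or row["load_ts"] > current["load_ts"]:
            latest[row[key]] = row
    return latest


def build_silver_customers(hub, satellite):
    """Silver: flatten hub + current satellite attributes into one domain table."""
    current = latest_per_key(satellite, "customer_hk")
    silver = []
    for h in hub:
        attrs = current.get(h["customer_hk"], {})  # hub keys without attributes get None
        silver.append({
            "customer_id": h["customer_id"],
            "name": attrs.get("name"),
            "country": attrs.get("country"),
        })
    return silver


def build_gold_customers_by_country(silver):
    """Gold: a business-facing aggregate, here customer count per country."""
    return dict(Counter(row["country"] for row in silver))


if __name__ == "__main__":
    silver = build_silver_customers(HUB_CUSTOMER, SAT_CUSTOMER)
    print(silver)
    print(build_gold_customers_by_country(silver))  # {'SE': 1, 'NO': 1}
```

The vault keeps every historical version. Silver decides which view of that history (here, "current state") the business sees.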

Implementation Guidance: Practical Checklist

- Define your goals first: speed, governance, or both? Let that guide architecture.

- Start small: pick one domain for a pilot that exercises ingestion, lineage, and consumption.

- Automate metadata and lineage capture: both approaches benefit from strong observability.

- Monitor cost & performance: normalized vault layers can be query-intensive; use materializations or caching for heavy reads.

- Plan for evolution: schema drift, key changes, and new sources are inevitable — design for them.
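For the "plan for evolution" item, one lightweight guard is to compare each incoming batch's columns against an expected contract before promoting it past Bronze. This minimal sketch uses a hypothetical expected schema held as a dict mapping column names to Python types. Many platforms offer built-in schema enforcement or evolution options, which this does not model.

```python
# Hypothetical data contract for an orders feed: column name -> expected Python type.
EXPECTED_SCHEMA = {"order_id": str, "customer_id": str, "amount": float}


def detect_schema_drift(batch, expected=EXPECTED_SCHEMA):
    """Report added, missing, and type-mismatched columns in a batch of dict rows."""
    expected_cols = set(expected)
    seen_cols, mismatched = set(), set()
    for row in batch:
        seen_cols.update(row.keys())
        for col, value in row.items():
            want = expected.get(col)
            # None values are treated as nulls and are not type-checked.
            if want is not None and value is not None and not isinstance(value, want):
                mismatched.add(col)
    return {
        "added": sorted(seen_cols - expected_cols),
        "missing": sorted(expected_cols - seen_cols),
        "type_mismatch": sorted(mismatched),
    }


if __name__ == "__main__":
    batch = [
        {"order_id": "O-1", "customer_id": "C-1", "amount": 10.0},
        {"order_id": "O-2", "customer_id": "C-2", "amount": "12.50", "coupon": "SPRING"},
    ]
    print(detect_schema_drift(batch))
    # {'added': ['coupon'], 'missing': [], 'type_mismatch': ['amount']}
```

A non-empty result could send the batch to quarantine or trigger a review, rather than letting it silently break Silver and Gold tables downstream.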

Common Challenges & How to Avoid Them

Medallion pitfalls

- Lack of governance: Bronze can become a data swamp without metadata and retention policies.

- Duplication: multiple Gold tables for similar needs can cause maintenance drift.

- Hidden lineage: transformations across layers need clear provenance to be trustworthy.
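One lightweight way to keep provenance visible is to stamp each derived row with the steps that produced it. This sketch uses a hypothetical `_lineage` column and a hypothetical `tracked` decorator, and it records only row-level step names. Dedicated catalog and lineage tooling typically captures much richer lineage, for example at the column level.

```python
import functools


def tracked(step_name):
    """Hypothetical decorator: append step_name to each output row's _lineage list."""
    def decorator(transform):
        @functools.wraps(transform)
        def wrapper(rows):
            out = []
            for row in transform(rows):
                row = dict(row)  # copy so rows returned by the transform are not mutated
                row["_lineage"] = list(row.get("_lineage", [])) + [step_name]
                out.append(row)
            return out
        return wrapper
    return decorator


@tracked("bronze:ingest_orders")
def ingest(rows):
    return [dict(r, _source="orders.csv") for r in rows]


@tracked("silver:clean_amounts")
def clean(rows):
    # Spreading **r carries the existing _lineage forward into the new row.
    return [{**r, "amount": float(r["amount"])} for r in rows]


if __name__ == "__main__":
    silver = clean(ingest([{"order_id": "O-1", "amount": "9.50"}]))
    print(silver[0]["_lineage"])  # ['bronze:ingest_orders', 'silver:clean_amounts']
```

One limitation is that aggregation steps produce new rows without a per-row history. For Gold tables, lineage is often better recorded at the table or job level.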

Data Vault pitfalls

- Complexity explosion: many small tables can be overwhelming without automation.

- Performance: direct BI queries over vault structures may be slow; use downstream materializations.

- Adoption gap: business users often prefer dimensional models, so plan for downstream translation.
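Hubs, links, and satellites follow fixed templates, so a common remedy for table sprawl is to generate them from metadata instead of hand-writing each one. This minimal sketch makes three assumptions:

- a hypothetical metadata dict;
- column conventions of `*_hk` hash keys, `load_ts`, `record_source`, and `hashdiff`;
- generic SQL types that would need adapting to your platform's dialect.

SQLite is used only to check that the generated statements parse. Dedicated Data Vault automation tooling exists, but it is not shown here.

```python
import sqlite3

# Hypothetical metadata describing the vault. Column names and SQL types are assumptions.
METADATA = {
    "hubs": {"customer": "customer_id", "order": "order_id"},
    "links": {"customer_order": ["customer", "order"]},
    "satellites": {"customer": {"name": "VARCHAR(255)", "country": "CHAR(2)"}},
}

LOAD_COLUMNS = ["load_ts TIMESTAMP NOT NULL", "record_source VARCHAR(100) NOT NULL"]


def _create(table, columns):
    body = ",\n  ".join(columns)
    return f"CREATE TABLE {table} (\n  {body}\n);"


def hub_ddl(entity, business_key):
    """Hub: hash key, business key, and load metadata."""
    cols = [f"{entity}_hk CHAR(32) NOT NULL", f"{business_key} VARCHAR(255) NOT NULL"]
    cols += LOAD_COLUMNS + [f"PRIMARY KEY ({entity}_hk)"]
    return _create(f"hub_{entity}", cols)


def link_ddl(name, entities):
    """Link: its own hash key plus the hash key of every hub it connects."""
    cols = [f"{name}_hk CHAR(32) NOT NULL"] + [f"{e}_hk CHAR(32) NOT NULL" for e in entities]
    cols += LOAD_COLUMNS + [f"PRIMARY KEY ({name}_hk)"]
    return _create(f"link_{name}", cols)


def sat_ddl(entity, attributes):
    """Satellite: parent hash key + load_ts as the key, a hashdiff, then attributes."""
    cols = [f"{entity}_hk CHAR(32) NOT NULL", *LOAD_COLUMNS, "hashdiff CHAR(32) NOT NULL"]
    cols += [f"{col} {sql_type}" for col, sql_type in attributes.items()]
    cols.append(f"PRIMARY KEY ({entity}_hk, load_ts)")
    return _create(f"sat_{entity}", cols)


def generate_all(meta):
    ddl = [hub_ddl(e, bk) for e, bk in meta["hubs"].items()]
    ddl += [link_ddl(n, hubs) for n, hubs in meta["links"].items()]
    ddl += [sat_ddl(e, attrs) for e, attrs in meta["satellites"].items()]
    return ddl


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # used only to check that the DDL parses
    for statement in generate_all(METADATA):
        print(statement, end="\n\n")
        conn.execute(statement)
```

Adding a new source entity then means adding a few metadata entries rather than writing several tables by hand.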

Trends & The Road Ahead

Cloud-native lakehouses and managed platforms are enabling hybrid patterns to flourish — think Delta Lake, Snowflake, or Fabric-style environments where Data Vault captures raw history and Medallion pipelines produce business-facing models. Tooling for automation, metadata management, and SQL-first transformation frameworks continues to mature, lowering the operational cost of both approaches (Matillion comparison).

Expect better integrations between modeling frameworks, more robust metadata ecosystems, and stronger templates for hybrid vault-medallion patterns as best practices converge.

FAQ

What are the 4 types of data modeling?

Commonly referenced types include conceptual, logical, physical, and dimensional models. Conceptual captures high-level entities and relationships; logical defines structure without platform specifics; physical maps designs to database tables and indexes; dimensional organizes data for analytics using facts and dimensions.

What are the three levels of data modeling?

The three canonical levels are conceptual, logical, and physical. Conceptual focuses on business concepts, logical on normalized structures and relationships, and physical on storage in a database. Dimensional models are often considered a specialization tailored for analytics.

What is a data modeling tool?

A data modeling tool is software that helps design, visualize, and maintain data models, from ER diagrams to schema evolution. Such tools often integrate with metadata catalogs and generate DDL or migration scripts to manage complexity, enforce standards, and support collaboration.

What is data modeling in SQL?

Data modeling in SQL means designing table schemas, keys, constraints, and relationships for relational databases or warehouses. It includes defining column types, indexes, and query patterns to ensure performance and correctness, often implemented via SQL-first transformation tools.
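As a small illustration of keys, constraints, relationships, and an index chosen for a query pattern, here is a sketch using Python's built-in `sqlite3` module with a hypothetical two-table customer/order model. SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`, which the sketch enables. Other databases differ in syntax and enforcement.

```python
import sqlite3

# Hypothetical customer/order model.
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL UNIQUE,
    country     TEXT NOT NULL CHECK (length(country) = 2)
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer (customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0),
    ordered_at  TEXT NOT NULL
);
-- Index supporting the common "orders for a customer" query pattern.
CREATE INDEX idx_orders_customer ON orders (customer_id);
"""


def create_model():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
    conn.executescript(SCHEMA)
    return conn


if __name__ == "__main__":
    conn = create_model()
    conn.execute("INSERT INTO customer VALUES (1, 'alice@example.com', 'NO')")
    conn.execute("INSERT INTO orders VALUES (10, 1, 19.99, '2024-01-01')")
    try:
        # customer_id 99 does not exist, so the foreign key rejects this row.
        conn.execute("INSERT INTO orders VALUES (11, 99, 5.0, '2024-01-02')")
    except sqlite3.IntegrityError as exc:
        print("rejected:", exc)
```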

What is meant by data modeling?

Data modeling is the practice of creating abstract representations of data structures and relationships to support applications, analytics, and governance. It aligns business concepts with technical implementation to ensure performance, consistency, and adaptability.

Final Thoughts

There’s no single “winner” in the Medallion vs Data Vault debate — only the right choice for your priorities. Medallion accelerates delivery and clarity for analytics; Data Vault gives you a durable, auditable foundation for complex, evolving source environments. Many teams find the sweet spot in a hybrid approach: capture everything in a Vault-style raw layer, then refine via Medallion pipelines into business-ready outputs.

Pick based on constraints, pilot wisely, automate metadata, and remember: the goal is useful, trusted data — not model purity. If you want help designing a pragmatic, production-ready approach that balances speed, governance, and cost, experts (like us) can help architect a strategy tailored to your needs.