Think of stream processing as the live sports broadcast of data: it delivers plays as they happen, not after the final buzzer. This article explains what stream processing is, why it matters for modern businesses, and how to decide when to choose streaming over traditional batch processing. You’ll get practical patterns, trade-offs to watch for, and a short checklist to help you move from theory to action without pulling your hair out.

Why stream processing matters

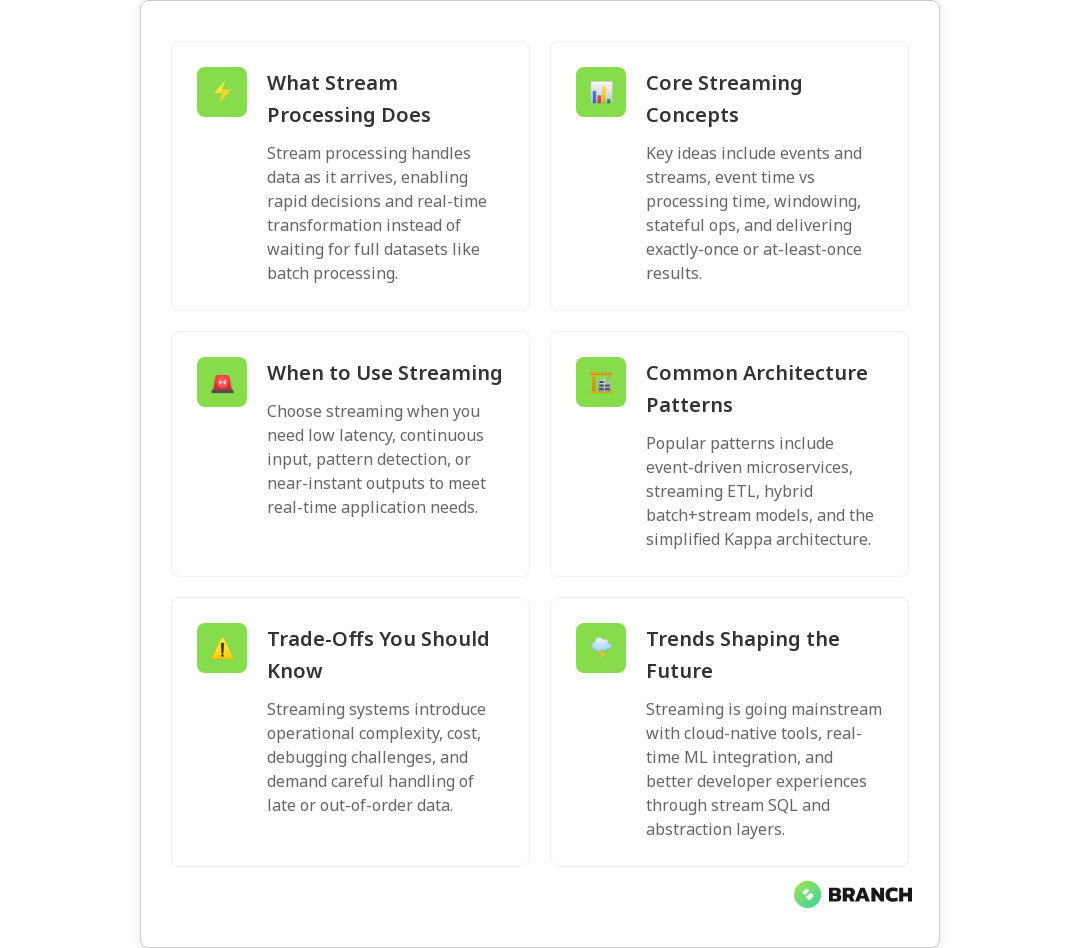

Data used to arrive in neat piles — nightly ETL jobs, weekly reports, monthly reconciliations — and batch processing was the unquestioned hero. But businesses increasingly need immediate, continuous insight: fraud alarms the moment a transaction looks suspicious, product recommendations that update as a visitor browses, or sensor alerts from connected equipment. Stream processing turns incoming events into timely decisions, enabling lower latency, better customer experiences, and faster operational response.

For a concise primer on what stream processing entails, Confluent’s introduction is a clear, friendly resource that highlights real-time transformation and common use cases like fraud detection and recommendations.

Core concepts (the parts that make streaming feel like magic)

Here are the foundational ideas you’ll see again and again when working with streams:

- Events and streams: A stream is an append-only sequence of events (user clicks, sensor readings, log lines). Processing happens continuously as events arrive.

- Processing time vs event time: Processing time is when the system sees the event; event time is when the event actually happened. Handling late or out-of-order events requires thinking in event time.

- Windowing: Windows group events into bounded sets so you can compute aggregates over meaningful intervals. Tumbling windows are fixed-size and non-overlapping, sliding windows overlap, and session windows close after a gap in activity.

- Stateful vs stateless: Some operations (simple transforms) are stateless; others (counts, joins, sessionization) require keeping state across events.

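- Delivery semantics: At-most-once delivery can drop events, at-least-once can deliver duplicates, and exactly-once avoids both at the cost of extra coordination. The guarantee you choose affects the correctness and complexity of downstream logic.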
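To make event time, windowing, and state a little more concrete, here is a minimal Python sketch that counts events per user in tumbling windows. The field names (`user`, `event_time`), the 60-second window size, and the helper functions are illustrative assumptions, not part of any particular framework. A real engine would also decide when a window is complete and how long to wait for late events, which this sketch ignores.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical window size for this sketch


def window_start(event_time: int, size: int = WINDOW_SECONDS) -> int:
    """Return the start of the tumbling window that contains event_time."""
    return event_time - (event_time % size)


def count_per_window(events, size: int = WINDOW_SECONDS) -> dict:
    """Count events per (user, window), keyed by event time rather than arrival order."""
    counts = defaultdict(int)  # state carried across events
    for event in events:
        key = (event["user"], window_start(event["event_time"], size))
        counts[key] += 1
    return dict(counts)


# Events listed in arrival (processing-time) order; the third one arrives out of order.
events = [
    {"user": "a", "event_time": 5},
    {"user": "a", "event_time": 65},
    {"user": "a", "event_time": 50},  # late: still belongs to the window starting at 0
    {"user": "b", "event_time": 70},
]

result = count_per_window(events)
print(result)
```

Because each event is assigned to a window by its own timestamp, the out-of-order event still lands in the first window. Grouping by arrival order would have put it in the second.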

When to use stream processing over batch

You don’t always need streaming. Batch is still the right tool for a lot of jobs. Use streaming when latency and continuous updates are core requirements. Here’s a practical decision guide:

- Latency matters: If decisions or user experiences depend on sub-second to minute-level freshness (fraud detection, live personalization, operational alerts), pick streaming.

- Continuous input: If data arrives continuously and you need ongoing computation rather than periodic snapshots, streaming fits better.

- Incremental computation: If you can maintain and update results as events come in rather than recomputing whole datasets, streaming is usually more efficient.

- Complex event patterns: Correlating patterns across events over time (like detecting sequences of suspicious actions) favors streaming.

- Downstream SLAs: If systems downstream expect near-real-time updates or push notifications, stream-first architectures simplify the flow.
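The incremental-computation point can be illustrated with a small Python sketch: a running mean that is updated once per event instead of being recomputed over the whole dataset. The class name and sample values are hypothetical, and the result is checked against a batch-style recomputation only to show that the two agree.

```python
class RunningMean:
    """Maintains a mean incrementally, using O(1) state per update."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        # Fold one new value into the existing mean without revisiting old data.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


amounts = [10.0, 20.0, 30.0, 40.0]  # hypothetical transaction amounts

running = RunningMean()
incremental = [running.update(x) for x in amounts]  # a result after every event

batch = sum(amounts) / len(amounts)  # batch style: recompute from the full dataset

print(incremental, batch)
```

The streaming version has an up-to-date answer after every event and never needs the full history. The batch version only has an answer once all the data is available.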

For a side-by-side comparison, GeeksforGeeks provides a straightforward look at batch versus stream processing, including how to choose based on latency needs.

Common streaming architecture patterns

There are a few patterns that keep showing up in real projects. Pick the pattern that best fits your operational constraints and team skills.

- Event-driven microservices: Services react to streams of domain events. Good for decoupling and scalability.

- Kappa-style architecture: Treat everything as a stream, including work you used to do in batch. This simplifies the stack because there are no separate batch and streaming layers to keep in sync.

- Streaming ETL: Ingest, transform, and route data in real time, then store final or aggregated results in databases or data lakes for serving and analytics.

- Hybrid (batch + stream): Use streaming for time-sensitive, incremental updates and batch for heavy historical reprocessing or large-scale model training.
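As a rough illustration of the streaming ETL pattern, the sketch below chains Python generators so each record flows through parse, transform, and route steps one at a time. The sensor fields, the 30-degree threshold, and the in-memory lists standing in for sinks are all assumptions made for the example. A production pipeline would read from a broker and write to real storage.

```python
import json
from typing import Iterable, Iterator


def parse(lines: Iterable[str]) -> Iterator[dict]:
    """Ingest: turn raw lines into records, skipping malformed input."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue  # a real pipeline would likely send this to a dead-letter sink


def enrich(records: Iterable[dict]) -> Iterator[dict]:
    """Transform: add a derived field to each record."""
    for record in records:
        yield {**record, "fahrenheit": record["celsius"] * 9 / 5 + 32}


def route(records: Iterable[dict], hot_sink: list, normal_sink: list,
          threshold: float = 30.0) -> None:
    """Route: send each record to a sink based on its content."""
    for record in records:
        target = hot_sink if record["celsius"] > threshold else normal_sink
        target.append(record)


raw = [
    '{"sensor": "s1", "celsius": 21.0}',
    "not json",
    '{"sensor": "s2", "celsius": 35.0}',
]

hot, normal = [], []  # stand-ins for an alerting topic and a data lake table
route(enrich(parse(raw)), hot, normal)
print(hot, normal)
```

Because generators are lazy, no step waits for the full input: each record is parsed, enriched, and routed before the next one is read.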

Splunk’s overview on stream processing does a great job of explaining how continuous ingestion and low-latency insight drive these architectures in fields like finance and IoT.

Trade-offs and challenges (the not-so-fun but necessary bits)

Streaming brings power, but also complexity. Expect to trade simplicity for speed in several areas:

- Operational complexity: Stateful processors, checkpointing, and managing exactly-once semantics require more operational thought than simple batch jobs.

- Testing and debugging: Reproducing errors in a continuously running system can be trickier than replaying a batch job.

- Cost model: Continuous compute and storage for state can be more expensive than periodic batch runs — but the business value often justifies it.

- Data correctness: Handling late-arriving or out-of-order events and ensuring idempotent updates take careful design.
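One common way to keep results correct under at-least-once delivery is to make updates idempotent, for example by remembering which event IDs have already been applied. The sketch below is a simplified, in-memory version of that idea. The `event_id`, `account`, and `amount` fields are hypothetical, and a real system would need to bound the set of seen IDs and persist it together with the state.

```python
class IdempotentBalances:
    """Applies each event at most once, even if it is delivered repeatedly."""

    def __init__(self) -> None:
        self.balances: dict = {}
        self.seen_ids: set = set()

    def apply(self, event: dict) -> bool:
        """Return True if the event changed state, False if it was a duplicate."""
        if event["event_id"] in self.seen_ids:
            return False  # redelivery: ignore so the balance is not double-counted
        self.seen_ids.add(event["event_id"])
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["amount"]
        return True


deliveries = [
    {"event_id": "e1", "account": "acct-1", "amount": 100},
    {"event_id": "e2", "account": "acct-1", "amount": -30},
    {"event_id": "e1", "account": "acct-1", "amount": 100},  # duplicate delivery
]

store = IdempotentBalances()
applied = [store.apply(event) for event in deliveries]
print(applied, store.balances)
```

Without the duplicate check, the redelivered event would leave the balance at 170 instead of 70.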

Implementation checklist — practical steps to get started

Here’s a short checklist to move from “we should do streaming” to “we’re reliably running streams in production.”

- Define business SLAs: What freshness and correctness guarantees do you need? The answers drive your technology and architecture choices.

- Model events: Design clear, versioned event schemas and plan for schema evolution.

- Choose your processing model: Stateless transforms vs stateful windowed computations — choose frameworks that support your needs.

- Plan for delivery semantics: Decide whether at-least-once is acceptable or if you need exactly-once processing and pick tooling that supports it.

- Build observability: Expose metrics and tracing, set explicit retention policies, and keep logs replayable so you can reprocess historical data.

- Test with production-like data: Simulate out-of-order and late events, and run chaos tests for backpressure and failures.

- Deploy with CI/CD: Automate deployments and include migration/rollback plans for stateful processors.
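For the "model events" step, one possible approach to schema evolution is to tag every event with a version and upgrade older events on read. The Python sketch below assumes a hypothetical change in which version 1 events used a `user` field and version 2 renamed it to `user_id` and added a `channel` field. The field names and the default value are made up for illustration.

```python
CURRENT_VERSION = 2  # hypothetical latest schema version


def upcast(event: dict) -> dict:
    """Upgrade an event to the current schema version so consumers see one shape."""
    version = event.get("schema_version", 1)  # assume untagged events are version 1
    if version == 1:
        # v1 -> v2: rename "user" to "user_id" and add "channel" with a default.
        event = {
            "schema_version": 2,
            "user_id": event["user"],
            "action": event["action"],
            "channel": "unknown",
        }
        version = 2
    if version != CURRENT_VERSION:
        raise ValueError(f"unsupported schema version: {version}")
    return event


old_event = {"user": "u1", "action": "click"}
new_event = {"schema_version": 2, "user_id": "u2", "action": "view", "channel": "web"}

upgraded = [upcast(old_event), upcast(new_event)]
print(upgraded)
```

Keeping the upgrade logic in one place means replayed historical events and fresh events can go through the same downstream code.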

When batch still wins

Batch processing isn’t dead. It’s efficient and simpler for many workloads:

- Large-scale historical analytics, machine learning training on full datasets, and periodic reporting are great batch candidates.

- If your business is okay with hourly or daily freshness, batch reduces complexity and cost.

- Use batch when reproducibility (rebuilding everything exactly from raw data) and peak cost efficiency are primary goals.

The GeeksforGeeks comparison mentioned earlier is a useful way to map your specific needs to the right approach.

Trends and what’s next

Streaming is moving from niche to mainstream. Key trends to watch:

- Cloud-native streaming: Managed platforms reduce operational burden and make streaming accessible to teams without deep ops expertise.

- Convergence of analytics and operational systems: Real-time ML inference and feature updates mean streaming is increasingly part of ML workflows.

- Better developer ergonomics: Higher-level stream SQL and stream-first frameworks let product teams work faster without sacrificing correctness.

Redpanda’s fundamentals guide and Splunk’s blog both emphasize that real-time insights and operational responsiveness are central to modern businesses’ competitive advantage.

FAQ

What do you mean by stream processing?

Stream processing is the continuous ingestion, transformation, and analysis of data as events arrive, rather than waiting for a complete dataset. It enables low-latency computations like rolling aggregates, pattern detection, and real-time transformations so systems can act on data immediately.

Why is stream processing important?

Many modern business problems require immediate action or continuous updating: fraud prevention, live personalization, and operational monitoring. Stream processing reduces decision latency and can provide near-instant insights that batch systems can’t deliver in time.

Is stream processing real-time?

“Real-time” can mean different things depending on context. Stream processing enables near-real-time or real-time behaviors (sub-second to seconds latency), but actual latency depends on system design, infrastructure, and processing complexity. For details on common use cases and latency considerations, Confluent’s primer is a good practical resource.

How is stream processing different from traditional data processing?

Traditional (batch) processing collects data over a period, then processes it in bulk. Stream processing handles data continuously as it arrives. The difference shows up in latency, architecture complexity, state management, and cost profiles. Batch is easier to reason about and cheaper for infrequent jobs; streaming is necessary when timeliness and incremental updates matter.

What is the difference between batch and streaming dataflow?

Batch dataflow handles bounded sets of data with clear start and end times, whereas streaming dataflow processes unbounded, continuously growing datasets. Stream processing emphasizes windowing, event-time semantics, and incremental state updates; batch workflows focus on bulk operations, full recomputation, and scheduled runs.