Stream processing is no longer a niche topic for big data teams — it’s a core capability for businesses that want real-time analytics, responsive user experiences, and faster decision-making. If you’re evaluating Apache Beam and Kafka Streams, you’re asking the right question: both are powerful, but they solve overlapping yet distinct problems. In this article you’ll learn how each framework works, where they shine, the operational trade-offs, and practical guidance to help you pick the best fit for your project.

Why this decision matters

Choosing the right stream processing framework influences developer productivity, operational complexity, cost, and system behavior under failure. The wrong choice can mean expensive rework or architecture constraints that slow growth. We’ll break down the technical and business trade-offs so you can pick a framework that supports your product roadmap — not one that forces you to bend your requirements to its limitations.

High-level comparison: models and philosophies

At a glance, the two projects take different approaches:

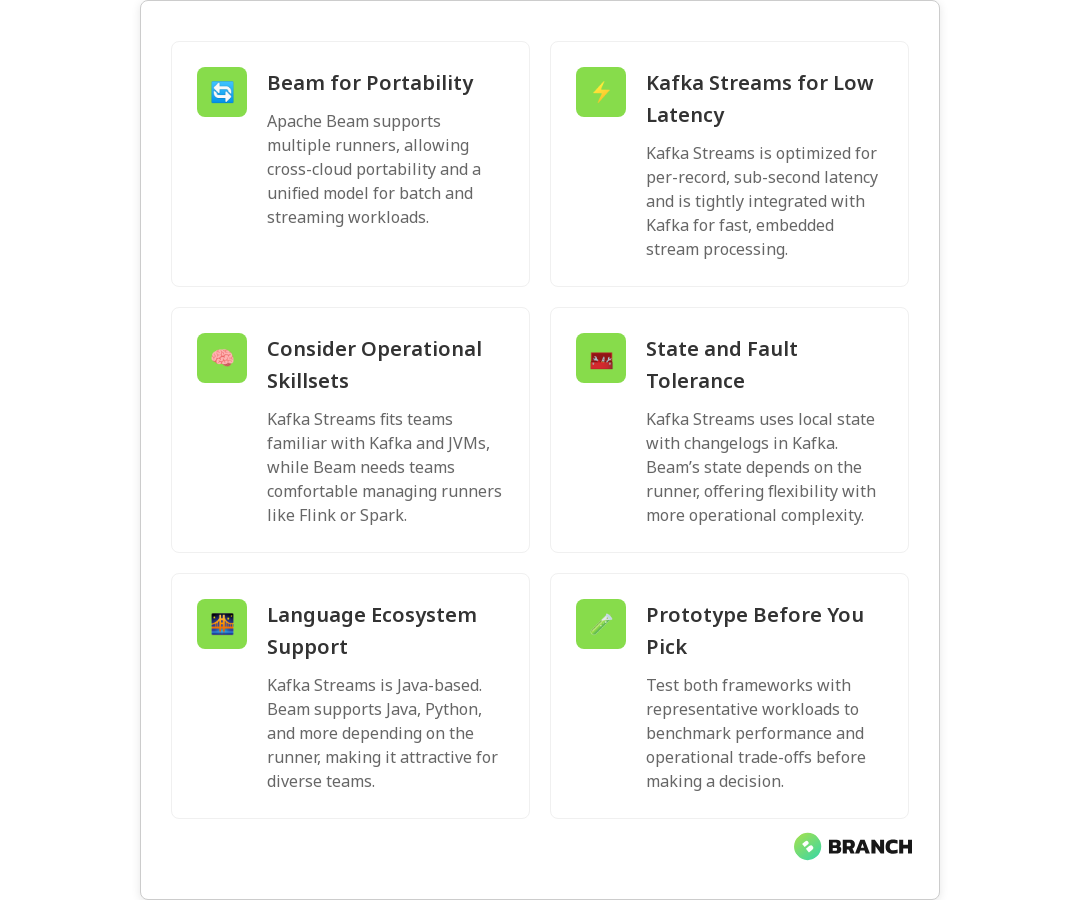

- Apache Beam is a unified programming model for both batch and streaming that runs on multiple execution engines (called runners) such as Flink, Spark, and Google Cloud Dataflow. It’s about portability and consistency across execution environments — write once, run anywhere (within supported runners) — which can be a huge win for teams anticipating changing infrastructure or cloud providers. See the Apache Beam overview for more background.

- Kafka Streams is a lightweight library specifically designed to process streams from Apache Kafka. It embeds processing in your application and optimizes for Kafka-native patterns — local state per instance, tight integration with Kafka’s consumer/producer model, and the kind of per-record latency modern applications need.

This difference — portability vs Kafka-native simplicity — is the axis that usually decides the choice.

Core technical differences

Programming model and portability

Apache Beam gives you a higher-level abstraction (PTransforms, windows, watermarks) that maps onto different runners. That means one Beam pipeline can be executed on Flink, Spark, or Dataflow without rewriting business logic, as long as the target runner supports the features the pipeline relies on. This makes Beam a great choice for teams that value portability or that work across clouds. The Confluent primer on Beam explains the unified model and runner flexibility in practical terms.
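
To make the window and watermark vocabulary concrete, here is a minimal, framework-free sketch in plain Python. It is not Beam's API: the function names `assign_window` and `window_counts`, the `max_out_of_order` parameter, and the watermark heuristic (largest event time seen minus a fixed bound) are all assumptions chosen for illustration. Real runners track watermarks and late data with considerably more machinery.

```python
from collections import defaultdict

def assign_window(event_time, size):
    """Return the [start, end) tumbling window that contains event_time."""
    start = (event_time // size) * size
    return (start, start + size)

def window_counts(events, size, max_out_of_order):
    """Count events per (key, window), dropping events behind the watermark.

    events: iterable of (key, event_time) tuples in arrival order.
    The watermark is a simple heuristic (an assumption of this sketch):
    the largest event time seen so far minus max_out_of_order.
    """
    counts = defaultdict(int)
    dropped = []
    watermark = float("-inf")
    for key, event_time in events:
        watermark = max(watermark, event_time - max_out_of_order)
        window = assign_window(event_time, size)
        if window[1] <= watermark:
            # The window already closed before this event arrived: late data.
            dropped.append((key, event_time))
            continue
        counts[(key, window)] += 1
    return dict(counts), dropped

# Events arrive out of order; the last one is too late for its window.
events = [("a", 1), ("a", 4), ("b", 12), ("a", 8), ("a", 2), ("b", 27), ("a", 3)]
counts, dropped = window_counts(events, size=10, max_out_of_order=5)
print(counts)
print(dropped)
```

Events that arrive slightly out of order (such as `("a", 2)` after `("b", 12)`) still land in their window, while an event that shows up after the watermark has passed the end of its window is treated as late. Beam expresses the same ideas declaratively and leaves the watermark mechanics to the runner.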

Kafka Streams, by contrast, is a library you embed in JVM applications. It’s not portable in the Beam sense — it’s intentionally tied to Kafka and the JVM ecosystem, but that tight coupling brings simplicity and performance advantages for Kafka-centric stacks.

State management and fault tolerance

Both frameworks support stateful processing and fault tolerance, but they approach it differently. Kafka Streams keeps state in local stores on each instance (RocksDB by default) and backs them with changelog topics in Kafka, so a restarted or replacement instance can rebuild its state by replaying the changelog. It’s a pragmatic, operationally straightforward approach for Kafka-based deployments.
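
The changelog idea is easy to sketch without any Kafka dependency. The toy class below is an assumption-laden illustration, not the Kafka Streams API: `ChangelogBackedStore` is a hypothetical name, a Python list stands in for the changelog topic, and a dict stands in for the local store.

```python
class ChangelogBackedStore:
    """Toy key-value store that mirrors every write to an append-only log."""

    def __init__(self, changelog):
        self.changelog = changelog  # stands in for a Kafka changelog topic
        self.local = {}             # stands in for the instance-local store

    def put(self, key, value):
        self.changelog.append((key, value))  # record the change first
        self.local[key] = value

    def delete(self, key):
        self.changelog.append((key, None))   # None acts as a tombstone
        self.local.pop(key, None)

    def get(self, key):
        return self.local.get(key)

    @classmethod
    def restore(cls, changelog):
        """Rebuild local state on a fresh instance by replaying the log."""
        store = cls(changelog)
        for key, value in changelog:
            if value is None:
                store.local.pop(key, None)
            else:
                store.local[key] = value
        return store

changelog = []
store = ChangelogBackedStore(changelog)
store.put("user-1", 3)
store.put("user-2", 7)
store.put("user-1", 4)
store.delete("user-2")

# Simulate losing the instance: local state is gone, the changelog survives.
recovered = ChangelogBackedStore.restore(changelog)
print(recovered.local)
```

Because every change is in the log, the recovered store ends up identical to the one that was lost. The trade-off to keep in mind is that restore time grows with the amount of changelog data that has to be replayed.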

Beam delegates execution to runners which provide state, checkpointing, and exactly-once guarantees depending on the chosen runner. If you pick Flink as the runner, you get Flink’s advanced state backends and checkpointing behavior. This gives Beam flexibility but also means you’re responsible for understanding the guarantees and operational model of the runner you choose.

Latency, throughput, and performance

If your priority is sub-second per-record processing with minimal overhead, Kafka Streams is often the better fit. Kafka Streams is optimized for Kafka-native use cases and excels at low-latency, lightweight stateful operations — think real-time transforms, aggregations, and enrichment with local state. One comparison of stream engines highlights Kafka Streams’ per-record latency strengths.

Beam’s performance depends on the runner; some runners (e.g., Flink) are competitive for low-latency workloads, while others may be better suited to high-throughput or batch-heavy pipelines. If raw latency is critical, measure with your expected workload and chosen runner — performance characteristics can vary significantly between environments.
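
If you do measure, look at the latency distribution rather than an average. The following is a minimal sketch of a per-record timing harness in plain Python; `measure_latency` and the `enrich` transform are hypothetical stand-ins, and in a real evaluation you would time the end-to-end path through your actual framework, runner, and Kafka cluster instead of an in-process function.

```python
import statistics
import time

def measure_latency(process, records):
    """Time process(record) for each record and return latency stats in ms."""
    samples = []
    for record in records:
        start = time.perf_counter()
        process(record)
        samples.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {
        "count": len(samples),
        "p50_ms": cuts[49],
        "p99_ms": cuts[98],
        "max_ms": max(samples),
    }

def enrich(record):
    # Hypothetical stand-in for a per-record transform.
    return {**record, "total": record["price"] * record["qty"]}

records = [{"price": 2.5, "qty": i} for i in range(1000)]
stats = measure_latency(enrich, records)
print(stats)
```

Tail percentiles such as p99 usually matter more than the median for user-facing workloads, and they are where differences between runners and configurations tend to show up.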

Operational and developer experience

Both frameworks require operational competence, but their operational profiles differ.

- Kafka Streams: You operate Kafka and your application instances. Scaling is conventional: you run more instances of the application, with parallelism capped by the number of input topic partitions. Local state makes operations simple in many Kafka environments, and deployment integrates well with containerized or VM-based app infrastructure.

- Apache Beam: In addition to your pipeline code, you operate the chosen runner (Flink/Spark/Dataflow). This can mean more moving parts but also allows separation of concerns: Beam for logic, the runner for execution. If you’re using cloud managed runners (e.g., Dataflow), you offload some operational burden in exchange for service costs.
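
The "run more instances" scaling model for Kafka Streams has a ceiling worth seeing in miniature: work is divided by input partition, so instances beyond the partition count have nothing to do. The sketch below uses a toy round-robin assignment; `assign_partitions` and the instance names are hypothetical, and the real assignment logic in Kafka Streams is more sophisticated (it also accounts for state locality, for example).

```python
def assign_partitions(num_partitions, instances):
    """Spread partition ids across instances round-robin (toy assignor)."""
    assignment = {name: [] for name in instances}
    for partition in range(num_partitions):
        owner = instances[partition % len(instances)]
        assignment[owner].append(partition)
    return assignment

# Six input partitions shared by two instances.
two = assign_partitions(6, ["app-1", "app-2"])

# Scaling to eight instances: two of them receive no partitions.
eight = assign_partitions(6, [f"app-{i}" for i in range(1, 9)])
idle = [name for name, parts in eight.items() if not parts]

print(two)
print(idle)
```

In practice this means the partition count of your input topics is a capacity-planning decision, not just a Kafka detail.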

Ease of operations often comes down to the team’s skill set and infrastructure preferences. If your team already runs Kafka and JVM services comfortably, Kafka Streams may be the path of least resistance. If you’re standardizing on an execution engine or expect to run pipelines on multiple backends, Beam’s portability can reduce long-term complexity.

Use cases: when to pick each

Pick Kafka Streams when:

- Your architecture is Kafka-centric and you want simplicity and low-latency per-record processing.

- You prefer embedding processing logic directly in services rather than managing a separate stream processing cluster.

- Your language and ecosystem are JVM friendly (Java, Scala, Kotlin).

- You need lightweight stateful operations that rely on Kafka for durability.

Pick Apache Beam when:

- You need a unified model for both batch and streaming workloads and want to run pipelines on different runners over time.

- You anticipate changing execution environments or cloud providers and want portability.

- Your team values a higher-level abstraction for complex event-time and windowing semantics.

- You want to leverage runner-specific strengths (e.g., Flink’s stream processing features or Dataflow’s managed operations).

Common challenges and trade-offs

No framework is perfect. Here are common trade-offs to weigh:

- Complexity vs control: Beam offers more abstraction and portability but can introduce complexity when debugging or tuning across different runners. Kafka Streams is simpler but less portable.

- Operational burden: Running Beam on an unmanaged Flink cluster means extra ops work; managed runners reduce that but add cost and potential vendor lock-in.

- Language support: Kafka Streams is JVM-only; Beam has SDKs for Java, Python, and Go, though feature coverage varies by SDK and runner. If your team uses Python heavily, Beam may be more attractive.

- Performance nuances: Throughput and latency depend heavily on topology, state size, and runner configuration, so don’t assume one framework will always outperform the other. Validate expectations against published engine comparisons and, above all, against benchmarks on your own workload.

Trends and ecosystem considerations

Stream processing ecosystems continue evolving. The move toward serverless and managed services for streaming (like managed runners) reduces operational complexity. At the same time, Kafka itself is broadening its ecosystem, and hybrid approaches (using Kafka for ingestion and Beam or Flink for heavy processing) are common.

Community support, active development, and integration with cloud-native tooling are practical factors. Articles comparing engines note that Beam’s ability to target different runners is a strategic advantage for multi-cloud architectures, while Kafka Streams remains compelling for single-provider Kafka-first stacks.

Decision checklist: quick questions to guide your choice

- Is Kafka already the backbone of your data platform? If yes, Kafka Streams is a natural fit.

- Do you need portability across execution engines or clouds? If yes, lean toward Apache Beam.

- Are low per-record latency and JVM-native integration critical? Kafka Streams likely wins.

- Does your team prefer higher-level abstractions for event-time semantics and complex windowing? Beam provides these features.

- What operational resources and expertise do you have? Managed runners vs self-hosted apps is an important operational trade-off.
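
If it helps to make the checklist explicit, it can be written down as a small tally. This is only a sketch: the `ProjectProfile` fields and the equal weighting are assumptions for illustration, and the operational question is deliberately left out of the score because it does not point to either framework on its own.

```python
from dataclasses import dataclass

@dataclass
class ProjectProfile:
    kafka_is_backbone: bool
    needs_portability: bool
    latency_and_jvm_critical: bool
    needs_rich_event_time: bool

def lean(profile):
    """Return which framework the checklist answers lean toward."""
    kafka_streams = sum([profile.kafka_is_backbone,
                         profile.latency_and_jvm_critical])
    beam = sum([profile.needs_portability,
                profile.needs_rich_event_time])
    if kafka_streams > beam:
        return "kafka_streams"
    if beam > kafka_streams:
        return "beam"
    return "prototype both"

kafka_first = ProjectProfile(True, False, True, False)
multi_cloud = ProjectProfile(False, True, False, True)
mixed = ProjectProfile(True, True, False, False)

print(lean(kafka_first), lean(multi_cloud), lean(mixed))
```

A tie is a useful result in its own right: it suggests prototyping both options against your workload before committing.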

Practical migration tip

If you need both portability and Kafka-native performance, consider a hybrid strategy: use Kafka Streams for the low-latency front line and Beam for heavier, multi-runner analytics pipelines. This lets you optimize for latency where it matters and maintain flexible, portable analytic pipelines for reporting and batch workloads.

FAQ

What do you mean by stream processing?

Stream processing is the continuous, real-time handling of data as it flows through a system. Instead of processing data in scheduled batches, stream processing reacts to each event (or small groups of events) immediately, enabling live analytics, alerts, and real-time transformations.

Why is stream processing important?

Stream processing enables businesses to act on data instantly — think fraud detection, personalization, live metrics, or operational monitoring. It reduces time-to-insight, improves user experiences, and enables new product capabilities that aren’t possible with batch-only processing.

How is stream processing different from traditional data processing?

Traditional (batch) processing collects data over a window of time and processes it in bulk. Stream processing processes events continuously as they arrive, often with stricter latency and state consistency requirements. Stream processing also emphasizes event-time semantics (handling late or out-of-order events) and windowing.

What is a stream processing framework?

A stream processing framework is software that provides the abstractions and runtime for processing continuous data streams. It handles details like event-time processing, windows, state management, fault tolerance, and scaling so developers can focus on business logic. Examples include Apache Beam (with runners), Kafka Streams, Flink, and Spark Structured Streaming.

What are the capabilities of stream processing?

Common capabilities include event-time windowing, stateful processing, exactly-once or at-least-once delivery semantics, fault tolerance, scalability, and integrations with messaging systems and storage. Different frameworks emphasize different capabilities — for example, Beam prioritizes portability and unified batch/stream APIs, while Kafka Streams prioritizes Kafka-native low-latency processing.

Final thoughts

There’s no universally “right” answer between Apache Beam and Kafka Streams. If your world revolves around Kafka and you need low-latency, JVM-native processing with straightforward operations, Kafka Streams will likely get you the fastest path to production. If you value portability, want a unified batch-and-stream API, or need to target multiple execution backends, Apache Beam is the better long-term bet. The smart move is to prototype, measure, and align the choice with your team’s skills and your business goals.

If you’d like help evaluating, building, or operating your streaming pipeline, we design tailored solutions that balance engineering trade-offs with business outcomes — and we promise to explain our choices without too much jargon (or too many metaphors involving rivers and pipelines).

For additional technical comparisons and practical overviews referenced in this article, see the Apache Beam overview, the Confluent Apache Beam introduction, a comparative guide of stream processing frameworks, recent engine comparisons that examine latency and throughput trade-offs, and a detailed Kafka vs Beam comparison.