Most B2B leaders know they should document their IT systems, but few realize just how much poor documentation is costing them. Beyond the obvious frustrations of hunting down passwords or trying to understand legacy code, inadequate IT documentation creates hidden inefficiencies that compound over time, especially as teams grow and technology stacks become more complex.

Whether you’re managing a startup’s first engineering hire or overseeing digital transformation at an established company, the quality of your IT documentation directly impacts onboarding speed, operational reliability, and your ability to scale without chaos. Yet many organizations treat documentation as an afterthought, documenting only when problems arise rather than building it into their workflows from the start.

This article explores why IT documentation deserves more strategic attention than most businesses give it, when to invest in different types of documentation, and how to avoid the common pitfalls that turn documentation from a productivity tool into a burden.

The Hidden Costs of Poor IT Documentation

The real impact of inadequate documentation often doesn’t surface until teams are under pressure. A well-funded startup with 60 engineers can still struggle with basic onboarding because no one documented how systems actually work. New developers spend weeks figuring out codebases that should take days to understand, while senior engineers get pulled into constant “how does this work?” conversations instead of building new features.

These costs compound quickly across several areas:

- Extended onboarding cycles: New team members take 2-3x longer to become productive when systems aren’t documented

- Increased technical debt: Undocumented decisions get repeated or contradicted, leading to inconsistent implementations

- Operational brittleness: Critical knowledge lives in individual heads rather than accessible systems

- Scope creep and miscommunication: Stakeholders make different assumptions about how systems work or what’s possible

- Security vulnerabilities: Undocumented access patterns and dependencies create blind spots in security reviews

For managed IT service providers and their clients, these issues are particularly acute. When multiple teams or vendors need to work together, missing documentation doesn’t just slow down individual contributors; it breaks entire workflows and erodes trust between stakeholders.

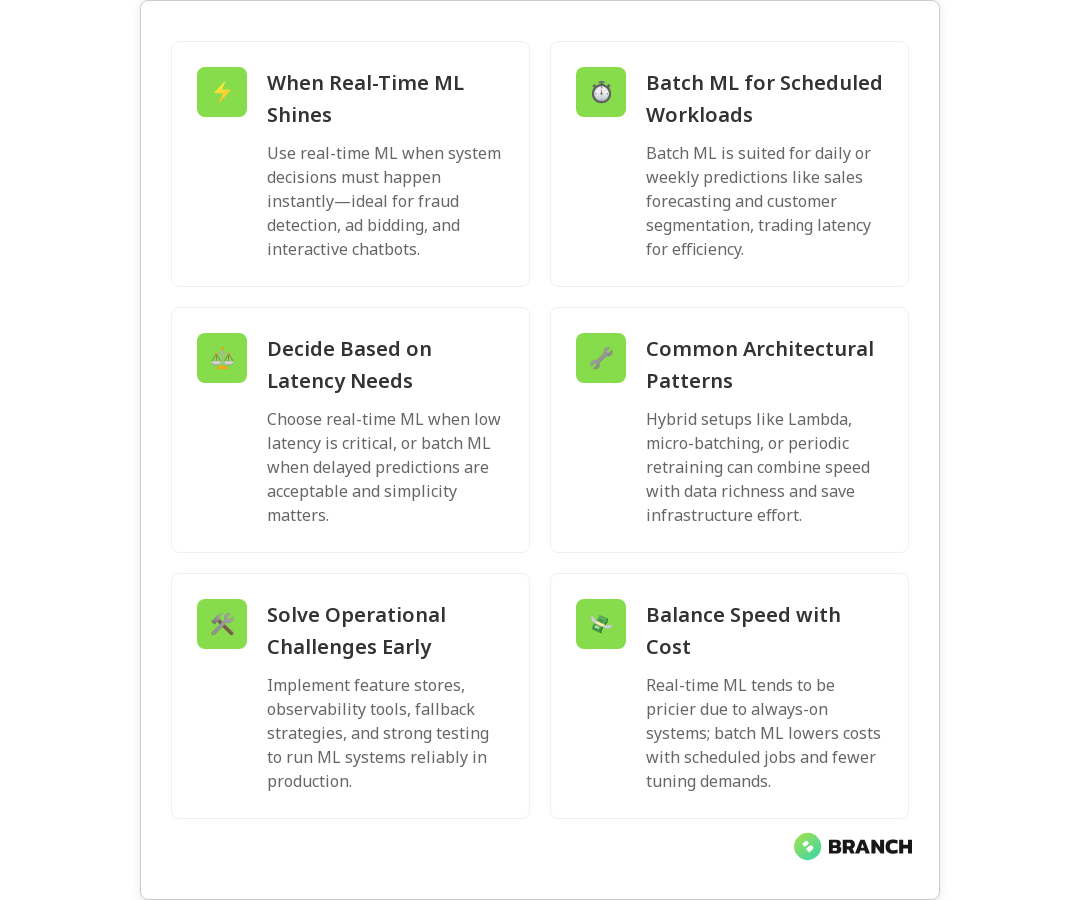

Understanding Documentation Types and When They Matter

Not all documentation serves the same purpose, and over-documenting can be just as problematic as under-documenting. The key is matching documentation type and depth to actual business needs and usage patterns.

| Documentation Type | Primary Purpose | Best For | Update Frequency |
|---|---|---|---|
| System Architecture | High-level system relationships and data flows | Technical strategy, security reviews, vendor coordination | Quarterly or after major changes |
| API Documentation | Interface specifications and usage examples | Integration work, third-party development | With each API release |
| Operational Runbooks | Step-by-step procedures for common tasks | Incident response, routine maintenance, onboarding | Monthly or as processes change |
| Code Documentation | Inline explanations of complex logic or decisions | Developer productivity, maintenance, debugging | Continuous with development |
| Business Process Maps | How technology supports business workflows | Stakeholder alignment, requirements gathering | Annually or with process changes |

The most effective documentation strategies focus on creating just enough structure to support actual workflows without becoming bureaucratic overhead. This means understanding who will use each type of documentation and in what contexts.
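The update frequencies in the table can be turned into a simple automated reminder. The following is a minimal sketch, not a prescribed tool: the `Doc` record, the `overdue_docs` helper, the sample documents, and the mapping of "quarterly", "monthly", and "annually" to 90, 30, and 365 days are all assumptions made for illustration. Documentation types that update on events (API releases, ongoing development) are deliberately skipped because a calendar check does not apply to them.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed day counts for the time-based cadences in the table above.
REVIEW_INTERVAL_DAYS = {
    "System Architecture": 90,     # "Quarterly"
    "Operational Runbooks": 30,    # "Monthly"
    "Business Process Maps": 365,  # "Annually"
}


@dataclass
class Doc:
    """Hypothetical record describing one piece of documentation."""
    title: str
    doc_type: str
    last_reviewed: date


def overdue_docs(docs, today):
    """Return the docs whose last review is older than their type's interval."""
    overdue = []
    for doc in docs:
        interval = REVIEW_INTERVAL_DAYS.get(doc.doc_type)
        if interval is None:
            # Event-driven types (API docs, code docs) have no calendar cadence.
            continue
        if today - doc.last_reviewed > timedelta(days=interval):
            overdue.append(doc)
    return overdue


# Illustrative data only.
docs = [
    Doc("Payments architecture", "System Architecture", date(2024, 1, 10)),
    Doc("Database failover runbook", "Operational Runbooks", date(2024, 5, 20)),
    Doc("Public API reference", "API Documentation", date(2023, 1, 1)),
]

stale = overdue_docs(docs, today=date(2024, 6, 1))
print([d.title for d in stale])  # ['Payments architecture']
```

A check like this only flags calendar drift. The "after major changes" triggers in the table still depend on people noticing the change and updating the document.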

Read more: How DataOps principles improve collaboration and consistency across technical teams.

Building Documentation That Actually Gets Used

The biggest documentation failures happen when teams create comprehensive documents that nobody maintains or references. Sustainable documentation requires thinking about incentives, workflows, and maintenance from the start.

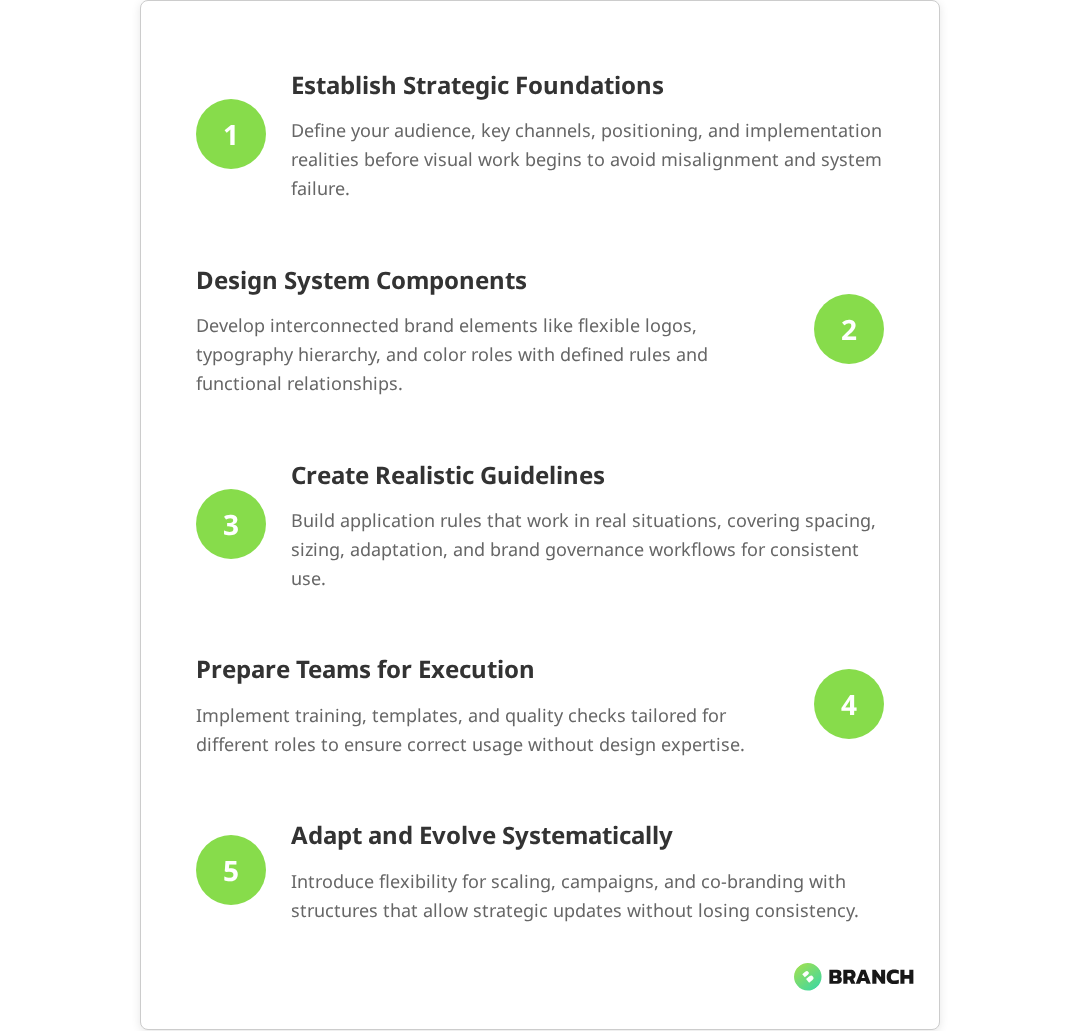

Start With Stakeholder Context, Not Technical Details

Effective IT documentation begins with understanding the business context and stakeholder needs, not with technical specifications. When non-technical decision-makers can understand why systems are designed certain ways, they’re more likely to support the documentation process and make informed decisions about changes.

This approach is particularly valuable during product discovery and early development phases, when documentation serves as a shared language between technical and business stakeholders. Rather than diving into implementation details, start with questions like:

- What business problems does this system solve?

- Who are the key users and what are their workflows?

- What are the critical dependencies and failure points?

- How does this integrate with existing systems and processes?

Create Templates That Scale With Project Size

One-size-fits-all documentation approaches often fail because they either overwhelm small projects or provide insufficient structure for complex ones. Consider developing different documentation templates based on project scope:

- Small initiatives: Single-page overview with architecture diagram, key decisions, and contact information

- Medium projects: Structured documentation covering requirements, architecture, deployment, and maintenance procedures

- Large systems: Comprehensive documentation with detailed technical specifications, operational procedures, and governance frameworks

This tiered approach helps teams avoid the paralysis that comes from trying to create enterprise-grade documentation for every small feature or fix.

What the Research Says

Industry research and best practice studies reveal several key insights about IT documentation effectiveness:

- Documentation quality directly correlates with team productivity. Organizations with well-maintained documentation report 40-50% faster onboarding times and fewer operational incidents.

- Visual documentation elements are significantly more effective than text-only approaches. Architecture diagrams and process flows are retained and referenced at much higher rates than lengthy written specifications.

- Documentation maintenance requires dedicated ownership and processes. Teams that assign specific documentation ownership and establish regular review cycles see 3x higher documentation accuracy rates.

- Context-driven documentation strategies outperform comprehensive approaches. Early evidence suggests that targeting documentation to specific user needs and workflows provides better ROI than attempting to document everything comprehensively, though more research is needed on optimal documentation scope and depth.

Documentation as a Strategic Tool, Not Just a Technical Requirement

The most sophisticated organizations treat documentation as a strategic tool for reducing risk and enabling growth, not just a technical requirement. This perspective shift changes how documentation gets prioritized and funded.

Risk Mitigation and Knowledge Management

From a business continuity perspective, documentation serves as insurance against key person risk. When critical system knowledge exists only in individual heads, organizations face significant vulnerability if those people leave or become unavailable. This is especially problematic for managed IT service providers working across multiple client environments.

Well-structured documentation also supports better security and compliance outcomes. Data lineage and governance requirements often mandate clear documentation of how information flows through systems, making documentation a compliance necessity rather than just a best practice.

Enabling Faster Decision-Making

When stakeholders can quickly understand how systems work and what a proposed change might affect, they can make decisions faster and with more confidence. This is particularly valuable during digital transformation projects, where understanding current state architecture is essential for planning future state improvements.

Documentation also supports more effective vendor management. When organizations can clearly articulate their current systems and requirements, they can evaluate potential partners more effectively and set clearer expectations for project outcomes.

Common Documentation Pitfalls and How to Avoid Them

Even well-intentioned documentation efforts can become counterproductive if they fall into common traps. Understanding these pitfalls helps organizations create more sustainable documentation practices.

Over-Documentation That Slows Down Teams

Some organizations react to documentation problems by creating extensive documentation requirements that slow down development and create maintenance overhead. This approach often backfires, especially in fast-moving startup environments where agility is critical.

The key is distinguishing between decision documentation (why choices were made) and implementation documentation (how things currently work). Decision documentation has longer-term value and changes infrequently, while implementation documentation needs to stay current with code changes.

Fragmented Documentation Across Multiple Systems

When documentation lives in multiple tools and formats, it becomes difficult to maintain consistency and find information when needed. This fragmentation often happens organically as teams adopt different tools, but it creates significant friction over time.

Consider establishing a single source of truth for each type of documentation, with clear ownership and update responsibilities. This doesn’t mean everything needs to live in one tool, but it does mean having intentional choices about where information lives and how it stays synchronized.

Documentation Without Clear Ownership or Update Cycles

Documentation that nobody owns inevitably becomes stale and unreliable. The most effective documentation strategies assign clear ownership and establish regular review cycles, treating documentation maintenance as an operational requirement rather than an optional activity.

This is particularly important for systems that evolve frequently, where outdated documentation can actually be more harmful than no documentation at all.
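One way to treat documentation maintenance as an operational requirement is a lightweight pre-merge check that flags changes touching code but no documentation. The sketch below is illustrative only: the `missing_doc_update` function and the `src/` and `docs/` path prefixes are assumptions about repository layout, and the list of changed paths would come from whatever version control or CI tooling a team already uses.

```python
def missing_doc_update(changed_paths, code_prefix="src/", docs_prefix="docs/"):
    """Return True when a change set touches code but no documentation.

    The prefixes are hypothetical; adjust them to the repository's layout.
    """
    touches_code = any(path.startswith(code_prefix) for path in changed_paths)
    touches_docs = any(path.startswith(docs_prefix) for path in changed_paths)
    return touches_code and not touches_docs


# Illustrative change sets.
code_only = ["src/billing/invoice.py", "src/billing/tax.py"]
code_and_docs = ["src/billing/invoice.py", "docs/runbooks/billing.md"]

print(missing_doc_update(code_only))      # True
print(missing_doc_update(code_and_docs))  # False
```

A check like this works better as a reminder than as a hard gate, since many code changes legitimately need no documentation update.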

When to DIY vs. When to Bring in Documentation Specialists

Most organizations can handle basic documentation internally, but there are specific situations where bringing in external expertise makes sense.

Internal Documentation Scenarios

Teams should generally handle their own documentation when:

- Systems are well-understood by current team members

- Documentation needs are straightforward (API docs, basic runbooks)

- Teams have established workflows and tooling

- Changes happen frequently and require real-time updates

When External Help Makes Sense

Consider bringing in documentation specialists or consultants when:

- Legacy systems lack documentation and original builders are no longer available

- Multiple teams or vendors need to coordinate around complex system integrations

- Compliance requirements demand specific documentation formats or standards

- Organizations are planning major system migrations or modernization efforts

External teams can be particularly valuable for creating documentation frameworks and templates that internal teams can then maintain. They can also provide neutral perspectives on system architecture and help identify documentation gaps that internal teams might miss.

For organizations evaluating strategic technology consulting, documentation planning should be part of the conversation from the beginning. The best technology partners help establish documentation practices that outlast individual projects and support long-term organizational capability building.

Making Documentation Part of Your Technology Strategy

The most successful organizations treat documentation as an integral part of their technology strategy rather than a separate concern. This means considering documentation requirements during technology selection, budgeting for documentation work as part of project planning, and establishing documentation standards that support business objectives.

For organizations working with solution architecture services or planning custom software development, documentation standards should be established before development begins. This ensures that documentation becomes part of the development workflow rather than an afterthought.

Modern data observability and monitoring practices also depend heavily on well-documented systems. When teams understand how systems are supposed to work, they can more effectively identify when things go wrong and respond appropriately.

Organizations considering data strategy and architecture work should recognize that documentation becomes even more critical as data systems grow in complexity. The ability to trace data lineage, understand transformation logic, and document data quality expectations often determines whether data initiatives succeed or fail.

Whether you’re managing technology internally or working with external partners, treating documentation as a strategic capability rather than a compliance exercise pays dividends in reduced operational risk, faster onboarding, and more effective technology decision-making. The key is finding the right balance for your organization’s specific needs and growth stage.

FAQ

How much time should we budget for documentation in new software projects?

Plan for documentation work to represent 10-15% of total development effort for most projects. This includes requirements documentation during discovery, architecture documentation during design, and operational documentation during deployment. Front-load this investment, since creating shared understanding has the highest value early in the project lifecycle.
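As a quick worked example of the 10-15% guideline, the sketch below converts a total effort estimate into a documentation budget range. The `documentation_budget` function and the 800-hour project are hypothetical, chosen only to show the arithmetic.

```python
def documentation_budget(total_dev_hours, low_pct=10, high_pct=15):
    """Return the (low, high) documentation budget in hours.

    Defaults reflect the 10-15% guideline; the function itself is illustrative.
    """
    if total_dev_hours < 0:
        raise ValueError("total_dev_hours must be non-negative")
    low = total_dev_hours * low_pct / 100
    high = total_dev_hours * high_pct / 100
    return low, high


# Hypothetical project estimated at 800 development hours.
low, high = documentation_budget(800)
print(f"Budget {low:.0f} to {high:.0f} hours for documentation")
# Budget 80 to 120 hours for documentation
```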

What's the biggest mistake organizations make when trying to improve their IT documentation?

The most common mistake is trying to document everything at once, which overwhelms teams and creates maintenance burdens. Instead, start with the highest-impact documentation (usually system architecture diagrams and operational runbooks), then expand gradually based on actual usage patterns and feedback.

How do we keep documentation current without slowing down development?

Focus on documenting decisions and architecture rather than implementation details, since those change less frequently. Use automation where possible for implementation documentation, and establish clear ownership with regular review cycles. Treat documentation updates as part of the definition of done for development work.

Should we standardize on a single documentation tool across the organization?

Standardization helps with discoverability and maintenance, but don't sacrifice functionality for consistency. Choose tools that integrate well with your development workflows and support the specific types of documentation you need most. The key is having intentional choices about where information lives and how it stays synchronized.

When does it make sense to hire external help for documentation projects?

External specialists are most valuable when you're dealing with legacy systems that lack documentation, planning major system migrations, or need to establish documentation frameworks for the first time. They can also provide neutral perspectives on complex system integrations where multiple teams or vendors need to coordinate effectively.